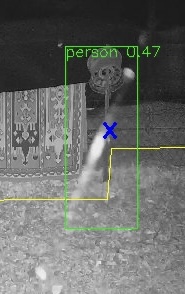

After processing millions of images and examing countless false positives a number of these provide interesting insights as to what computer vision is really learning. The following example is a classic.

In the beginning, I saw a lot of these, as I used better models they incidence dropped off somewhat. The problem is the spider web streak (Also happens with rain streaks). If you look you can start see what's going on, the model has learnt the a human is a somewhat vertical thing with a lump on the top and often angled bits sticking out the side. When the rain leave an angular streak on the image, it sees this as an arm. In this case the confidence score is not so high but I have seen ridiculous ones with very high confidence scores on good models as well many times.

The training process is lazy, it only learns as much as is necessary to pass the tests based on the training data. In this case if the features it learns mostly are that people are verticial things with lumps in different places, like on top for instance, with bits ticking out the side and it's able to pass with a high score with that then it's job done. This points to a way to improve the training, we need to penalize it for that sort of poor decision, something I will do in the future for my training sets it is put a lot of composed images made out of vertically segmented things like bags and angular things down the side, like broom handles. This should force the training process to look for more defining features.

Now I should point out, that this model is a very good one, yolov6, large model with around 59 million parameters. A lot of the on the edge models have just around the 1 million parameters mark. The number of parameters represents in principle the amount of memory it has to "potentially" learn new defining features, so long as it has it to improve it's score.

So if you are looking for deer and there are other animals that potentially good be seen as deer make sure you include that sort in the training data as well so it learns to tell the difference.

31 October 2024 10:19pm

Interesting observation, Kim!

I finally located the reference I talked about the other day at the Variety Hours After Hours:

Can AI Tell the Difference Between a Polar Bear and a Can Opener?

2019-01-25T13:00:25-08:00

The post is covering the following paper:

Deep convolutional networks do not classify based on global object shape | PLOS Computational Biology

Author summary “Deep learning” systems–specifically, deep convolutional neural networks (DCNNs)–have recently achieved near human levels of performance in object recognition tasks. It has been suggested that the processing in these systems may model or explain object perception abilities in biological vision. For humans, shape is the most important cue for recognizing objects. We tested whether deep convolutional neural networks trained to recognize objects make use of object shape. Our findings indicate that other cues, such as surface texture, play a larger role in deep network classification than in human recognition. Most crucially, we show that deep learning systems have no sensitivity to the overall shape of an object. Whereas deep learning systems can access some local shape features, such as local orientation relations, they are not sensitive to the arrangement of these edge features or global shape in general, and they do not appear to distinguish bounding contours of objects from other edge information. These findings show a crucial divergence between artificial visual systems and biological visual processes.

The paper examines how deep convolutional neural networks (DCNNs) recognize objects, focusing on their reliance on texture rather than global shape. The key points include:

- Human vs. AI Recognition: Humans use global shape for recognition, while DCNNs rely more on local features.

- Experimental Findings: DCNNs performed well with local contours but struggled with animal recognition when global shapes were disrupted.

- Conclusion: DCNNs do not effectively utilize global shape information, highlighting a critical difference between artificial and human vision systems.

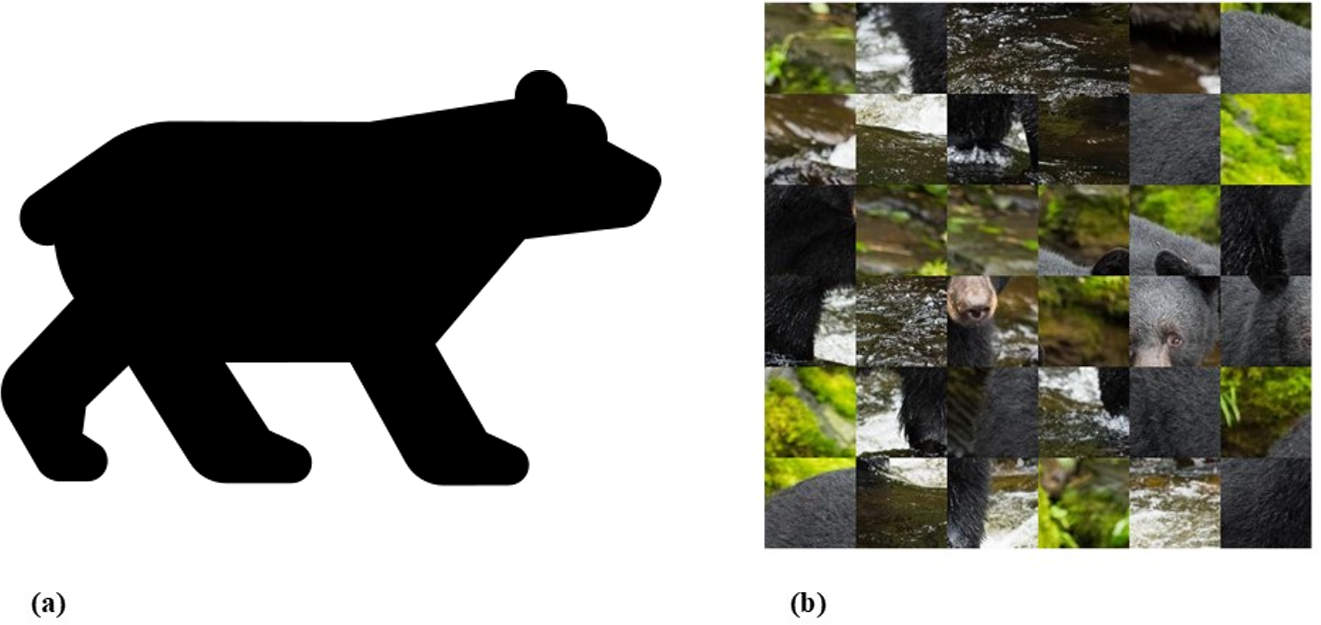

This figure from the paper illustrates that we as humans can quickly detect the left as a bear but not so quickly the right:

The paper is admittedly quite old (2018) and the DCNNs were even older (AlexNet 2012 and VGG-19 2014).

It would be really interesting to find out how modern models like the modern YOLO versions would behave in similar experiments.

Cheers, Lars

Rob Appleby

Wild Spy

1 November 2024 1:45pm

I am certainly a somewhat vertical thing with a lump on top, so I get it...

Lars Holst Hansen

Aarhus University