Earlier this year, we ran AI for Conservation: Office Hours, once again teaming up with Dan Morris from Google's AI for Nature program to connect AI specialists with conservationists for 1:1 advice and support on solving AI or ML problems in their conservation projects.

Building on the progress made in 2021 and 2023, AI for Conservation Office Hours 2025 went even further, supporting more projects than ever with a wider range of specialist expertise. In total, we received 51 applications seeking help with diverse AI challenges. From these, we selected 18 applicants who would benefit most from one-hour 1:1 support sessions and 24 who would benefit more from specialist support via email.

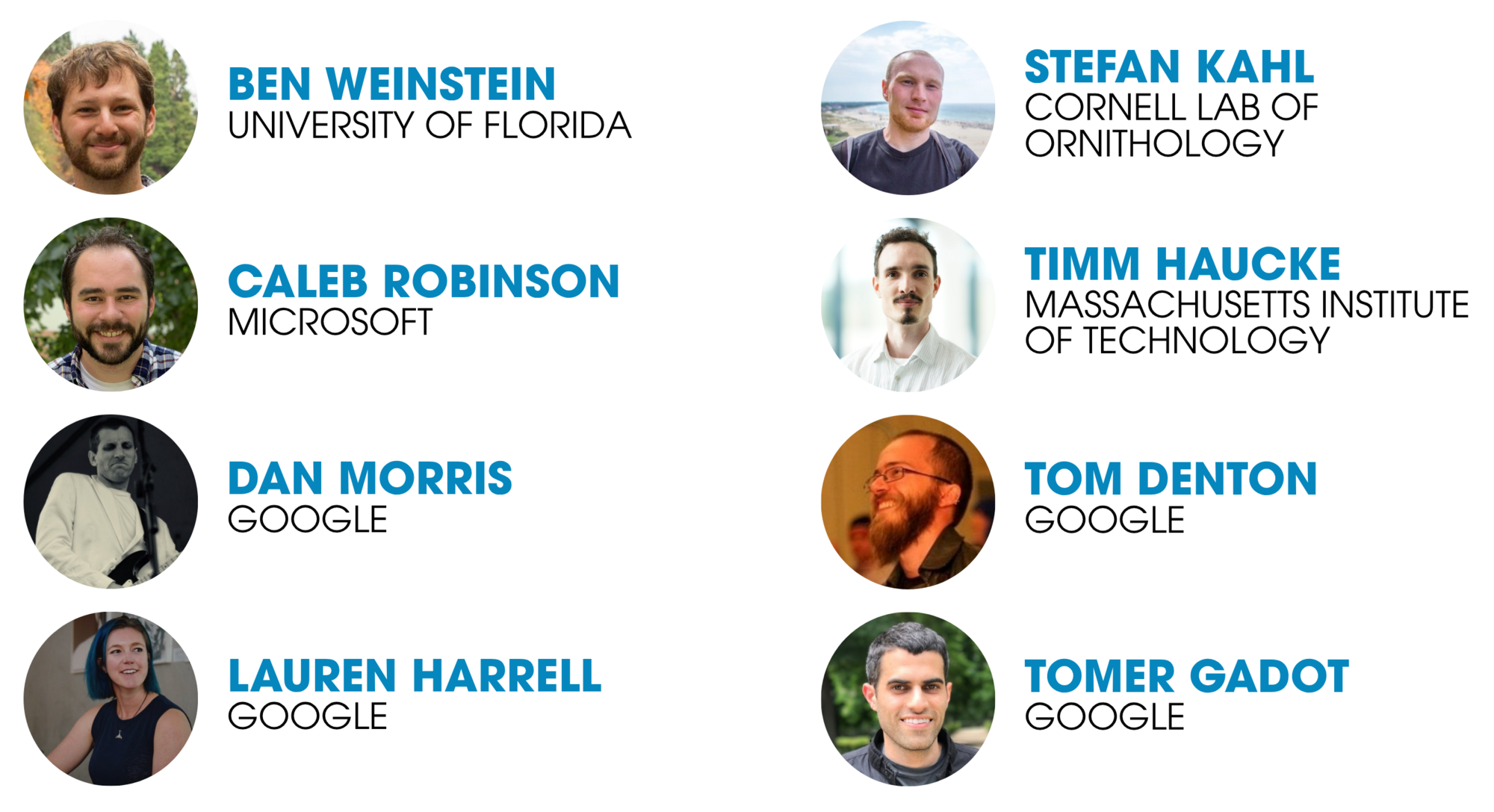

AI Specialists

This year, we had a great selection of AI specialists who dedicated their time to advise conservationists on their work:

Support Topics and Updates

There was an exciting selection of challenges for the AI specialists to tackle this year, with most issues falling into three main areas of AI implementation: camera traps/computer vision, acoustics, and geospatial. Some projects also sought support on using LLMs for broader data analysis.

Acoustics

Nine of our 1:1 virtual sessions focussed on advice for acoustic analysis. Topics included distinguishing between individual howler monkey calls, de-noising acoustic recordings of Atlantic cod to reduce electrical noise interference, building a library of annotations for different species and vocalisation types, identifying amphibian vocalisations, comparing soundscapes using CNN models, and classifying primate species based on vocalisations.

One acoustics project we advised on came from Agostina Juncosa, a PhD student from Argentina studying nocturnal raptors using passive acoustic monitoring (PAM). Agostina’s data came from 140 sites, and her target species included 13 types of owl, 9 nightjars, and 3 other bird species. She was encountering issues using BirdNET to accurately identify her target species: the model performed poorly at identifying the selected species and also mislabelled calls as other animals, such as frogs. Agostina sought advice on the best path forward and received it from Stefan Kahl, who develops BirdNET at the Cornell Lab of Ornithology, along with Tom Denton and Dan Morris from Google.

The specialists provided advice such as:

- Incorrect classifications are less concerning than missed detections, because as long as the calls are detected in the recordings, they can be manually classified afterwards.

- Since the vocalisations of the target species fall within a low-frequency range, Agostina should apply a low-pass filter to exclude everything above 5 kHz, which would help streamline data processing and sampling.

- Focus on data curation, acquire more data and experiment with a number of different modelling approaches.
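To make the low-pass filtering suggestion concrete, here is a minimal sketch in plain Python. It is not the workflow used in the session. The only detail taken from the advice is the 5 kHz cutoff; the windowed-sinc design, the Hamming window, the 101 taps, the 48 kHz sample rate, and all function names are our own assumptions for illustration. In practice, a signal-processing library would do this faster.

```python
import math

def lowpass_fir(cutoff_hz, sample_rate_hz, num_taps=101):
    """Design windowed-sinc low-pass FIR coefficients (Hamming window).

    num_taps is assumed to be odd so the filter has a single centre tap.
    """
    fc = cutoff_hz / sample_rate_hz          # cutoff in cycles per sample
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        x = n - mid
        # Ideal low-pass impulse response (sinc), with the x == 0 limit handled.
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]         # normalise to unity gain at 0 Hz

def apply_fir(signal, taps):
    """Convolve signal with taps, keeping the input length (zero-padded edges)."""
    half = len(taps) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, t in enumerate(taps):
            j = i + half - k
            if 0 <= j < len(signal):
                acc += t * signal[j]
        out.append(acc)
    return out

def tone(freq_hz, sample_rate_hz, n):
    """Generate n samples of a unit-amplitude sine wave."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]

def rms(values):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))

# Assumed recording sample rate; the 5 kHz cutoff is the value from the advice.
SAMPLE_RATE = 48_000
taps = lowpass_fir(5_000, SAMPLE_RATE)

# Synthetic stand-ins for a low-pitched call and higher-frequency noise.
low_passed = apply_fir(tone(1_000, SAMPLE_RATE, 2_000), taps)
high_passed = apply_fir(tone(10_000, SAMPLE_RATE, 2_000), taps)

# Compare levels away from the edges, where zero-padding distorts the output.
print("1 kHz tone RMS after filtering: ", round(rms(low_passed[200:-200]), 3))
print("10 kHz tone RMS after filtering:", round(rms(high_passed[200:-200]), 5))
```

The 1 kHz tone should pass through almost unchanged while the 10 kHz tone is strongly attenuated. This is the effect the specialists were describing for recordings dominated by low-frequency calls.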

“The experts were incredibly generous with their time. They examined my workflow, and Stefan Kahl and Tom Denton told me it made sense and encouraged me to keep going… Overall, the session helped me to validate my workflow, gave me clear next steps, gave me confidence and renewed my enthusiasm for tackling these challenges.”

Agostina Juncosa, Consejo Nacional de Investigaciones Científicas y Técnicas (CONICET)

Another acoustics project supported this year came from Consolata Gathoni Gitau, who is evaluating ecosystem degradation and restoration gradients in tropical savannas, specifically in Kenya's Maasai Mara ecosystem. Her data came from 16 recorders collecting one week of data per season, with three seasons of data collected so far and plans to record four seasons in 2025 and four in 2026. The project aimed to track overall soundscape degradation over time and assess whether soundscapes can serve as reliable indicators of ecosystem health compared to traditional methods.

Consolata was seeking advice on:

- Which AI tools or frameworks are best suited to soundscape analysis and integrating multi-source datasets.

- How to process spectrograms to extract patterns related to degradation.

- How to combine spectrograms and satellite images in a multi-input CNN architecture, and how to use it to predict ecosystem health or degradation levels by combining auditory and visual cues.

- How to improve the sensitivity and accuracy of acoustic indices using AI.

Her session involved support from Stefan Kahl and Dan Morris. Alongside streamlining her process, they recommended she:

- Use BirdNET to build a species-agnostic detector without coding.

- Run BirdNET on the data as an experiment, then use a western Kenya dataset provided to train a custom version of BirdNET.

“The advice provided by the AI specialist was instrumental in refining the direction of our project. Specifically, the specialist helped me identify key areas where AI could streamline our data processing workflows, particularly around automating data classification. As a result of the session, we restructured part of our data pipeline to accommodate the model training practices, which has improved overall efficiency and reduced training time. Overall, the session has accelerated our progress significantly and provided clarity on our technical direction.”

Consolata Gathoni Gitau, Nottingham Trent University

Camera Traps / Computer Vision / Drones / Geospatial

Eight 1:1 virtual sessions involved AI for camera traps or computer vision projects. Topics included lion identification in Northern Cameroon, identification of polar bears, nest prey identification by African Crowned Eagles, optimisation of real-time image processing and species identification from bird feeder footage, and image classification for tropical mammals in Southeast Asia to help estimate species abundance. Three of these projects also involved the use of drone technology and geospatial tools, including detection of wire snares using SAR imagery, botanical surveys using drone imagery in Hawaii, and aboveground biomass estimation using deep learning.

One camera trap project we supported was Varalika Jain’s. She sought advice on how AI could assist in identifying when prey was delivered to the nest of African Crowned Eagles in South Africa. She was specifically curious about how AI could automate the filtering of nest camera photos to sequences of interest, how it could identify complex prey, and how to link camera data to GPS data to identify where hunts are made across the landscape.

During the call, Varalika and Dan Morris discussed how software such as Timelapse could be added to the workflow, and how AI tools such as MegaDetector and AddaxAI could then be used to sort the images by whether the eagle chick is present or absent, which is an indicator of prey delivery. One key piece of advice was that although a species classifier may not be able to identify the eagle chick as the correct species, it will likely be able to filter out all the images that do not contain any birds, which would drastically reduce how many images need to be checked.
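As a rough illustration of the filtering step, the sketch below keeps only the images in which a detector found an animal, so that empty frames never reach manual review. It assumes a results file shaped like MegaDetector's batch output as we understand it (a `detection_categories` map plus an `images` list whose detections carry `category` and `conf`). The 0.2 confidence threshold, the function name, and the file names are hypothetical, and this is not the exact workflow discussed on the call. MegaDetector reports a generic "animal" class rather than birds specifically, so a species classifier would still be needed to narrow the results to birds.

```python
import json

def images_with_animals(md_results, min_conf=0.2):
    """Return file names with at least one 'animal' detection at or above min_conf."""
    # Category IDs are strings in the results file; look up the ones named "animal".
    animal_ids = {
        cid for cid, name in md_results["detection_categories"].items()
        if name == "animal"
    }
    keep = []
    for image in md_results["images"]:
        # Images that failed to process may have no detections list at all.
        detections = image.get("detections") or []
        if any(d["category"] in animal_ids and d["conf"] >= min_conf
               for d in detections):
            keep.append(image["file"])
    return keep

# Tiny hand-written example standing in for a real results file.
sample = json.loads("""
{
  "detection_categories": {"1": "animal", "2": "person", "3": "vehicle"},
  "images": [
    {"file": "nest/IMG_0001.JPG",
     "detections": [{"category": "1", "conf": 0.91, "bbox": [0.1, 0.2, 0.3, 0.4]}]},
    {"file": "nest/IMG_0002.JPG", "detections": []},
    {"file": "nest/IMG_0003.JPG",
     "detections": [{"category": "1", "conf": 0.05, "bbox": [0.5, 0.5, 0.1, 0.1]}]},
    {"file": "nest/IMG_0004.JPG",
     "detections": [{"category": "2", "conf": 0.88, "bbox": [0.0, 0.0, 0.5, 0.9]}]}
  ]
}
""")

to_review = images_with_animals(sample)
print(f"{len(to_review)} of {len(sample['images'])} images need review: {to_review}")
```

A lower threshold keeps more borderline images and reduces the risk of discarding a real prey delivery, at the cost of more manual checking.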

“Dan was incredibly helpful in showing me which tools would be relevant for my camera trap analysis. We talked about workflow management and how to work efficiently. We also explored where workflow management tools could benefit, and where AI could benefit. Overall, it was insightful for me to get an overview of how to think about camera trap analysis.”

Varalika Jain, University of Vienna

In another support session, Dan Morris brought together two separate applications on a similar topic, from Filippo Varini and Océane Boulais, which led to a wider collaborative effort to develop a new model to classify fish species. Filippo Varini initially reached out seeking advice on classifying deep sea animals encountered during submersible/ROV dives, where CNN object detectors were struggling to draw bounding boxes around the amorphous, interlaid aggregations of marine life found in the benthic environment. Océane Boulais initially sought advice on creating a ‘Segment Any Fish model’ using ESP32-camera data from kelp forests off the coast of La Jolla, California. Together they had a productive call, which has since grown into a much larger community collaboration to build a comprehensive open-source fish detection model.

“Office Hours helped Dan, Océane and I meet and discuss applications of AI for Underwater Computer Vision. Dan and I had been discussing the idea of a "universal fish detector" (like the MegaDetector on land) for a year and thanks to Office Hours we had the spark to properly start the project. Now we have already collected and processed more than 30 datasets and compiled them into a unique dataset with more than 500,000 images. We have now started training the models and will soon share the dataset and models openly to the community!”

Filippo Varini, OceanX

Thank you to all the AI specialists for giving their valuable time to help support these conservation projects and for making this whole collaboration possible. A special thanks to all the members of the WILDLABS Team who stepped in to help run these calls as well. To all the conservationists who took part in this year’s AI for Conservation Office Hours, please keep in touch and let us know how your projects are progressing in the future!

Thank you, and we’ll see you in the next AI for Conservation Office Hours!
