Acoustic sensors enable efficient, non-invasive monitoring of a wide range of species, including many that are difficult to monitor in other ways. Although their use was initially limited, largely by cost and hardware constraints, the development of low-cost, open-source devices such as the AudioMoth in recent years has greatly increased access and opened up new avenues of research. For example, some teams are using them to identify illicit human activities by detecting associated sounds such as gunshots, vehicles, or chainsaws (e.g. OpenEars).

With this relatively new dimension of wildlife monitoring advancing rapidly in both marine and terrestrial systems, it is crucial that we identify and share information about the utility and constraints of these sensors to inform monitoring efforts. A recent study identified advances in hardware and machine learning applications, as well as the early development of acoustic biodiversity indicators, as factors driving progress in the field. As limitations, the authors highlight insufficient reference sound libraries, a lack of open-source audio processing tools, and the need to standardize survey and analysis protocols. They also stress the importance of collaboration in moving forward, which is precisely what this group aims to facilitate.

If you're new to acoustic monitoring and want to get up to speed on the basics, check out these beginner's resources and conversations from across the WILDLABS platform:

Three Resources for Beginners:

- Listening to Nature: The Emerging Field of Bioacoustics, Adam Welz

- Ecoacoustics and Biodiversity Monitoring, RSEC Journal

- Monitoring Ecosystems through Sound: The Present and Future of Passive Acoustics, Ella Browning and Rory Gibb

Three Forum Threads for Beginners:

- AudioMoth user guide | Tessa Rhinehart

- Audiomoth and Natterjack Monitoring (UK) | Stuart Newson

- Help with analysing bat recordings from Audiomoth | Carlos Abrahams

Three Tutorials for Beginners:

- "How do I perform automated recordings of bird assemblages?" | Carlos Abrahams, Tech Tutors

- "How do I scale up acoustic surveys with Audiomoths and automated processing?" | Tessa Rhinehart, Tech Tutors

- Acoustic Monitoring | David Watson, Ruby Lee, Andy Hill, and Dimitri Ponirakis, Virtual Meetups

Want to know more about acoustic monitoring and learn from experts in the WILDLABS community? Jump into the discussion in our Acoustic Monitoring group!

Header image: Carly Batist


- Stop The Desert: Founder of Stop The Desert, leading global efforts in regenerative agriculture, sustainable travel, and inclusive development.

- WILDLABS & Wildlife Conservation Society (WCS): I'm the Bioacoustics Research Analyst at WILDLABS. I'm a marine biologist with a particular interest in the acoustic behavior of cetaceans. I'm also a backend web developer, hoping to use technology to improve wildlife conservation efforts.

- @JRousseau (she/her)

- R & D Tech | Industrial Designer | Wildlife Management Technology

- @YvanSG (he/him), Clemson University: Seabird ecologist at Clemson University, South Carolina Cooperative Fish and Wildlife Research Unit. Co-chair of the Caribbean Seabird Working Group. Occasional beret wearer.

- @carlybatist (she/her): ecoacoustics, biodiversity monitoring, nature tech

- I'm a software developer. I have projects in practical object detection and alerting that is well suited to poacher detection, and a Raspberry Pi-based sound-localizing ARU project.

- @Ebennitt (she/her): Working primarily in Botswana and other southern African countries, on wild mammal movement, ecology and physiology.

- @Kyle_Birchard (he/him): Building tools for research and management of insect populations.

- @jsulloa (he/him), Instituto Humboldt & Red Ecoacústica Colombiana: Scientist and engineer developing smart tools for ecology and biodiversity conservation.

- @TaliaSpeaker (she/her), WILDLABS & World Wide Fund for Nature/World Wildlife Fund (WWF): I'm the Executive Manager of WILDLABS at WWF.

- @sam_lima (she/her): Postdoctoral Associate studying burrowing owls at the San Diego Zoo Wildlife Alliance.

Read about the advice provided by AI specialists in AI Conservation Office Hours 2025 earlier this year and reflect on how it has helped projects so far.

6 August 2025

If you're a Post-Doctoral Fellow, a PhD student, or a member of the research staff interested in applying your computational skills to support active research publications, please read on to learn about the Cross-...

5 August 2025

A group of Montreal labs is searching for a postdoctoral researcher for a project applying machine learning to the spatial scaling of biodiversity.

28 July 2025

The Marine Innovation Lab for Leading-edge Oceanography develops hardware and software to expand the ocean observing network and for the sustainable management of natural resources. For Fall 2026, we are actively...

24 July 2025

Still unfunded, we are looking for a lab office, materials, and used instruments, and hope to set up our educational basement.

17 July 2025

Join us in curating an annotated acoustic dataset for India! We accept both strong and weak labels; read the FAQ document (linked below) for more information and sign the Data Agreement form to join.

17 July 2025

Conservation technologies have a critical role in supporting biodiversity surveys at scale. Acoustic sensors have been increasingly adopted in ecological research, enabling us to expand our capacities in assessing...

15 June 2025

HawkEars is a deep learning model designed specifically to recognize the calls of 328 Canadian bird species and 13 amphibians.

13 May 2025

The Biological Recording Company's ecoTECH YouTube playlist has a focus on webinars about Bioacoustic monitoring.

29 April 2025

This paper includes, in its supplementary materials, a helpful table comparing several acoustic recorders used in terrestrial environments, along with associated audio and metadata information.

15 April 2025

Conservation International is proud to announce the launch of the Nature Tech for Biodiversity Sector Map, developed in partnership with the Nature Tech Collective!

1 April 2025

PhD position available at the University of Konstanz in the Active Sensing Collective Group!

28 March 2025


Recently updated products

| Description | Groups | Updated |
|---|---|---|
| As someone from the future, thank you for posting this update! | Acoustics | 2 days 3 hours ago |
| Dear Colleagues, I hope this message finds you well. I am Manuel Sánchez Nivicela, an independent ornithology researcher based in Ecuador,... | Funding and Finance, Acoustics, Latin America Community | 2 days 21 hours ago |
| Kudos for such an innovative approach: integrating additional sensors with acoustic recorders is a brilliant step forward! I'm especially interested in how you tackle energy... | Acoustics, AI for Conservation, Latin America Community, Open Source Solutions | 5 days 14 hours ago |
| 🐝 Apis Nomadica Labs: Mapping Royal Jelly Terroir Through Mobile Bee Biotech. About Us: Apis Nomadica Labs is a mobile apiary research... | Acoustics, Conservation Tech Training and Education, Animal Movement, Human-Wildlife Coexistence | 6 days 6 hours ago |
| Awesome, I think I get what you mean! Thank you! | Acoustics | 1 week 6 days ago |
| This is such a compelling direction, especially the idea of linking unsupervised vocalisation clustering to generative models for controlled playback. I haven't seen much done... | Acoustics, AI for Conservation, Emerging Tech | 2 weeks ago |
| Hi Josept! Thank you for sharing your experience! These types of feedback are important for the community to know about when choosing what tech to use for their work. Would you be... | Acoustics, Latin America Community, Software Development | 2 weeks 5 days ago |
| Another option you can try is HawkEars, which is a classifier made particularly for Canadian birds. Unlike BirdNET, it doesn't have a graphical interface, though. | Acoustics | 2 weeks 5 days ago |
| PROJECT UPDATE: We are curating an open-access, annotated acoustic dataset for India! 🇮🇳🌿 This project has two main goals: 🎧 To develop a freely available dataset of annotated... | Acoustics | 3 weeks 6 days ago |
| Just to provide an update to my post from 2021. Colleagues and I at the BTO have built a species classifier now for the BTO Acoustic Pipeline for the sound identification of all... | Acoustics | 1 month ago |
| Thanks @Adrien_Pajot, we are! Also buoys! | Acoustics, Animal Movement | 1 month 1 week ago |
| I create ocean exploration and marine life content on YouTube, whether it be recording nautilus on BRUVs, swimming with endangered bowmouth... | Acoustics, AI for Conservation, Animal Movement, Camera Traps, Citizen Science, Drones, Emerging Tech, Marine Conservation, Sensors, Sustainable Fishing Challenges, Wildlife Crime | 1 month 2 weeks ago |

Safe and Sound: a standard for bioacoustic data

7 April 2025 11:05pm

11 July 2025 3:29pm

For anyone seeing this in the future, an update can be seen in the June 2025 event:

11 August 2025 12:15pm

As someone from the future, thank you for posting this update!

Suggestions for research funds

10 August 2025 5:56pm

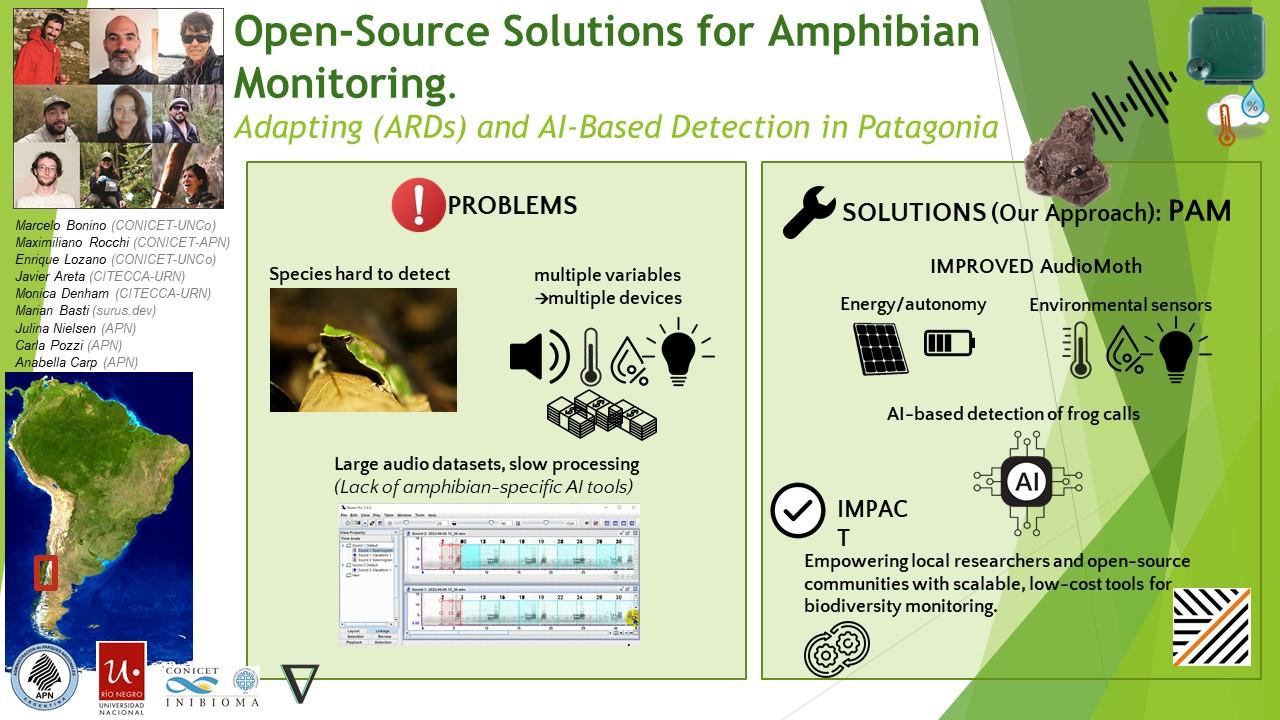

🐸 WILDLABS Awards 2025: Open-Source Solutions for Amphibian Monitoring: Adapting Autonomous Recording Devices (ARDs) and AI-Based Detection in Patagonia

27 May 2025 8:39pm

7 August 2025 9:27pm

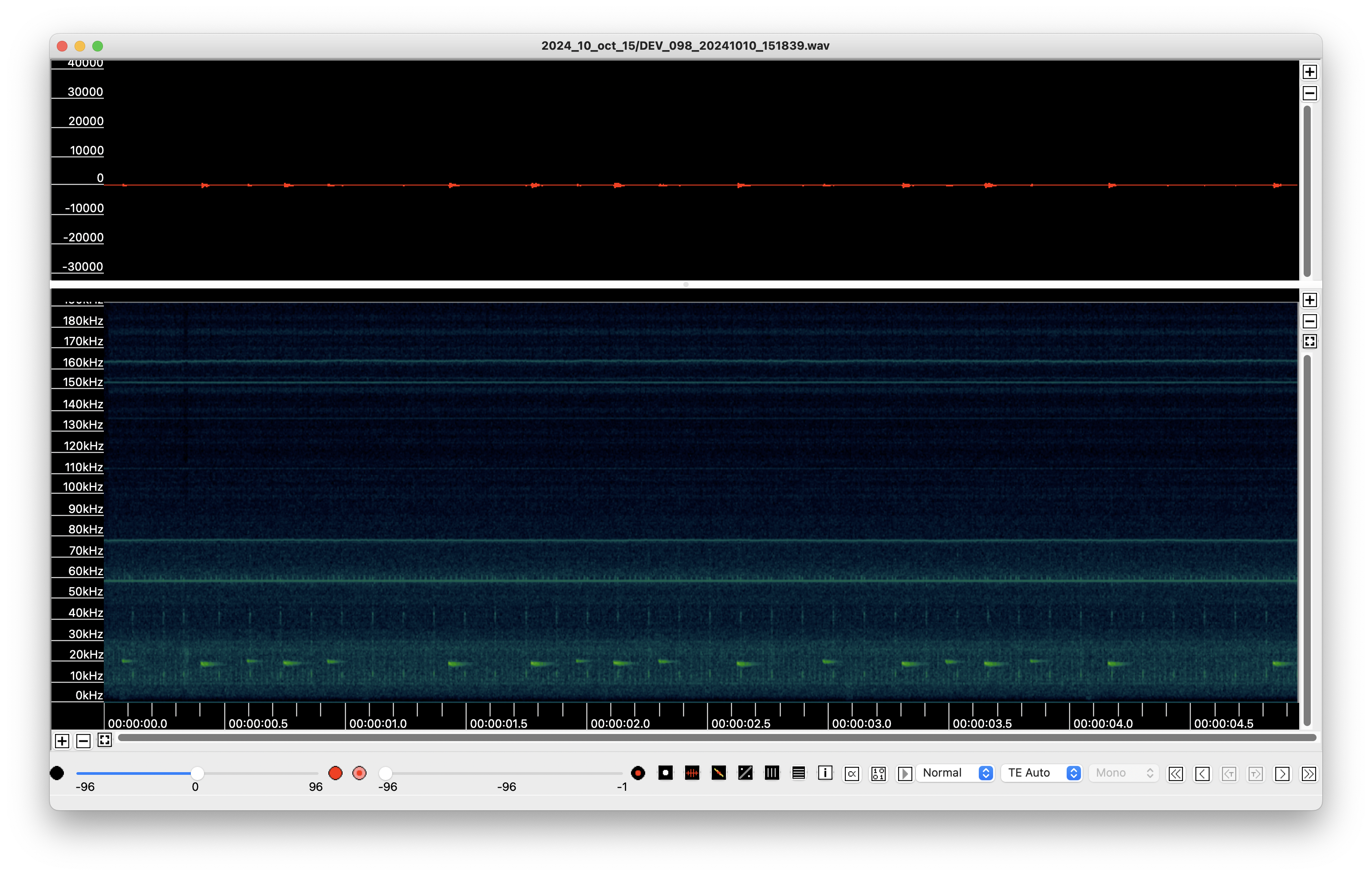

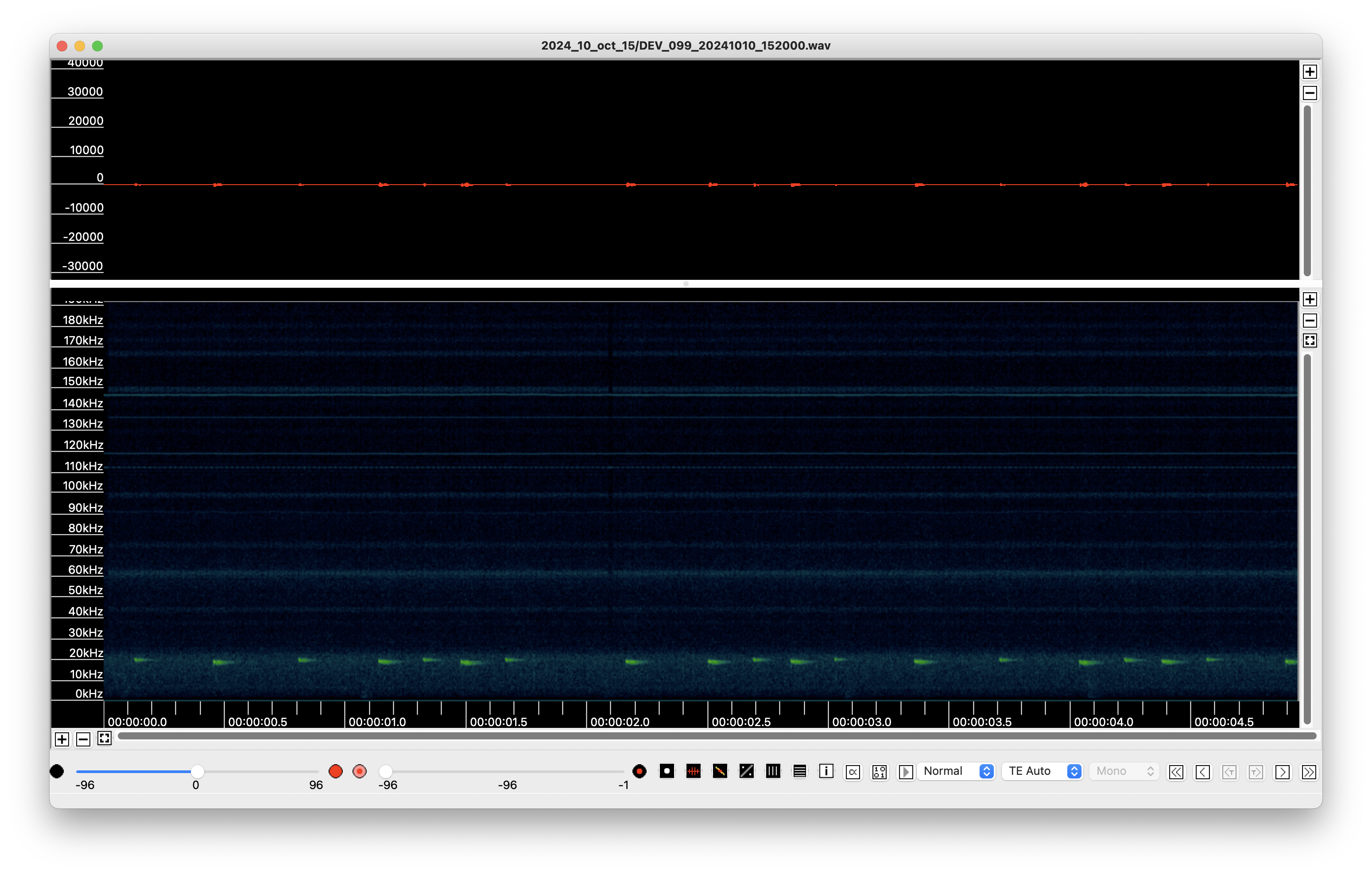

Project Update: Sensors, Sounds, and DIY Solutions

We continue making progress on our bioacoustics project focused on the conservation of Patagonian amphibians, thanks to the support of WILDLABS. Here are some of the areas we’ve been working on in recent months:


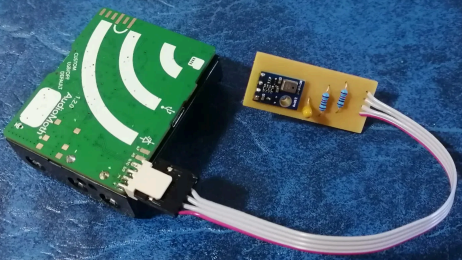

1. Hardware

One of our main goals was to explore how to improve AudioMoth recorders to capture not only sound but also key environmental variables for amphibian monitoring. We tested an implementation of the I2C protocol using bit banging via the GPIO pins, allowing us to connect external sensors. The modified firmware is already available in our repository:

👉 https://gitlab.com/emiliobascary/audiomoth

We are still working on managing power consumption and integrating specific sensors, but the initial tests have been promising.
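For readers new to bit banging: instead of relying on a hardware I2C peripheral, firmware toggles two GPIO lines (SCL for the clock, SDA for data) in software to reproduce the protocol. The sketch below is a Python simulation of that idea, not the team's firmware (which lives in the GitLab repository above). It records line levels instead of writing real GPIO registers, omits timing delays, open-drain details and clock stretching, and uses 0x44 as a purely hypothetical sensor address.

```python
from typing import Callable, List, Tuple


class BitBangI2C:
    """Simulated I2C controller: records (SCL, SDA) levels instead of driving real GPIO pins."""

    def __init__(self, read_sda: Callable[[], int] = lambda: 0) -> None:
        # read_sda stands in for sampling the SDA pin while the peripheral drives it (0 = ACK).
        self.read_sda = read_sda
        self.trace: List[Tuple[int, int]] = []

    def _set(self, scl: int, sda: int) -> None:
        # On hardware: write both GPIO levels, then wait a fraction of a clock period.
        self.trace.append((scl, sda))

    def start(self) -> None:
        # START condition: SDA falls while SCL is high.
        self._set(1, 1)
        self._set(1, 0)
        self._set(0, 0)

    def stop(self) -> None:
        # STOP condition: SDA rises while SCL is high.
        self._set(0, 0)
        self._set(1, 0)
        self._set(1, 1)

    def write_byte(self, byte: int) -> bool:
        for i in range(7, -1, -1):  # most significant bit first
            bit = (byte >> i) & 1
            self._set(0, bit)  # SDA may only change while SCL is low
            self._set(1, bit)  # peripheral samples SDA while SCL is high
            self._set(0, bit)
        # Ninth clock: release SDA (pulled high) and let the peripheral pull it low to ACK.
        self._set(0, 1)
        self._set(1, 1)
        acked = self.read_sda() == 0
        self._set(0, 1)
        return acked

    def write(self, address: int, data: bytes) -> bool:
        """Send a 7-bit address with the write bit, then data; stops sending data after a NACK."""
        self.start()
        ok = self.write_byte((address << 1) | 0)
        for b in data:
            ok = ok and self.write_byte(b)
        self.stop()
        return ok


def sampled_bits(trace: List[Tuple[int, int]]) -> List[int]:
    """SDA level at every rising edge of SCL, i.e. what a peripheral would read."""
    return [sda for (prev_scl, _), (scl, sda) in zip(trace, trace[1:]) if prev_scl == 0 and scl == 1]


bus = BitBangI2C()
print(bus.write(0x44, bytes([0x2C])), sampled_bits(bus.trace)[:8])
```

On a real AudioMoth the same sequence would be driven by GPIO writes with short delays between steps, which is where the extra power and timing considerations mentioned above come in.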


2. Software (AI)

We explored different strategies for automatically detecting vocalizations in complex acoustic landscapes.

BirdNET is by far the most widely used tool, but we noted that it is implemented in TensorFlow, a library that is becoming somewhat outdated.

This gave us the opportunity to reimplement it in PyTorch (currently the most widely used and actively maintained deep learning library) and begin pretraining a new model using AnuraSet and our own data. Given the rapid evolution of neural network architectures, we also took the chance to experiment with Transformers — specifically, Whisper and DeltaNet.

Our code and progress will be shared soon on GitHub.
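Window-based classifiers such as BirdNET score a long recording in short fixed-length segments and then threshold those scores. A minimal sketch of that bookkeeping follows, assuming 3-second windows (the segment length BirdNET is commonly run with) and a list of per-window scores from some classifier. The model itself (TensorFlow, PyTorch, or otherwise) is deliberately left out, and the scores and threshold in the usage are hypothetical.

```python
from typing import List, Sequence, Tuple


def window_starts(duration_s: float, window_s: float = 3.0, overlap_s: float = 0.0) -> List[float]:
    """Start times of full-length windows covering a recording; a trailing partial window is dropped."""
    if not 0.0 <= overlap_s < window_s:
        raise ValueError("overlap_s must be >= 0 and smaller than window_s")
    if duration_s < window_s:
        return []
    step = window_s - overlap_s
    n = int((duration_s - window_s) // step) + 1
    return [i * step for i in range(n)]  # multiply instead of accumulating to avoid float drift


def merge_detections(starts: Sequence[float], scores: Sequence[float], threshold: float,
                     window_s: float = 3.0) -> List[Tuple[float, float]]:
    """Turn per-window scores into (start, end) events, merging windows that touch or overlap."""
    events: List[Tuple[float, float]] = []
    for start, score in zip(starts, scores):
        if score < threshold:
            continue
        end = start + window_s
        if events and start <= events[-1][1]:
            events[-1] = (events[-1][0], max(events[-1][1], end))
        else:
            events.append((start, end))
    return events


starts = window_starts(15.0)          # [0.0, 3.0, 6.0, 9.0, 12.0]
scores = [0.1, 0.8, 0.9, 0.2, 0.7]    # hypothetical classifier confidences
print(merge_detections(starts, scores, threshold=0.5))  # [(3.0, 9.0), (12.0, 15.0)]
```

Keeping windowing and merging separate from the model makes it easier to swap a TensorFlow model for a PyTorch or Transformer one without changing how detections are reported.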


3. Miscellaneous

Alongside hardware and software, we’ve been refining our workflow.

We found interesting points of alignment with the “Safe and Sound: a standard for bioacoustic data” initiative (still in progress), which offers clear guidelines for folder structure and data handling in bioacoustics. This is helping us design protocols that ensure organization, traceability, and future reuse of our data.
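As one illustration of the kind of traceability such guidelines aim for, the sketch below parses the recording time out of an AudioMoth file name and files the recording under a project/site/deployment/date folder. The folder layout and the `archive_path` helper are hypothetical and are not taken from the Safe and Sound standard. The parser assumes AudioMoth's usual `YYYYMMDD_HHMMSS.WAV` naming and treats the timestamp as UTC, so check both against your firmware version and configuration.

```python
import re
from datetime import datetime, timezone
from pathlib import PurePosixPath

# Assumed AudioMoth naming: YYYYMMDD_HHMMSS.WAV, interpreted here as UTC.
AUDIOMOTH_NAME = re.compile(r"^(\d{8})_(\d{6})\.wav$", re.IGNORECASE)


def recording_time(filename: str) -> datetime:
    """Parse the start time encoded in an AudioMoth-style file name."""
    match = AUDIOMOTH_NAME.match(filename)
    if match is None:
        raise ValueError(f"not an AudioMoth-style file name: {filename!r}")
    stamp = datetime.strptime(match.group(1) + match.group(2), "%Y%m%d%H%M%S")
    return stamp.replace(tzinfo=timezone.utc)


def archive_path(project: str, site: str, deployment: str, filename: str) -> PurePosixPath:
    """Hypothetical layout: <project>/<site>/<deployment>/<YYYY-MM-DD>/<site>_<original file name>."""
    day = recording_time(filename).strftime("%Y-%m-%d")
    # Prefixing the site keeps file names unique if recordings are later pooled in one folder.
    return PurePosixPath(project, site, deployment, day, f"{site}_{filename}")


print(archive_path("patagonia_anurans", "site01", "dep2025-03", "20250315_203000.WAV"))
```

Deriving every path from the file name and a few deployment fields means the layout can be regenerated, or changed to match a published standard, without hand-editing folders.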

We also discussed annotation criteria with @jsulloa to ensure consistent and replicable labeling that supports the training of automatic models.
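Consistent labeling often comes down to how time-stamped (strong) annotations relate to clip-level presence (weak) labels, the two label types mentioned in the India dataset call elsewhere on this page. A minimal sketch of deriving weak labels from strong ones, assuming a hypothetical annotation schema, placeholder species codes, a 3-second clip length, and a rule that a clip is positive only if an annotation overlaps it by more than `min_overlap_s` seconds:

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class StrongLabel:
    """A time-stamped annotation within one recording (hypothetical schema)."""
    label: str
    start_s: float
    end_s: float


def to_weak_labels(annotations: List[StrongLabel], duration_s: float, clip_s: float = 3.0,
                   min_overlap_s: float = 0.0) -> Dict[int, Set[str]]:
    """Map each full clip index to the labels whose annotations overlap it by more than min_overlap_s."""
    n_clips = int(duration_s // clip_s)
    clips: Dict[int, Set[str]] = {i: set() for i in range(n_clips)}
    for ann in annotations:
        for i in range(n_clips):
            clip_start, clip_end = i * clip_s, (i + 1) * clip_s
            overlap = min(clip_end, ann.end_s) - max(clip_start, ann.start_s)
            if overlap > min_overlap_s:  # strictly greater: merely touching a boundary does not count
                clips[i].add(ann.label)
    return clips


calls = [StrongLabel("sp_A", 1.0, 4.0), StrongLabel("sp_B", 7.5, 8.0)]
print(to_weak_labels(calls, duration_s=9.0))
```

Agreeing on the overlap rule in advance is one concrete way to keep labels replicable across annotators, since the same strong annotations always yield the same weak labels.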

We're excited to continue sharing experiences with the Latin America Community; we know we share many of the same challenges, but also great potential to apply these technologies to conservation in our region.


7 August 2025 10:02pm

Love this team <3

We would love to connect with teams also working on the whole AI pipeline: pretraining, fine-tuning and deployment! Training of the models is in progress, and we know there is a lot to learn from your experiences!

Also, we are approaching UI design and development with a software-on-demand philosophy. Why depend on third-party software and have to learn, adapt to, and comply with its UX and ecosystem? With agentic AI in our IDEs, we can quickly and reliably iterate towards tools designed for our own specific needs and practices, putting the researcher first.

Your ideas, thoughts or critiques are very much welcome!

8 August 2025 1:20pm

Kudos for such an innovative approach: integrating additional sensors with acoustic recorders is a brilliant step forward! I'm especially interested in how you tackle energy autonomy, which I personally see as the main limitation of AudioMoths.

Looking forward to seeing how your system evolves!
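Energy autonomy is largely a power-budget calculation: the average current is the duty-cycle-weighted mix of active and sleep draw, and any added sensor raises the active term. The sketch below assumes the sensor is powered only while recording, uses placeholder currents and capacity (not measured AudioMoth or sensor figures), and applies a hypothetical 0.8 derating factor to allow for capacity that cannot be used in practice.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float,
                       sensor_ma: float = 0.0) -> float:
    """Duty-cycle-weighted average draw; the sensor is assumed to be powered only while recording."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * (active_ma + sensor_ma) + (1.0 - duty_cycle) * sleep_ma


def battery_life_days(capacity_mah: float, avg_ma: float, derate: float = 0.8) -> float:
    """Runtime in days from usable capacity (capacity x derate) and average current."""
    return capacity_mah * derate / avg_ma / 24.0


# Placeholder numbers for illustration only.
base = average_current_ma(active_ma=10.0, sleep_ma=0.01, duty_cycle=0.5)
with_sensor = average_current_ma(active_ma=10.0, sleep_ma=0.01, duty_cycle=0.5, sensor_ma=1.5)
print(round(battery_life_days(2000, base), 1), round(battery_life_days(2000, with_sensor), 1))
```

Because the sensor term is multiplied by the duty cycle, powering sensors only during recording windows (or sampling them less often) is usually the first lever for stretching deployments.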

Apis Nomadica Labs: Mobile Bee-Search for the Future of Food & Medicine

7 August 2025 8:23am

Tech4Nature Presents: 2025 Innovation Challenge Workshop Series - Registration Now Open!

6 August 2025 5:08pm

AI for Conservation Office Hours: 2025 Review

Jake Burton

and 1 more


6 August 2025 2:16pm

Collaborate on Conservation Tech Publications With HAAG

5 August 2025 11:46pm

WEBINAR: Sound Decisions

5 August 2025 5:15pm

Issues with Audiomoth recorders

10 July 2025 10:54pm

24 July 2025 9:20pm

Cool! Can you specify how you wrap the painter's tape? Is it all the way around the sensor, partially, or in some other configuration?

30 July 2025 4:39pm

We don't wrap it all the way around, just halfway, sticking to either side of the battery case right next to, but not touching, the circuit board. If that's not clear I can take a pic :)

30 July 2025 10:00pm

Awesome, I think I get what you mean! Thank you!

A technical and conceptual curiosity... Could generative AI help us simulate or decode animal communication?

21 July 2025 1:04am

25 July 2025 2:30pm

Hi Jorge,

I think you'll find this research interesting: https://blog.google/technology/ai/dolphingemma/

Google's researchers did exactly that. They trained an LLM on dolphin vocalizations to produce continuation output, exactly as in the autoregressive papers you've mentioned, VALL-E or WaveNet.
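To make "continuation output" concrete, here is a toy autoregressive model: it learns which vocalization unit tends to follow which, then extends a prompt one unit at a time, feeding each prediction back in as context. It is a first-order Markov (bigram) model over hypothetical discrete unit labels (for example, cluster IDs from an unsupervised clustering step), for illustration only; it is not how DolphinGemma works, which uses a far larger neural network.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def fit_bigram(sequences: List[List[str]]) -> Dict[str, Counter]:
    """Count how often each unit is followed by each other unit."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def continue_sequence(counts: Dict[str, Counter], prompt: List[str], n_steps: int) -> List[str]:
    """Greedy autoregressive continuation: each predicted unit becomes the context for the next."""
    out = list(prompt)
    for _ in range(n_steps):
        followers = counts.get(out[-1])
        if not followers:  # unseen context: nothing to predict
            break
        out.append(followers.most_common(1)[0][0])
    return out


model = fit_bigram([["A", "B", "C"], ["A", "B", "D"], ["B", "C", "A"]])
print(continue_sequence(model, ["A"], 4))  # ['A', 'B', 'C', 'A', 'B']
```

Larger models replace the bigram table with a network conditioned on a long context, and usually sample from the predicted distribution rather than always taking the most frequent unit.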

I think they plan to test it in the field this summer and see if it will produce any interesting interaction.

Looking forward to seeing what they find :)

Besides, two more cool organizations working in the field of language understanding of animals using AI:

28 July 2025 3:51pm

This is a really fascinating concept. I’ve been thinking about similar overlaps between AI and animal communication, especially for conservation applications. Definitely interested in seeing where this kind of work goes.

30 July 2025 9:46am

This is such a compelling direction, especially the idea of linking unsupervised vocalisation clustering to generative models for controlled playback. I haven’t seen much done with SpecGAN or AudioLDM in this space yet, but the potential is huge. Definitely curious how the field might adopt this for species beyond whales. Following this thread closely!

Postdoctoral Position — Machine Learning and Downscaling to Advance Ecological Applications

28 July 2025 5:08pm

Issue with SongBeam recorder

17 July 2025 9:40pm

24 July 2025 9:19pm

Hi Josept! Thank you for sharing your experience! These types of feedback are important for the community to know about when choosing what tech to use for their work. Would you be interested in sharing a review of the SongBeam and the AudioMoth on The Inventory, our wiki-style database of conservation tech tools, R&D projects, and organizations? You can learn more here about how to leave reviews!

Analyzing Bird Song

16 July 2025 5:20pm

18 July 2025 9:44am

Hi John,

You can try uploading it to BirdNET or using the BirdNET GUI.

I believe BirdNET's native classifier is already good enough for the majority of North American birds.

24 July 2025 5:08pm

Another option you can try is HawkEars, which is a classifier made particularly for Canadian birds. Unlike BirdNET, it doesn't have a graphical interface, though.

MS and PhD Opportunities in Ocean Engineering and Oceanography (Fall 2026)

24 July 2025 5:55am

The Variety Hour: July 2025

21 July 2025 3:33pm

Help Shape India’s First Centralized Bioacoustics Database – 5 Min Survey

13 May 2025 3:01pm

17 July 2025 8:07am

PROJECT UPDATE:

We are curating an open-access, annotated acoustic dataset for India! 🇮🇳🌿

This project has two main goals:

🎧 To develop a freely available dataset of annotated acoustic data from across India (for non-commercial use)

📝 To publish a peer-reviewed paper with all community contributors that ensures transparency, credibility, and future usability

Join us:

1️⃣ Read the FAQs for more details: 🔗 https://drive.google.com/file/u/1/d/1dYRZ579upiU6s-qMrlT1T3iNxbHfNFMi/view?usp=sharing

2️⃣ Fill out the Data Agreement Form to indicate your participation in the project: 🔗 https://docs.google.com/forms/d/e/1FAIpQLSezMdysMokgfP-6UKwnetRpCafTJpj55uvKnDe1G7KvKBbIaQ/viewform

By contributing your field recordings or annotation expertise, you'll be supporting the future creation of AI-powered tools for wildlife detection and conservation research.

This initiative is community-powered and open science-driven. Whether you're a researcher, sound recordist, conservationist, or bioacoustics enthusiast, your input will shape the future of biodiversity research in India.

Contact indiaecocacousticsnetwork@gmail.com for any questions or collaborations!

Still open for funding

17 July 2025 12:28pm

Contribute to India's largest open-access, annotated acoustic dataset

17 July 2025 8:10am

Audiomoth and Natterjack Monitoring (UK)

23 December 2020 8:16pm

18 June 2025 11:41am

Just out of curiosity (and apologies for resurrecting such an ancient thread) - did you ever get this off the ground? I just saw an article that mentioned how noisy natterjack toads are so I thought I'd google whether anyone's using bioacoustics to monitor them, and this popped up!

27 June 2025 3:53pm

Issy (hi again!) - I'm currently trying to finish off a paper based on three years of natterjack surveys at WWT Caerlaverock, and this year I'm involved in a new project with ARC, with deployments at Saltfleetby, Talacre (I just got back from there a few minutes ago), and Woolmer Forest. So yes, definitely off the ground with this species. Ta, Carlos

11 July 2025 12:19pm

Just to provide an update to my post from 2021. Colleagues and I at the BTO have now built a species classifier for the BTO Acoustic Pipeline for the sound identification of all UK native and non-native frog and toad species, including the Natterjack Toad.

As with all our audible / bird classifiers, this will be free to use where the results / data can be shared.

We are currently working with researchers at ARC, and a few other individuals to test and refine the identification for particular species before we make this publicly available.

I would be interested to hear from anyone with sound recordings of Natterjack Toad, who would be happy to try out a beta version of the classifier.

Acoustic recording tags for marine mammals (soundscape research)

5 June 2025 6:04pm

30 June 2025 3:36pm

Hi There!

For biologgers, I think there are a few on the market that have been attached to marine mammals. I've provided a few links to ones that I know have been used for understanding the behavioural responses to sound - Dtags made by SMRU and Acousonde tags.

You could also have a look through The Inventory here on WildLabs for other devices on the market.

Hope that helps!

Courtney

3 July 2025 9:08am

Hey Maggie,

@TomKnowles and his team, 2025 awardees, are working on acoustic tags to be deployed on basking sharks!

3 July 2025 7:22pm

Thanks @Adrien_Pajot, we are!

Also buoys!

Insect bioacoustics for conservation

3 July 2025 4:08pm

I WANT TO TELL YOUR STORY

29 June 2025 10:22am

New Group Proposal: Systems Builders & PACIM Designers

18 June 2025 2:52pm

19 June 2025 9:08am

Hi Chad,

Thanks for the text. As I read it, PACIMs play a role in something else or bigger, but it doesn't explain what PACIMs are or what they look like. Now that I've re-read your original post, I think maybe I do understand, but then I feel the concept is too big (an entire system can be part of a PACIM?) to get going within a WildLabs group. And you want to develop 10 PACIMs within a year through this group? Don't get me wrong, I am all for some systems change, but perhaps you're aiming too high.

19 June 2025 12:19pm

Hello again, sir. The way I see it, PACIMs really mean 'projects'. Each part of the acronym can be seen as a project (if you have an assignment to do, you really have a project).

As for your query about 10 projects in 'this' group, I should ask whether you mean acoustics in particular or any group (I now see this is the acoustics thread, after I selected all the groups for this post). If you mean acoustics, you're right: I am unsure about 10, as I am not too keen on acoustics yet. If you mean 10 projects as a whole, for example 10 projects in the Funding and Finance group, I believe 10 to be a very reasonable number. The projects we have co-created are, for the most part, replicable, rapidly deployable, quickly scalable, fundable through blended finance, and more.

Thank you again for the feedback.

19 June 2025 1:43pm

Thank you for your reply, Chad

I meant 10 as a whole, indeed. Perhaps you see your post in one group, but since it is tagged for all groups, I assumed you meant 10 in total.

In your first post you explain PACIM stands for "Projects, Assignments, Campaigns, Initiatives, Movements, and Systems", so I understood it as more than just projects. Obviously, many things can be packed into a project or called a project, but then, what does it mean that 'Projects' is part of the list?

Well, if you think 10 projects is doable, then don't let me stop you.

WILDLABS AWARDS 2025 - Pioneering Acoustic Tags for Mysterious Hebridean Giants (Basking Sharks)

16 June 2025 1:12pm

Acoustic monitoring for tropical ecology and conservation

15 June 2025 10:33am

Feedback needed for new Tech Concepts

3 June 2025 12:43pm

11 June 2025 10:44pm

Gina- Sounds like an interesting thesis topic! I work with bioacoustics in offshore waters and I'd be happy to have a chat and provide feedback-- feel free to message me via Wildlabs.

The Locally-led East Asian Flyway Acoustics (LEAFA) Migratory Program; Harnessing emerging technologies to understand the impacts of a changing planet on ecosystem function of migratory birds in the East-Asian Australasian Flyway

11 June 2025 5:40am

Improving the performance of the PippyG static bat detector with a remote microphone

4 October 2024 11:19am

7 October 2024 11:19am

All inputs gratefully received and acknowledged! There will be experimentation with ferrite and resistor combinations when I get my components in.

The circuit on the shipping PCBs has a 220 ohm resistor in the supply to the mic and op amp, which together with the capacitors acts as a low-pass filter to suppress high-frequency supply noise. The current drawn by the op amp and mic together is low enough that the 3.3 V input drops only to around 3.2 V beyond the filter, which is fine for both the op amp and the microphone. The combination of 44 uF and 220 ohms should put the 3 dB point at about 17 Hz, so the filtering effect even at 17 kHz, 10 octaves above that, is about 60 dB. The supply to the analog circuit should therefore be very stable and quiet at the frequencies we care about, 20 kHz and beyond, which is why the clicking noises with high-frequency components were so annoying: they are clearly acoustic, not electrical, in origin. After all the comments on here, it's pretty clear that, even though SD cards do seem to generate ultrasound, the ceramic capacitors I've been using are the bigger culprit, so I need to address that and report back with an update to this post.
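The numbers in this post can be checked with the standard first-order RC formulas: cutoff f_c = 1/(2πRC) and attenuation 20·log10(sqrt(1 + (f/f_c)^2)) dB, which approaches 6 dB per octave well above f_c. A minimal sketch using the post's 220 ohm and 44 uF values, assuming an ideal filter (real capacitors' ESR and inductance will reduce the high-frequency attenuation) and that the whole 0.1 V drop is across the resistor:

```python
import math

R_OHMS = 220.0             # series resistor from the post
C_FARADS = 44e-6           # total decoupling capacitance from the post
SUPPLY_DROP_V = 3.3 - 3.2  # drop across the resistor reported in the post

cutoff_hz = 1 / (2 * math.pi * R_OHMS * C_FARADS)


def attenuation_db(f_hz: float, fc_hz: float) -> float:
    """Attenuation of an ideal first-order RC low-pass filter at f_hz."""
    return 20 * math.log10(math.sqrt(1 + (f_hz / fc_hz) ** 2))


octaves = math.log2(17_000 / cutoff_hz)
load_current_ma = SUPPLY_DROP_V / R_OHMS * 1000  # Ohm's law: I = V / R

# prints: cutoff ~16.4 Hz, 10.0 octaves to 17 kHz, attenuation ~60 dB, load ~0.45 mA
print(f"cutoff ~{cutoff_hz:.1f} Hz, {octaves:.1f} octaves to 17 kHz, "
      f"attenuation ~{attenuation_db(17_000, cutoff_hz):.0f} dB, load ~{load_current_ma:.2f} mA")
```

This confirms the supply path itself is well filtered at ultrasonic frequencies, which is consistent with the conclusion that the remaining clicks came from the capacitors acting as acoustic sources rather than from electrical supply noise.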

This has all been, and continues to be, EXTREMELY interesting and educational, and will ultimately lead to big improvements in what is already a great, affordable recorder.

10 October 2024 3:35pm

Here is the final, dramatic word. I built a pipistrelle (noisier than a pippyg so a good test) and used non-ceramic capacitors and played with ferrites and 1R resistors.

Attached are new vs old sonograms, and it's a remarkable improvement. The visual difference is stark, and there is a measured 5 dB improvement in the "silence" between Noctule calls. Thanks to everyone who commented on this: the problem was entirely about ceramic capacitors. I have no doubt SD cards do generate ultrasound, but in this case it was being dwarfed by the ultrasound generated by the capacitors.

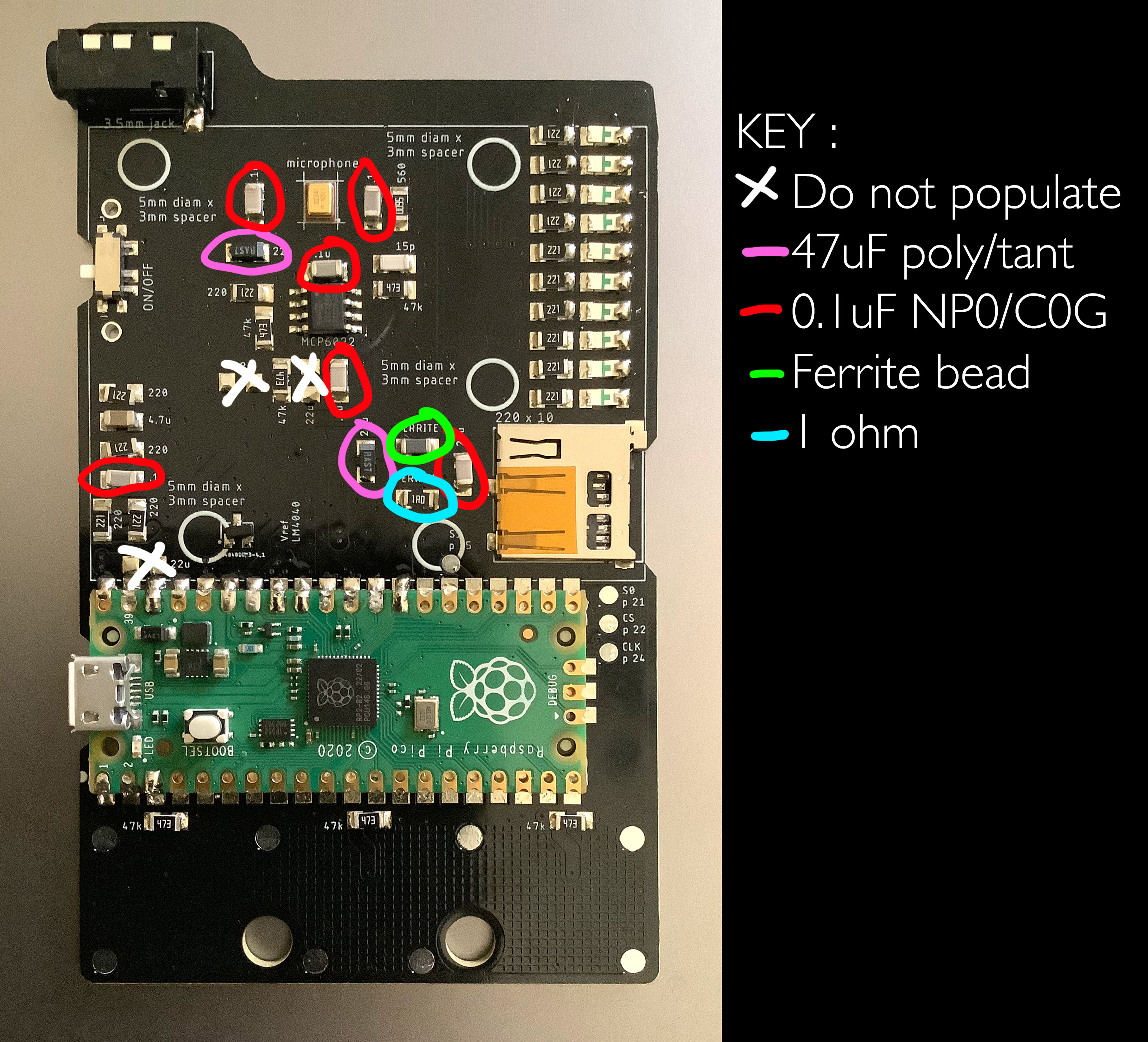

Circuit changes shown below - 3x 22uF ceramics removed, 2x 22uF ceramics replaced by 47uF poly/tant, 5x 100nF ceramics replaced by 100nF NP0/C0Gs, 1 ferrite swapped for a 1R - this one may be pointless!

10 June 2025 2:15pm

@PhilAtkin Really excited to see the PippyG available to the public. Can't wait to get my hands on these little babies soon.

Apodemus PippyG | NHBS Wildlife Survey & Monitoring


Feedback New Tech - tracking aquatic biodiversity in offshore windfarms

7 June 2025 2:47pm

Any experience using camera traps for wading bird presence?

5 June 2025 8:04pm

23 April 2025 4:19pm

Fantastic! Can't wait to hear updates.