Hi everyone,

I am Dana, a PhD student. I’m planning to use AudioMoth Dev recorders for a passive acoustic monitoring project that involves localizing sound sources using multilateration. I’d love to hear from anyone who has experience with these recorders!

I’m particularly interested in any operational challenges you’ve encountered, such as deployment, durability, or power management. How is the recording quality in different environments? Have you noticed any issues with noise levels? Another key concern is time synchronization—how accurate is it over extended periods and across multiple devices?

If you’ve worked with AudioMoth Dev devices, I’d really appreciate any insights or recommendations. Looking forward to your thoughts!

Thanks in advance!

4 April 2025 6:50am

You probably already know the application note on GPS-synchronised recordings: https://github.com/OpenAcousticDevices/Application-Notes/blob/master/Using_AudioMoth_GPS_Sync_to_Make_Synchronised_Recordings/Using_AudioMoth_GPS_Sync_to_Make_Synchronised_Recordings.pdf . As long as your field setup is similar to the experimental setup described there, good results should be possible. In particular, because GPS fixes before and after each recording are used to evaluate clock offset and drift, it makes sense to keep individual recording periods as short as possible. Short here means short enough that environmental conditions (e.g. temperature) do not change very much. Obviously, if the sound source is moving or the geometry is very different, your localization uncertainty will be worse than described in the document, where the precision is improved by averaging over 60 measurements. For moving targets you may have to use something like a Kalman filter.
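To illustrate the offset-and-drift idea above, here is a minimal sketch that maps a device-clock time to GPS time by linear interpolation between a fix taken before the recording and one taken after it. It assumes the clock drifts at a constant rate between the two fixes, which is exactly the assumption that breaks down when temperature changes during a long recording. The function name `corrected_time` and all numbers are hypothetical and are not taken from the application note.

```python
def corrected_time(t_device, fix_start, fix_end):
    """Map a device-clock time to GPS time, assuming constant drift between two fixes.

    Each fix is a (device_time, gps_time) pair in seconds.
    """
    (d0, g0), (d1, g1) = fix_start, fix_end
    rate = (g1 - g0) / (d1 - d0)  # GPS seconds elapsed per device second
    return g0 + (t_device - d0) * rate


# Hypothetical fixes: the device clock is 2 ms behind GPS at the start
# and 8 ms behind at the end of a 600 s recording.
start = (0.0, 0.002)
end = (600.0, 600.008)

# GPS time of an event that the device clock stamped at 300 s
mid = corrected_time(300.0, start, end)
```

For scale: at a speed of sound of roughly 343 m/s, a timing error of 1 ms corresponds to about 0.34 m of path length, so residual drift of a few milliseconds matters for localization.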

4 April 2025 9:05am

Hey Dana, the AudioMoth Dev units are really powerful, and luckily there is quite a lot of information out there. I offer other solutions depending on the application. When you say localising sound, what do you mean? I ask because there is no time syncing between devices. GPS *might* give you a good time sync, but maybe not good enough for this application.

If you need a custom solution, or want to chat more about technical possibilities, send me a message.

Ryan

23 April 2025 10:33pm

Hey Dana!

I and others I work with have experience using AudioMoth GPS units for acoustic localization! I’m a PhD student in Justin Kitzes’ lab at the University of Pittsburgh, and we've deployed large-scale arrays of AudioMoth GPS recorders for localizing singing birds.

We developed software for automating the time-delay estimation and localization process; it's all in our open-source Python package (www.opensoundscape.org). We’ve tested it in the field on loudspeakers at known locations and with real birds, and it seems to work well. If you use an automated detection method (like BirdNET), the whole pipeline is completely automated. There'll be a paper describing the workflow soon, but as you asked about potential issues, I wrote down some of the pain points we've noticed, organized by the steps in the workflow.

- Designing the localization grid.

For forest birds, we’ve used square grids with recorders spaced 50 m apart, plus an extra recorder in the middle of each grid square. You want to make sure that more than 4 recorders are going to be within detection distance of every point within your grid.
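One way to check a grid design like this before deployment is to count, for every point in the grid, how many recorders lie within detection distance. The sketch below does that with NumPy for a square grid with a centre recorder in each cell. The helper names, the 3-by-3-cell grid, and the 60 m detection radius are illustrative assumptions only; the real detection distance depends on the species, habitat, and noise conditions.

```python
import numpy as np


def recorder_grid(n_cells, spacing=50.0):
    """Recorders at the corners of each square cell plus one at each cell centre."""
    ticks = np.arange(n_cells + 1) * spacing
    corners = np.array([(x, y) for x in ticks for y in ticks])
    mids = ticks[:-1] + spacing / 2
    centres = np.array([(x, y) for x in mids for y in mids])
    return np.vstack([corners, centres])


def min_recorders_in_range(recorders, detection_radius, step=5.0):
    """Smallest number of recorders within range of any sampled point in the grid."""
    lo, hi = recorders.min(axis=0), recorders.max(axis=0)
    xs = np.arange(lo[0], hi[0] + step, step)
    ys = np.arange(lo[1], hi[1] + step, step)
    points = np.array([(x, y) for x in xs for y in ys])
    # Pairwise distances: one row per sampled point, one column per recorder
    dists = np.linalg.norm(points[:, None, :] - recorders[None, :, :], axis=2)
    return int((dists <= detection_radius).sum(axis=1).min())


recorders = recorder_grid(n_cells=3)  # hypothetical 150 m x 150 m grid
worst_case = min_recorders_in_range(recorders, detection_radius=60.0)  # assumed radius
```

Comparing `worst_case` against the number of recorders you need, and re-running it with one or two recorders removed from `recorders`, gives a rough feel for how much device failure a design can tolerate.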

Device failure (from water damage, SD card damage, animals nibbling, etc.) is a potential issue. If you’re trying to make ecological inferences based on different rates of vocalization within parts of the grid, you want to make sure that a device failure isn’t going to make it impossible to localize a sound! There’s only so much you can do to avoid this, but it’s worth thinking about when considering the trade-off between area covered and the greater reliability of densely spaced recorders.

- Deploying the recorders.

We attach our AudioMoths to small branches, and use an RTK GPS to precisely measure their positions during deployment. You need precise recorder positions for accurate localization. We use a system that has a base station and a hand-held rover. We've had problems getting GPS fixes in dense tropical forests.

Note: though the recorders have GPS receivers, it isn’t clear that this data can be used to get accurate recorder positions. We just use the GPS timestamps for audio synchronization across multiple recorders.

- Power management

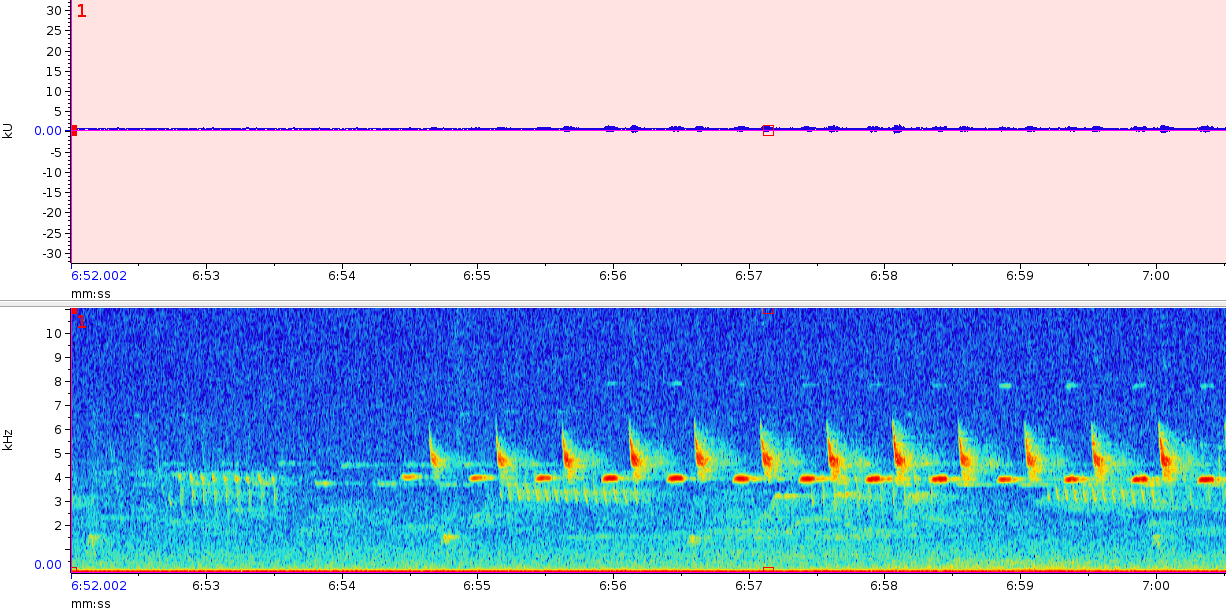

So far we’ve used firmware that records every GPS ping received. This allows you to resample the audio between every signal received (PPS signals are sent every second) to match the desired sampling rate. The new AudioMoth Basic 1.11 firmware, however, has an option to record just the GPS signals at the start and end of the recording. This should make the battery last longer, and if the true sampling rate of the device doesn’t change much during the recording, resampling between these two GPS signals will give accurate time synchronization across devices. However, the longer the recording is, and the more a device’s true sampling rate changes during the recording period (e.g. if one AudioMoth is in the sun and heats up, changing its true sampling rate slightly), the less accurate time synchronization across multiple devices will be. We haven’t yet tested whether this makes a difference (it's on my to-do list!), but I thought it was worth mentioning, as in previous experiments I've seen that the true sampling rate of a recorder can drift substantially over an hour-long recording.

- Time-delay estimation

Unless you are having a human annotate the audio to estimate time delays between recorders, you’ll use generalized cross-correlation to estimate them. This will be more error-prone in noisier environments. If there's a loud noise (like a branch falling), cross-correlation might estimate the delay of that sound in the two audio recordings rather than that of the vocalization of interest. In our software we bandpass to species-specific frequency ranges and use the phase transform (GCC-PHAT), which should be robust to reverberation. Time-delay estimation will also likely be harder if a vocalization is highly stereotyped and periodic. In my mind the nightmare scenario is a chorus of vocalizing frogs, where it would be very hard or impossible even for a human annotator to match the same vocalization across multiple recorders in order to estimate time delays.

- Error rejection.

Especially in a more automated approach, there are lots of places errors can slip in (mistaken identity from the automated detector, incorrect time-delay estimation, a dodgy localization from whatever method you're using to turn a set of time-delays into a co-ordinate position). There is a ton to discuss here; we outline some of what we do in our software tutorial.
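To make the time-delay estimation and error-rejection steps above more concrete, here is a minimal NumPy sketch of GCC-PHAT followed by one simple plausibility check. This is a generic textbook-style illustration, not the opensoundscape implementation: the function names, the tolerance, and the test signal are all assumptions, and the species-specific bandpass step is omitted. The check relies only on the physical fact that the delay between two recorders cannot exceed the acoustic travel time between them.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at about 20 °C


def gcc_phat(sig, ref, sample_rate):
    """Estimate the delay of `sig` relative to `ref` (seconds) and the peak height."""
    n = len(sig) + len(ref)  # zero-pad so the circular correlation does not wrap
    cross = np.fft.rfft(sig, n=n) * np.conj(np.fft.rfft(ref, n=n))
    cross /= np.abs(cross) + 1e-12  # phase transform: keep phase, discard magnitude
    cc = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    # Reorder so that index 0 corresponds to lag -max_shift
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    lag = int(np.argmax(cc)) - max_shift
    return lag / sample_rate, float(cc.max())


def is_plausible(delay_s, recorder_distance_m, tolerance_s=0.001):
    """Reject delays longer than the travel time between the two recorders."""
    return abs(delay_s) <= recorder_distance_m / SPEED_OF_SOUND + tolerance_s


# Synthetic example: the same noise burst arrives 24 samples later at one recorder
sample_rate = 48000
rng = np.random.default_rng(0)
ref = rng.standard_normal(4800)
delay_samples = 24
sig = np.concatenate((np.zeros(delay_samples), ref[:-delay_samples]))

delay, peak = gcc_phat(sig, ref, sample_rate)
keep = is_plausible(delay, recorder_distance_m=50.0)
```

A positive delay here means the sound reached `sig`'s recorder later than `ref`'s. In practice a low `peak` value could also serve as a rejection criterion, but any threshold would need to be tuned on real recordings.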

Feel free to get in touch if you'd like to chat through anything! Likewise, if you give opensoundscape a go and find it confusing, please feel free to raise a GitHub issue or get in touch! https://github.com/kitzeslab/opensoundscape

Louis Freeland-Haynes

24 April 2025 1:55pm

Frontier Labs' BARs have built-in localization functionality (with accompanying software), just as another option. Michael Maggs is a great person to talk to about localization!

Walter Zimmer