State of the art thermal imaging core and the zoo

28 October 2024 6:12pm

13 January 2025 9:15am

I love those numbers 😀 indeed a holy grail. I’ll send you a private mail.

13 January 2025 9:23am

@HeinrichS there’s still time for you or anyone else to make a funding submission to the wildlabs 2025 grants ❤️❤️❤️

I haven't applied for wildlabs funding, but I would love for others who want to use my systems to apply. My preference goes to those who want to use the most units :-)

Rat story

13 January 2025 9:19am

Fellowship Opportunity: Planet Labs and Taylor Geospatial Institute 🚀

11 January 2025 5:37pm

App for WILDLABS?

6 January 2025 8:56am

10 January 2025 1:45pm

Hi Hugo! A WILDLABS App has certainly been on our wish-list of things we could build, depending on future funding availability, of course.

At the moment we are working on a number of big improvements and additions to the website, focussing on enhancing access to learning materials and existing content, such as a new self-led training course system and navigation/discoverability improvements.

WILDLABS Awards 2024 - Statistics

15 January 2024 5:24pm

26 February 2024 5:25pm

Thanks @alexrood for creating this visual!

4 September 2024 2:48pm

10 January 2025 10:15am

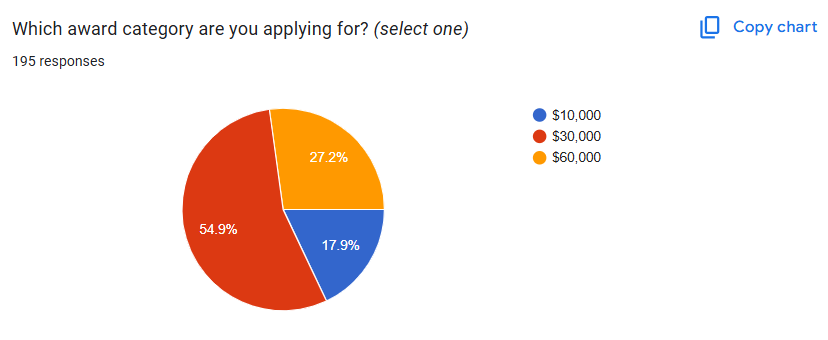

After discussing with a potential applicant for 2025, I realised that I never shared the stats about the number of applications per category. Here they are:

- 35 projects for 7 grants of $10,000

- 107 projects for 5 grants of $30,000 (we selected 3)

- 53 projects for 2 grants of $60,000 (we selected 3)

Webinar: Drone-based VHF tracking for Wildlife Research and Management

9 January 2025 11:45pm

Canopy access or tree climbing resources for arboreal research

12 September 2024 8:51pm

4 October 2024 10:04pm

Hi all! Folks may be interested in the Cornell Tree Climbing program that is a part of Cornell Outdoor Education. Not only does Cornell offer training, and have a bunch of online resources, but they have also facilitated groups of scientists to collect canopy samples and data.

Cornell Tree Climbing | Student & Campus Life | Cornell University

CTC promotes safe and environmentally responsible tree climbing techniques for

9 January 2025 10:50pm

Hi Dominique,

Thanks for your responses and congratulations on getting trained!

I can see that speaking directly with a climbing professional could be the most beneficial because what climbing methods and equipment you may need will depend very much on individual project goals and budgets. Did you end up speaking with your trainers about your field research goals and what climbing methods may be best for you?

9 January 2025 11:27pm

Hi Mark, thanks for responding. I think you've identified one of the most difficult parts of research climbing: maintaining your climbing skills and knowledge between field sessions.

My husband is an experienced arborist and practices his skills almost daily. I am not an arborist, so I schedule climbing time to keep my abilities fresh and my husband is there to assist. But I know it's difficult for individual researchers to practice on their own and they should only be climbing alone if experienced and not in a remote area.

However, it's possible to train researchers to safely climb in the field for multiple field sessions. My husband and I trained a group of climbers in Cameroon in January, 2024. The goal was to train four climbers who would go into the remote rainforest for several weeks and set up camera traps. They would deploy and retrieve arboreal cameras at different survey locations over two years. We needed to train the teams to operate safely and independently (without an instructor present) in very remote areas.

To train them sufficiently, my husband and I spent 1 month in Cameroon with the field team. We did a few days of basic training at a location near the city and then went with them on their initial camera deployment where we continued field training for 2.5 - 3 weeks. Before going to Cameroon, we had several discussions to determine what climbing method and equipment would best meet their data collection goals and were appropriate for their field site and conditions. We taught them basic rescue scenarios. We also set a climbing practice schedule for the team to maintain their climbing and rescue skills. We strongly emphasized to their management that the field team needed access to the climbing gear and needed to follow the practice schedule. Since the training, the team successfully finished two other camera trap surveys and is planning their third.

This was a lot of up-front training and cost. However, these climbers are now operating on their own and can continue operating for the foreseeable future. I think a big reason is receiving extensive training, tailored to their needs. General tree-climbing courses are great for learning the basics, but they'll never be a substitute for in-field, tailored training.

Which LLMs are most valuable for coding/debugging?

25 September 2024 5:48pm

4 October 2024 7:53pm

Thanks, Lampros!

29 October 2024 11:10am

When it comes to coding and debugging, several large language models (LLMs) stand out for their value. Here are a few of the most valuable LLMs for these tasks:

1. OpenAI's Codex: This model is specifically trained for programming tasks, making it excellent for generating code snippets, suggesting improvements, and even debugging existing code. It powers tools like GitHub Copilot, which developers find immensely helpful.

2. Google's PaLM: Known for its versatility, PaLM excels in understanding complex queries, making it suitable for coding-related tasks as well. Its ability to generate and refine code snippets is particularly useful for developers.

3. Meta's LLaMA: This model is designed to be adaptable and can be fine-tuned for specific coding tasks. Its open-source nature allows developers to customize it according to their needs, making it a flexible option for coding and debugging.

4. Mistral: Another emerging model that shows promise in various tasks, including programming. It’s being recognized for its capabilities in generating and understanding code.

These LLMs are gaining traction not just for their coding capabilities but also for their potential to streamline the debugging process, saving developers time and effort. If you want to dive deeper into the features and strengths of these models, you can check out the full article here: Best Open Source Large Language Models LLMs

9 January 2025 8:51pm

thanks kristy! super helpful list.

Tanzania Forest Fund opens 2025 call for proposals (TZS 5M-50M or more)

8 January 2025 2:35pm

WILDLABS Behind the Buzz: Key Policy Frameworks

Talia Speaker

and 1 more

7 January 2025 11:17pm

Behind the Buzz Season 1: From Data to Decisions

Talia Speaker

and 1 more

7 January 2025 11:15pm

Conservation International - AI Innovation Manager

7 January 2025 6:26pm

What the mice get up to at night

6 January 2025 8:06am

7 January 2025 1:09pm

And I see now they can walk vertically up walls like Spider-Man.

A question about nanopore sequencing analysis

20 August 2024 10:00am

25 November 2024 11:18am

Not an expert here, but when I used this a few years back we initially used Guppy to do real-time basecalling while the nanopore was sequencing. We found that we were better off waiting for the sequencing to finish and then reprocessing all the output using Guppy's slow and careful basecalling option (which was way too slow for effective real-time calling).

25 November 2024 2:10pm

Hi Chris Yesson,

Thank you for your response and answer. Yes, in my experience with Guppy, we need to use a more accurate model to get better results. However, when I used Dorado for basecalling, the results were better than with Guppy. I also found a journal article about Porechop_ABI, which could reduce the number of unclassified barcodes caused by adapters that are not recognised.

Here is the article: Porechop_ABI: discovering unknown adapters in Oxford Nanopore Technology sequencing reads for downstream trimming | Bioinformatics Advances | Oxford Academic

Best Regards,

6 January 2025 9:18pm

Hi Muhammed,

If you want any help with ONT please contact me

PhD Scholarship - Urban Kākā Ecology and Conservation

6 January 2025 4:24pm

PhD Scholarship - Recognising Taonga with AI: Facial Recognition for Kākā Conservation Management

6 January 2025 4:22pm

DeepForest Code Walkthrough - Airborne Machine Learning for Biodiversity Monitoring

6 January 2025 3:24pm

Recommended Hardware to stitch together Drone Imagery into Orthomosaics

25 November 2024 6:25am

21 December 2024 5:21am

This site may be of use for WebODM and photogrammetry using UAVs, as it focuses purely on processing that type of data and the hardware required. It also covers Conservation and Agriculture analysis and training using open source GIS and 3D point cloud software. Follow the links below:

https://www.geowingacademy.com

Hope this helps.

3 January 2025 3:41am

Hi @willrippon, I've worked with 3D reconstruction systems (software and hardware) for over 10 years now, and lately I've been really pleasantly surprised by Pix4D.

Here is a demo for a landscape survey my company just did.

Here is a 300 MB orthomosaic we just got as well.

Here is the hardware workflow described in detail.

On top of the drone imagery, we did "post-processed kinematic" (PPK) correction of the drone images (a very important thing to do, otherwise photogrammetry quality really suffers). I'm happy to discuss workflow further, and plan to release open source code and services.

If nothing else, go with Pix4D.

6 January 2025 7:40am

Thank you David, a phenomenal source!

Ai for soil nutrient analysis

24 December 2024 6:42pm

30 December 2024 3:46pm

We'd love to see this added to The Inventory, our wiki-style database of conservation tech tools, R&D projects, and organizations! Check out the user guide to learn more about how to add a page for your app. Give a shout with any questions!

5 January 2025 1:10am

Thank you for the comments. The app is not yet available on the App Store or Google Play. It runs on Android systems and does not need a high level of skill to operate; however, users would need to be taught how to use the app and interpret the data.

5 January 2025 1:17am

Joint ecoacoustic & camera-trapping project in Indonesia!

1 August 2024 5:29pm

9 December 2024 3:41am

Awesome Carly, thanks. Yes helps a lot. Those all sound like big improvements over the hardware we're currently working with.

13 December 2024 7:42pm

Hi Carly,

That would be great! Send me a message and we'll put something together after the holidays.

4 January 2025 5:27pm

Hello Carly,

Congratulations on this project!

I am currently studying for a second MA in Environment Management. I would like to do my MA thesis project on these technologies (bioacoustics and camera traps). I wonder if you would be interested in collaborating with me on that?

I already have professional experience, so I think my contribution could be of interest to you.

Thank you in advance for answering,

Simon

Use of acoustics to combat wildlife crime // Uso de acústica para combatir delitos contra la vida silvestre

14 October 2024 3:04pm

29 November 2024 1:09pm

Yes, thank you!

27 December 2024 2:00pm

Hola Vanessa,

Aside from what's already been commented, did you find any interesting new leads on this? I'm also interested in ways ecoacoustic tech can be used against wildlife crime, both in research and development and, even more so, in its short- or long-term applications and how it can be truly adopted in source countries.

It seems to me research or work in this is still limited but there's potential! I'd love to stay in touch about it :)

31 December 2024 8:02am

Hey Xiona,

I see that you are from a university that is very close to where I live. I have a platform that is well suited for combating wildlife crime, and I think it’s a rather small step from where I am to what you are all asking for. I suspect the piece I’m missing could be generated pretty easily with AI.

Would you be interested in having a chat about this ? Perhaps we can embark on a project ?

Kim from near Sittard,

The Netherlands

Camera trap triggered by loud noise coming from inside a nest box

28 December 2024 6:31pm

29 December 2024 6:18pm

Well, I have an audio recording project (https://github.com/hcfman/sbts-aru) that can record with a highly accurate clock if you want to do sound localization, and you can use it to record sounds and run an audio processing pipeline at the same time. I also have an AI object detector and video alerting project that handles I/O (inputs and outputs) as well, and it has a flexible state machine built in. It doesn't have to trigger on video; it's trivial to make it trigger from anything else, such as the audio reaching a certain level, or even an audio pipeline recognising a certain sound. It's just a REST HTTP call to the state machine, which can then capture video frames, with pre-capture if needed as well.

It draws a lot of power, though, and I haven't tested the power usage when running without an object detector. The lowest-power Pi it can run on is a Raspberry Pi 4.

I intend to combine these two projects at some point to make something that can trigger on both object detection and audio detection. When I get time... which is the only barrier. But it's not my highest priority right now.

It would definitely need solar panels to run for a long time. But in principle it would likely not be very hard to tweak it to trigger on sound. If this becomes a real must-have bit of functionality, you could reach out to me directly. But it might be a couple of months before I could put any time into something like this.

I know that various people are interested in things like detecting chainsaw sounds. This could do that sort of thing with the right pipeline, but I haven't even started to play with audio analysis pipelines yet.
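For anyone wondering what "trigger when the audio reaches a certain level" could look like, here is a minimal sketch. It is not code from either project mentioned above: the function names, the 16-bit PCM assumption, the threshold and the hold-off behaviour are all hypothetical. The `on_trigger` callback is where a REST call to a state machine could go.

```python
import math
from typing import Callable, Iterable, Sequence

FULL_SCALE = 32768.0  # magnitude of the largest 16-bit PCM sample (assumed format)


def rms_dbfs(samples: Sequence[int]) -> float:
    """Return the RMS level of 16-bit PCM samples in dB relative to full scale."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")
    return 20.0 * math.log10(math.sqrt(mean_square) / FULL_SCALE)


def run_trigger(
    chunks: Iterable[Sequence[int]],
    threshold_db: float,
    on_trigger: Callable[[float], None],
    holdoff_chunks: int = 0,
) -> int:
    """Call on_trigger(level) for each chunk at or above threshold_db.

    After a trigger, the next holdoff_chunks chunks are skipped so that one
    loud event does not fire repeatedly. Returns the number of triggers.
    """
    fired = 0
    skip = 0
    for chunk in chunks:
        if skip > 0:
            skip -= 1
            continue
        level = rms_dbfs(chunk)
        if level >= threshold_db:
            on_trigger(level)  # hypothetical hook, e.g. an HTTP request
            fired += 1
            skip = holdoff_chunks
    return fired
```

In practice the chunks would come from a microphone stream, and the threshold would need tuning on recordings from the actual nest box.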

30 December 2024 3:42pm

That's great, we are in no hurry since this would be for next year's breeding season. We would also be interested in the AI that you mention if it's able to detect large birds (we work with the southern ground hornbill). That way the camera would trigger whenever the parents enter the nest box. I asked around a little and solar panels shouldn't be an issue. But to go into more detail (exactly what will be needed, which cameras can be paired with the program, anything else relevant, etc.), maybe we could continue the conversation by email: quimagell@hotmail.com

I'm trying to explain everything to my bosses, and it would be great to know exactly what will be needed besides solar panels and the camera (since this kind of technology is really new, we might need some clear instructions and guidance; my knowledge extends to CT and solar panels to power surveillance cameras at nests, but that would be it...). Thanks so much in advance!

30 December 2024 6:28pm

Sounds good, will do.

New PhD - Drones, seaweeds & climate change

30 December 2024 11:33am

Pytorch Wildlife v5 detection in thermal

28 December 2024 10:35am

29 December 2024 8:08pm

I added plain old motion detection because MegaDetector v5 was not working well with the smaller rat images and in thermal.

This works really well:

Also, I can see really easily where the rat comes into the shed, see this series:

Just visible

Clearly visible.

So now I have a way to build up a thermal dataset for training :-)
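For readers who haven't used it, "plain old motion detection" usually means frame differencing. The sketch below is a minimal illustration of that general idea, not the code used in this post. The frame representation (rows of integer intensities), the function name and both thresholds are assumptions.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # rows of grayscale or thermal intensity values (assumed)


def motion_bbox(
    prev: Frame,
    curr: Frame,
    diff_threshold: int = 25,
    min_pixels: int = 4,
) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) around changed pixels.

    A pixel counts as changed when its absolute difference between the two
    frames exceeds diff_threshold. Returns None when fewer than min_pixels
    changed, which filters out isolated sensor noise.
    """
    changed = [
        (x, y)
        for y, (row_prev, row_curr) in enumerate(zip(prev, curr))
        for x, (a, b) in enumerate(zip(row_prev, row_curr))
        if abs(a - b) > diff_threshold
    ]
    if len(changed) < min_pixels:
        return None
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return min(xs), min(ys), max(xs), max(ys)
```

Cropping each frame to the returned box is one way to collect small candidate images for labelling, which is handy when the animal only covers a few pixels.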

My Journey with the Women in Conservation Technology Program

27 December 2024 1:50pm

UAV flight planning software recommendations?

16 December 2024 7:30am

20 December 2024 1:37am

I believe Map Pilot Pro allows for mapping with the DJI Mini; another app I've heard people use is called map-creator. I think the latter is a bit harder to get up and running. A third option is to take pictures as best you can in a double grid pattern and then use a stitching program to process the results. Pix4D and Agisoft are commercial options, while OpenDroneMap is open source and has a lot of functionality. I ran into a similar problem when trying to use a DJI Mavic Air, as they hadn't released an SDK.
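To make the double grid idea concrete, here is a rough sketch that lists the turning points for two perpendicular back-and-forth passes over a rectangle. Everything in it is an assumption for illustration: the local metre coordinates, the function names and the line spacing, which in a real survey would follow from altitude and the image overlap you want.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres from one corner of the survey area


def lawnmower(width_m: float, height_m: float, spacing_m: float) -> List[Point]:
    """Back-and-forth waypoints over a rectangle, with lines parallel to the x axis."""
    waypoints: List[Point] = []
    y = 0.0
    left_to_right = True
    while y <= height_m + 1e-9:  # small tolerance for floating point steps
        start_x, end_x = (0.0, width_m) if left_to_right else (width_m, 0.0)
        waypoints.append((start_x, y))
        waypoints.append((end_x, y))
        left_to_right = not left_to_right
        y += spacing_m
    return waypoints


def double_grid(width_m: float, height_m: float, spacing_m: float) -> List[Point]:
    """First pass with lines along x, then a second pass with lines along y."""
    first = lawnmower(width_m, height_m, spacing_m)
    # Build the second pass on the transposed rectangle, then swap the axes back.
    second = [(x, y) for (y, x) in lawnmower(height_m, width_m, spacing_m)]
    return first + second
```

When flying by hand, the returned points can serve as a checklist of where to turn; converting them to GPS coordinates is left out here.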

22 December 2024 8:12am

Hi Chris! Yes, I use Drone Harmony. Although not free, the Basics package is very reasonably priced and allows for quite a good variety of flight mission planning options. It also supports a variety of DJI drones, including the Mini and other Mini models.

23 December 2024 8:40pm

I've used both Dronelink and Litchi for flight planning with my DJI Mini SE, and then stitched in WebODM. Both apps support most DJI drones that have a supporting SDK. I'm not aware of any solutions that support DJI drones without an SDK, and the more recent Mini models don't have published SDKs, since it seems DJI is limiting them to their enterprise-level drones only.

Hope this helps!

Heinrich

Finishing Off the 2024 WiCT Programme: Building an East African Female Conservation Technology Community

23 December 2024 3:18pm

12 August 2025 3:45pm

Citizen Science Training Project for Youth and the Community.

23 December 2024 3:22am

Photogrammetry of Coral Reef Breakwaters

20 December 2024 1:11am

22 December 2024 8:35am

Hi Matthew

Wow, what an amazing project! It is incredible how you have managed to figure out some of the complexities of combining underwater imagery with UAV imagery. I am sure that was a fascinating learning experience.

I cannot help you with Points 1 and 3 but can suggest using Orfeo Toolbox for your segmentation process in Point 2. It integrates with QGIS and is open source. It will work with your UAV ortho maps if there is enough visible detail of the corals. You will be able to build and train your own model in order to accurately segment your reef. It takes a bit of know-how to use the machine learning algorithms, but once you get the hang of it, it becomes immensely powerful. I will be building an online course on how to build your own machine learning processing algorithms in 2025 using this brilliant package. Once segmented, you can save out the shapefiles and use them to segment and define your 3D point clouds in Agisoft.

Alternatively, look into using Picterra Geospatial AI. It is cloud based and much simpler to use, but you may need to compress your imagery or point clouds in order to upload them, given the file size you mentioned above. (Although they probably do allow for large uploads these days. I used Picterra in the past and it was brilliant!) Also, I am not sure if it works with point clouds directly, but have a look.

I hope this helps. Great project!

Solar powered Raspberry Pi 5

19 December 2024 11:40am

20 December 2024 2:37pm

Actually, you can use any BMS as long as it supports 1S, and that will be sufficient. However, I recommend connecting the batteries in parallel to increase the capacity and using just one BMS. Everything should run through the power output of the BMS.

That said, don’t rely too much on BMS units from China, as they might not perform well in the long run. I suggest getting a high-quality one or keeping a spare on hand.

20 December 2024 4:45pm

Don't they all come from China ?

The one in the picture I can find on aliexpress for 2.53 euros.

I'm not sure how you would get one that doesn't come from China.

In any case, I know what to search for now, that's very helpful. Thank you.

What is the 1S thing you mention above ?

If you have any links as to what you consider a high quality one that would be great!

21 December 2024 1:20am

Actually, you can source the product from anywhere, but I’m not very confident in the quality of products from China. That doesn’t mean products from China are bad—it might just be my bad luck. However, trust me, they can work just as well; you just need to ensure proper protection.

For 1S, it refers to the number of battery cells in series that the BMS supports. A 1S configuration for LiFePO4 means a single battery cell (nominally about 3.2V, charged to about 3.6V). Of course, you must not confuse a LiFePO4 BMS with a Li-Ion BMS, as the two types are not interchangeable and cannot be used together.

***However, you can use a power bank board designed for Li-Ion to draw power from the battery for use. But you must not charge the LiFePO4 battery directly without passing through a BMS; otherwise, your battery could be damaged.***
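As a rough illustration of why wiring cells in parallel helps: the pack voltage stays at the single-cell (1S) voltage while the capacities add up. The sketch below estimates runtime from that. All numbers are assumptions chosen for the example (3.2 V nominal per LiFePO4 cell, 6 Ah cells, a 5 W load, 80% usable capacity), and it ignores converter and BMS losses.

```python
from typing import Tuple


def pack_in_parallel(
    cell_voltage_v: float, cell_capacity_ah: float, n_cells: int
) -> Tuple[float, float]:
    """Return (pack voltage, pack capacity) for n identical cells in parallel (1S nP)."""
    # In parallel the voltage is unchanged and the capacities add.
    return cell_voltage_v, cell_capacity_ah * n_cells


def runtime_hours(
    pack_voltage_v: float,
    pack_capacity_ah: float,
    load_w: float,
    usable_fraction: float = 0.8,  # assumed share of capacity you are willing to use
) -> float:
    """Estimate hours of runtime at a constant load, ignoring conversion losses."""
    energy_wh = pack_voltage_v * pack_capacity_ah * usable_fraction
    return energy_wh / load_w


# Hypothetical example: four 6 Ah cells in parallel feeding a 5 W load.
voltage, capacity = pack_in_parallel(3.2, 6.0, 4)
hours = runtime_hours(voltage, capacity, load_w=5.0)
```

With these assumed figures the estimate comes out at about 12 hours, so overnight operation would leave little margin before the solar panel needs to take over.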

12 January 2025 9:04pm

I would also be interested - I'm looking at starting a project that needs observation of large African animals with nocturnal habits... The holy grail, with unlimited funding, would be a grid of hundreds of cameras :-)