Camera traps have been a key part of the conservation toolkit for decades. Remotely triggered video or still cameras allow researchers and managers to monitor cryptic species, survey populations, and support enforcement responses by documenting illegal activities. Increasingly, machine learning is being implemented to automate the processing of data generated by camera traps.

A recently published study showed that, despite camera traps being well-established and widely used tools in conservation, progress in their development has plateaued since the emergence of the modern model in the mid-2000s, leaving users struggling with many of the same issues they faced a decade ago. That manufacturer ratings have not improved over time, despite technological advancements, demonstrates the need for a new generation of innovative conservation camera traps. Join this group and explore existing efforts, established needs, and what next-generation camera traps might look like - including the integration of AI for data processing through initiatives like Wildlife Insights and Wild Me.

Group Highlights:

Our past Tech Tutors seasons featured multiple episodes for experienced and new camera trappers. How Do I Repair My Camera Traps? featured WILDLABS members Laure Joanny, Alistair Stewart, and Rob Appleby and covered many troubleshooting and DIY resources for common issues.

For camera trap users looking to incorporate machine learning into the data analysis process, Sara Beery's How do I get started using machine learning for my camera traps? is an incredible resource discussing the user-friendly tool MegaDetector.

And for those who are new to camera trapping, Marcella Kelly's How do I choose the right camera trap(s) based on interests, goals, and species? will help you make important decisions based on factors like species, environment, power, durability, and more.

Finally, for an in-depth conversation on camera trap hardware and software, check out the Camera Traps Virtual Meetup featuring Sara Beery, Roland Kays, and Sam Seccombe.

And while you're here, be sure to stop by the camera trap community's collaborative troubleshooting data bank, where we're compiling common problems with the goal of creating a consistent place to exchange tips and tricks!

Header photo: Stephanie O'Donnell

Spatial ecologist focused on landscape ecology and spatial modeling of biodiversity

- 0 Resources

- 0 Discussions

- 4 Groups

- @Britneecheney

- | her/she

My name is Britnee Cheney. I am a keeper and trainer for three North American River Otters at an aquarium in Utah. I have recently started a conservation program for this species in the wild and am looking for resources and mentors to help me with my camera trapping.

- 0 Resources

- 13 Discussions

- 8 Groups

- @HeinrichS

- | he/him

Conservation tech geek, custodian (some say owner) and passionate protector of African wildlife, business systems analyst.

- 0 Resources

- 29 Discussions

- 6 Groups

- @StephODonnell

- | She / Her

- 193 Resources

- 676 Discussions

- 32 Groups

- @Bibou

- | Her

Student at Bordeaux Sciences Agro

- 0 Resources

- 1 Discussions

- 2 Groups

Paul Millhouser GIS Consulting

- 0 Resources

- 5 Discussions

- 4 Groups

Associate Wildlife Biologist M.S. Student

- 2 Resources

- 19 Discussions

- 7 Groups

- 0 Resources

- 7 Discussions

- 7 Groups

- @Rob_Appleby

- | He/him

Wild Spy

Whilst I love everything about WILDLABS and the conservation tech community I am mostly here for the badges!!

- 1 Resources

- 315 Discussions

- 11 Groups

I am a conservation technology advisor with New Zealand's Department of Conservation. I have experience in developing remote monitoring tech, sensors, remote comms and data management.

- 0 Resources

- 0 Discussions

- 15 Groups

- @SimonKariuki

- | He/Him

- 0 Resources

- 0 Discussions

- 3 Groups

I work in Mexico’s Yucatan Peninsula. The focus of my NGO is to facilitate the scientific study of the natural and cultural resources of the cenotes and underground rivers of our region.

- 0 Resources

- 0 Discussions

- 3 Groups

I put together some initial experiences deploying the new SpeciesNet classifier on 37,000 images from a Namibian camera trap dataset and hope that sharing initial impressions might be helpful to others.

23 April 2025

A nice resource from GBIF that addresses the data interoperability challenge.

4 April 2025

Conservation International is proud to announce the launch of the Nature Tech for Biodiversity Sector Map, developed in partnership with the Nature Tech Collective!

1 April 2025

The FLIR ONE thermal camera is a compact and portable thermal imaging device capable of detecting heat signatures in diverse environments. This report explores its application in locating wild animals across large areas...

27 March 2025

WWF's Arctic Community Wildlife Grants program supports conservation, stewardship, and research initiatives that focus on coastal Arctic ecology, community sustainability, and priority Arctic wildlife, including polar...

7 March 2025

The Smithsonian’s National Zoo and Conservation Biology Institute (SNZCBI) is seeking an intern to assist with multiple projects related to conservation technology for wildlife monitoring. SNZCBI scientists collect data...

3 March 2025

Article

NewtCAM is an underwater camera trap. Devices are being deployed worldwide as part of the CAMPHIBIAN project, thanks to the support of our kind early users. Here is an outcome from the UK.

24 February 2025

Osa Conservation is launching our inaugural cohort of the ‘Susan Wojcicki Research Fellowship’ for 2025, worth up to $15,000 per awardee (award value dependent on project length and number of awards given each year)....

10 February 2025

Has anyone read or heard of this book?

9 February 2025

The worst thing a new conservation technology can do is become another maintenance burden on already stretched field teams. This meant Instant Detect 2.0 had to work perfectly from day 1. In this update, Sam Seccombe...

28 January 2025

This leads to an exciting blog we did recently; it also includes a spatial map showing the movement tracks of an orphaned elephant who released himself into the wild (Kafue National Park). Cartography was...

28 January 2025

The Zoological Society of London's Instant Detect 2.0 is the world's first affordable satellite connected camera trap system designed by conservationists, for conservationists. In this update, Sam Seccombe describes the...

21 January 2025

| Description | Activity | Replies | Groups | Updated |
|---|---|---|---|---|
| Hi, I'm collecting screenshots (and explanation if needed) of visualisations that you found useful. It could be charts, maps, tables... | | | Data management and processing tools, Animal Movement, Camera Traps | 3 months 1 week ago |
| I’ll reply with further commercial details direct to you. I think you are not supposed to discuss to that level in these discussions. This is managed product at this stage, not... | | | Camera Traps | 3 months 2 weeks ago |
| Hello, I am new to camera trapping and I have been researching trail cameras for a project to photograph the city. I need a trail cam... | | | Camera Traps | 3 months 2 weeks ago |
| Do the short videos happen mostly at night? If so, this is likely a problem with low batteries. The higher power required to power the IR flash can cause the camera to... | | | Camera Traps | 3 months 4 weeks ago |
| Hi, I am using these for my current project: They seem to be of good quality and support offline SD card storage. These run on 5V/1A. You should be able to run them for... | | | Camera Traps, Animal Movement | 3 months 4 weeks ago |
| Hi Simon, We (Reneco International Wildlife Consultants) have an ongoing collaboration with a local University (Abu Dhabi, UAE) for developing AI tools (camera trap/drone... | | | Acoustics, AI for Conservation, Animal Movement, Camera Traps, Citizen Science, Connectivity, Drones, Early Career, eDNA & Genomics, Marine Conservation, Protected Area Management Tools, Sensors | 4 months 1 week ago |
| @HeinrichS there’s still time for you or anyone else to make a funding submission to the wildlabs 2025 grants ❤️❤️❤️ I haven't applied for wildlabs funding, but I would love for... | | | Human-Wildlife Conflict, AI for Conservation, Camera Traps, Emerging Tech | 4 months 1 week ago |
| A video speaks a thousand words. I thought I’d share some fun observing the local wildlife in the back yard in thermal. | | | Camera Traps | 4 months 1 week ago |
| Hi Mark, thanks for responding. I think you've identified one of the most difficult parts of research climbing: maintaining your climbing skills and knowledge between field... | | | Community Base, Camera Traps, Conservation Tech Training and Education, Early Career | 4 months 1 week ago |
| And I see now they can walk vertically up walls like Spider-Man. | | | Camera Traps, AI for Conservation | 4 months 2 weeks ago |
| Hello Carly, Congratulations for this project! I am studying right now a second MA in Environment Management. I would like to do my MA thesis project about these technologies... | +6 | | Acoustics, Camera Traps | 4 months 2 weeks ago |
| I added plain old motion detection because megadetector v5 was not working well with the smaller rat images and in thermal. This works really well: Also, I can see... | | | AI for Conservation, Camera Traps | 4 months 3 weeks ago |

Postdoc on camera trapping, remote sensing, and AI for wildlife studies

15 January 2025 4:53pm

Thesis Collaboration

4 January 2025 5:15pm

14 January 2025 3:30pm

Hi Simon,

We're a biologging start-up based in Antwerp and are definitely open to collaborating if you're interested. We've got some programs going on with local zoos. Feel free to send me a DM if you'd like to know more.

15 January 2025 8:30am

Hi Simon,

We (Reneco International Wildlife Consultants) have an ongoing collaboration with a local university (Abu Dhabi, UAE) for developing AI tools (camera trap/drone image and video analyses) and biomimetic robots applied to conservation (e.g. https://www.sciencedirect.com/science/article/pii/S1574954124004813 ). We also have a genetics team working on eDNA. Field experience could be possible, in the UAE or Morocco.

Feel free to write back if you are interested and would like to know more.

State of the art thermal imaging core and the zoo

28 October 2024 6:12pm

12 January 2025 9:04pm

I would also be interested - looking at starting a project that needs observation of large African animals with nocturnal habits... Holy grail with unlimited funding would be a grid of 100s of cameras :-)

13 January 2025 9:15am

I love those numbers 😀 indeed a holy grail. I’ll send you a private mail.

13 January 2025 9:23am

@HeinrichS there’s still time for you or anyone else to make a funding submission to the WILDLABS 2025 grants ❤️❤️❤️

I haven't applied for WILDLABS funding, but I would love for others who want to use my systems to apply. My preference goes to those who want to use the most units :-)

Rat story

13 January 2025 9:19am

Canopy access or tree climbing resources for arboreal research

12 September 2024 8:51pm

4 October 2024 10:04pm

Hi all! Folks may be interested in the Cornell Tree Climbing program that is a part of Cornell Outdoor Education. Not only does Cornell offer training, and have a bunch of online resources, but they have also facilitated groups of scientists to collect canopy samples and data.

Cornell Tree Climbing | Student & Campus Life | Cornell University

CTC promotes safe and environmentally responsible tree climbing techniques for

9 January 2025 10:50pm

Hi Dominique,

Thanks for your responses and congratulations on getting trained!

I can see that speaking directly with a climbing professional could be the most beneficial because what climbing methods and equipment you may need will depend very much on individual project goals and budgets. Did you end up speaking with your trainers about your field research goals and what climbing methods may be best for you?

9 January 2025 11:27pm

Hi Mark, thanks for responding. I think you've identified one of the most difficult parts of research climbing: maintaining your climbing skills and knowledge between field sessions.

My husband is an experienced arborist and practices his skills almost daily. I am not an arborist, so I schedule climbing time to keep my abilities fresh and my husband is there to assist. But I know it's difficult for individual researchers to practice on their own and they should only be climbing alone if experienced and not in a remote area.

However, it's possible to train researchers to safely climb in the field for multiple field sessions. My husband and I trained a group of climbers in Cameroon in January, 2024. The goal was to train four climbers who would go into the remote rainforest for several weeks and set up camera traps. They would deploy and retrieve arboreal cameras at different survey locations over two years. We needed to train the teams to operate safely and independently (without an instructor present) in very remote areas.

To train them sufficiently, my husband and I spent 1 month in Cameroon with the field team. We did a few days of basic training at a location near the city and then went with them on their initial camera deployment where we continued field training for 2.5 - 3 weeks. Before going to Cameroon, we had several discussions to determine what climbing method and equipment would best meet their data collection goals and were appropriate for their field site and conditions. We taught them basic rescue scenarios. We also set a climbing practice schedule for the team to maintain their climbing and rescue skills. We strongly emphasized to their management that the field team needed access to the climbing gear and needed to follow the practice schedule. Since the training, the team successfully finished two other camera trap surveys and is planning their third.

This was a lot of up-front training and cost. However, these climbers are now operating on their own and can continue operating for the foreseeable future. I think a big reason is that they received extensive training tailored to their needs. General tree-climbing courses are great for learning the basics, but they'll never be a substitute for in-field, tailored training.

What the mice get up to at night

6 January 2025 8:06am

7 January 2025 1:09pm

And I see now they can walk vertically up walls like Spider-Man.

Joint ecoacoustic & camera-trapping project in Indonesia!

1 August 2024 5:29pm

9 December 2024 3:41am

Awesome Carly, thanks. Yes helps a lot. Those all sound like big improvements over the hardware we're currently working with.

13 December 2024 7:42pm

Hi Carly,

That would be great! Send me a message and we'll put something together after the holidays.

4 January 2025 5:27pm

Hello Carly,

Congratulations on this project!

I am currently studying for a second MA in Environment Management. I would like to do my MA thesis project on these technologies (bioacoustics and camera traps). I wonder if you would be interested in collaborating with me on that?

I already have professional experience, so I think my contribution could be interesting for you.

Thank you in advance for answering,

Simon

Pytorch Wildlife v5 detection in thermal

28 December 2024 10:35am

29 December 2024 8:08pm

I added plain old motion detection because MegaDetector v5 was not working well with the smaller rat images and in thermal.

This works really well:

Also, I can see really easily where the rat comes into the shed, see this series:

Just visible

Clearly visible.

So now I have a way to build up a thermal dataset for training :-)

Solar powered Raspberry Pi 5

19 December 2024 11:40am

20 December 2024 2:37pm

Actually, you can use any BMS as long as it supports 1S, and that will be sufficient. However, I recommend connecting the batteries in parallel to increase the capacity and using just one BMS. Everything should run through the power output of the BMS.

That said, don’t rely too much on BMS units from China, as they might not perform well in the long run. I suggest getting a high-quality one or keeping a spare on hand.

20 December 2024 4:45pm

Don't they all come from China?

The one in the picture I can find on AliExpress for 2.53 euros.

I'm not sure how you would get one that doesn't come from China.

In any case, I know what to search for now, that's very helpful. Thank you.

What is the 1S thing you mention above?

If you have any links as to what you consider a high quality one that would be great!

21 December 2024 1:20am

Actually, you can source the product from anywhere, but I’m not very confident in the quality of products from China. That doesn’t mean products from China are bad—it might just be my bad luck. However, trust me, they can work just as well; you just need to ensure proper protection.

For 1S, it refers to the number of battery cells in series that the BMS supports. A 1S configuration for LiFePO4 means a single battery cell, which has a nominal voltage of about 3.2 V and is charged to roughly 3.6 V. Of course, you must not confuse a LiFePO4 BMS with a Li-Ion BMS, as the two types are not interchangeable and cannot be used together.

***However, you can use a power bank board designed for Li-Ion to draw power from the battery for use. But you must not charge the LiFePO4 battery directly without passing through a BMS; otherwise, your battery could be damaged.***

Announcement of Project SPARROW

18 December 2024 8:01pm

3 January 2025 6:48pm

Postdoc on camera trapping, remote sensing, and AI for wildlife studies

18 December 2024 2:14pm

Christmas wish list

16 December 2024 11:22am

16 December 2024 1:25pm

Oh there we go, you are selling this setup? Really cool!!!

Great idea, I just posted my requirements here:

Let's see if I can find my dream solution.

16 December 2024 1:33pm

Yes, I have these. I'm pretty sure that my company is the first company in the world to sell products running on Raspberry Pi 5 in secure boot mode as well :-)

I responded to your wish list. From your description a modified camera like a HIKvision turret might be able to do it for you.

16 December 2024 2:24pm

Great, these security cameras might be interesting for monitoring crop development and maybe other bigger pests like boars or other herbivorous animals that could eventually go into the fields, but this is not what I'm trying to solve now. What I'm also interested in is on-the-go weed species monitoring, for example on a robot, to create a high-resolution map of weed infestation in a field (10-20 hectares). There, such a modified camera could eventually make sense. Thanks for the ideas!!

Postdoc on camera trapping, remote sensing, and AI for wildlife studies

16 December 2024 9:52am

Mirror images - to annotate or not?

5 December 2024 8:32pm

7 December 2024 3:18pm

I will send you a DM on LinkedIn and try to find a time to chat

8 December 2024 12:36pm

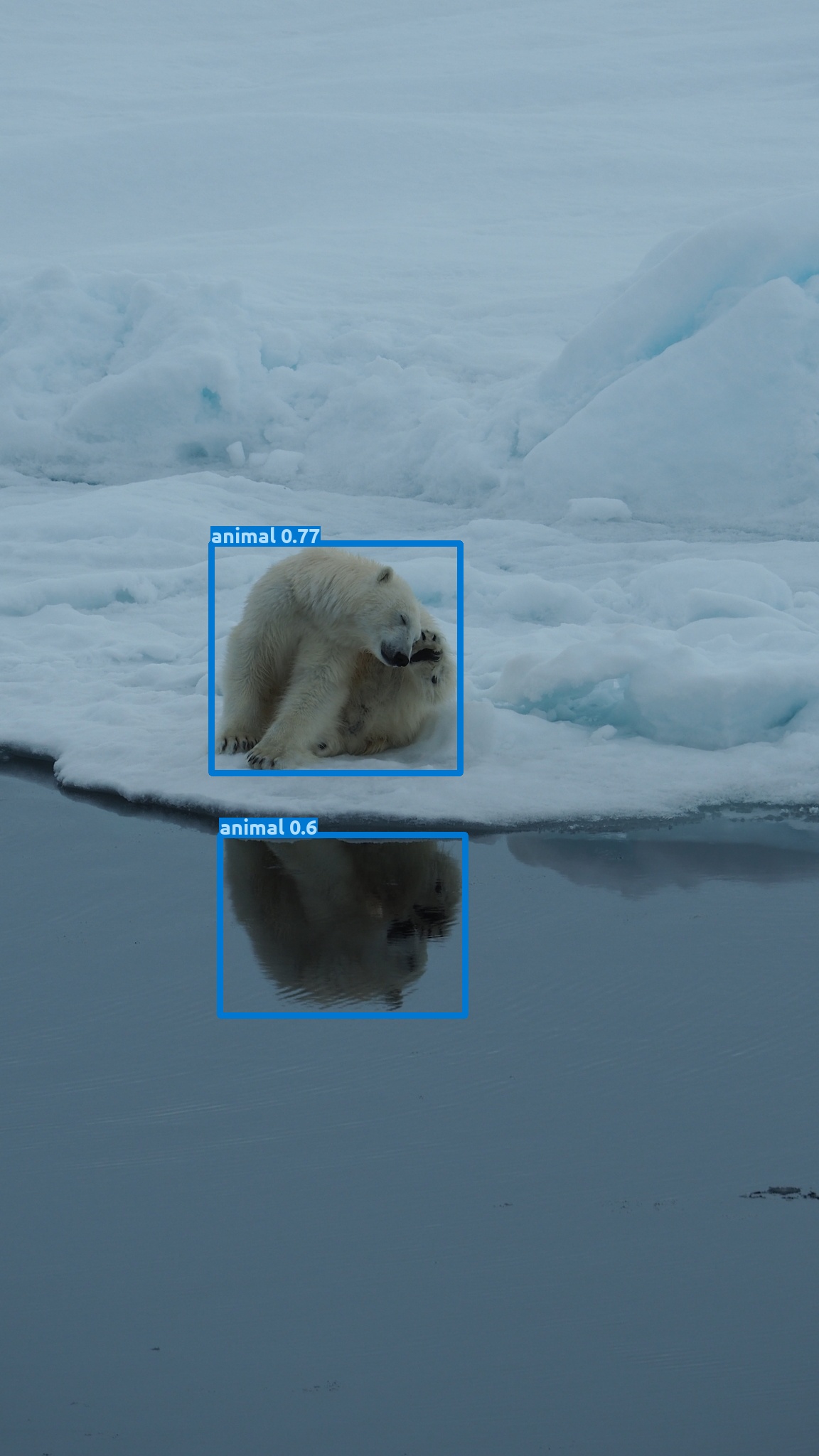

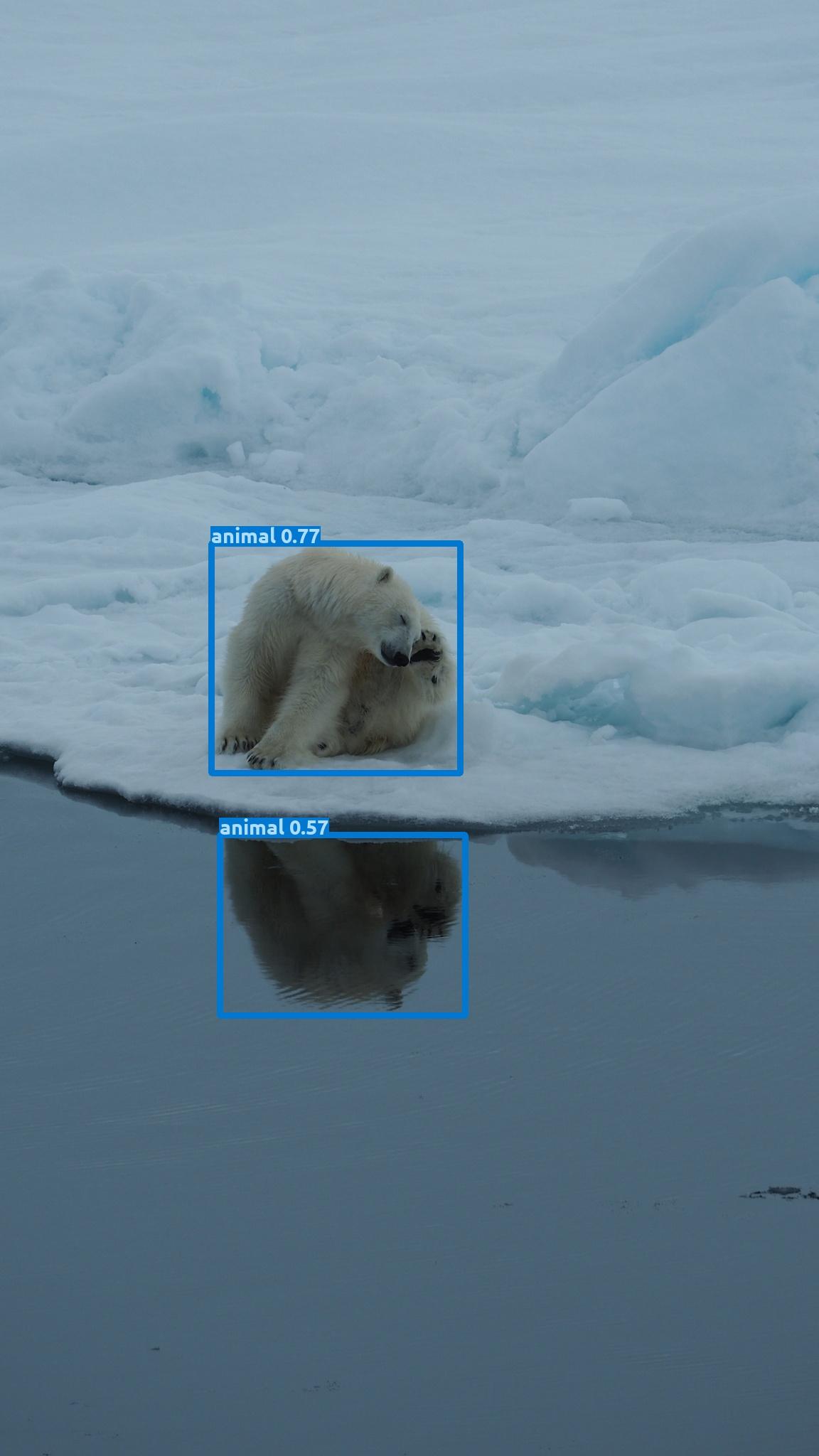

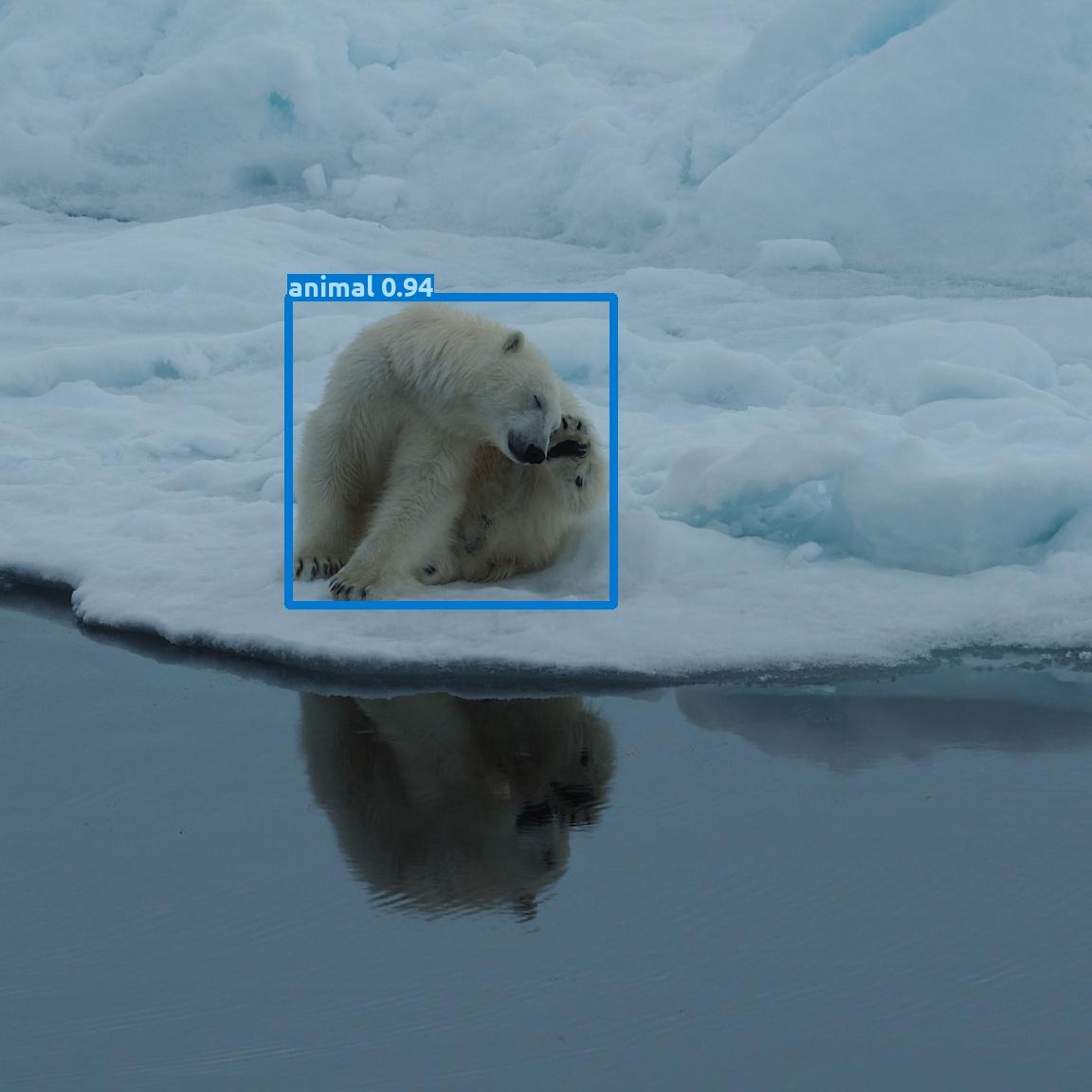

I made a few rotation experiments with MD5b.

Here is the original image (1152x2048):

When saving this as a copy in Photoshop, the confidence on the mirror image changes slightly:

and when just cropping to a (1152x1152) square it changes quite a bit:

The mirror image confidence drops below my chosen threshold of 0.2 but the non-mirrored image now gets a confidence boost.

Something must be going on with overall scaling under the hood in MD as the targets here have the exact same number of pixels.

I tried resizing to 640x640:

This bumped the mirror image confidence back over 0.2... but lowered the non-mirrored confidence a bit... huh!?

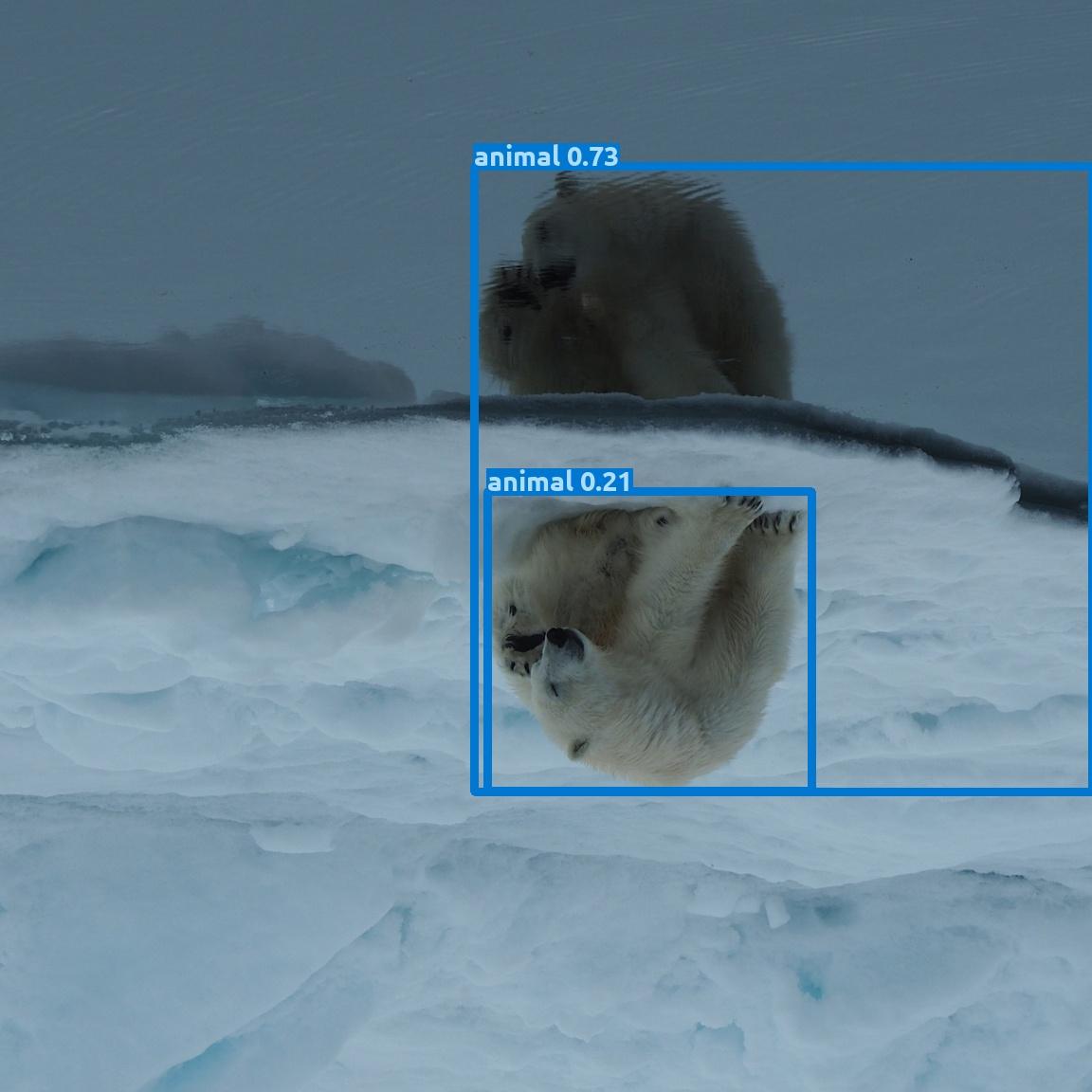

My original hypothesis was that the confidence could be somewhat swapped just by turning the image upside down (180 degree rotation):

Here is the 1152x1152 crop rotated 180 degrees:

The mirror part now got a higher confidence but it is interpreted as sub-part of a larger organism. The non-mirrored polar bear had a drop in confidence.

So my hypothesis was somewhat confirmed...

This leads me to believe that MD is not trained on many upside down animals ....

- and probably our PolarbearWatchdog! should not be either ... ;)
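On the scaling question raised in this post: detectors in the YOLO family typically resize each input so that its longer side fits a fixed network size before inference. That means the same animal can reach the network at a different pixel size after a crop, even though its pixel count in the file is unchanged. A minimal sketch of that arithmetic, assuming a 1280-pixel network input (a commonly cited default for MegaDetector v5, not confirmed in this thread) and a hypothetical 200-pixel target; the function names are illustrative only:

```python
def letterbox_scale(width: int, height: int, network_size: int = 1280) -> float:
    """Scale factor applied when the longer image side is fitted to network_size."""
    return network_size / max(width, height)


def size_at_network_input(object_px: float, width: int, height: int,
                          network_size: int = 1280) -> float:
    """Side length (in pixels) of an object after the resize step."""
    return object_px * letterbox_scale(width, height, network_size)


if __name__ == "__main__":
    # Image sizes taken from the post; the 200 px target width is an assumption.
    for label, (w, h) in {
        "original 1152x2048": (1152, 2048),
        "square crop 1152x1152": (1152, 1152),
    }.items():
        print(label, round(size_at_network_input(200, w, h), 1))
```

Under these assumptions the target is shrunk in the original frame but slightly enlarged in the square crop, which could be one reason the confidences shift between the two.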

9 December 2024 4:27pm

Seems like we should include some rotations in our image augmentations as the real world can be seen a bit tilted - as this cropped corner view from our fisheye at the zoo shows.

Recommended LoRa receiver capable microcontrollers

28 November 2024 8:40am

4 December 2024 4:21pm

@ioanF , you can do a little bit better than 10 mA. I have here an Adalogger RP2040 Feather with a DS3231 RTC wing and an I2S MEMS microphone. In "dormant" mode, running from XOSC and waiting for the DS3231 wakeup, I get 4.7 mA. This includes about 1 mA for the microphone and DS3231 together. OK, that is still 3 mA higher than the 0.7 mA that the RP2040 documentation is said to claim. I guess there is some uncertainty with the AT35 USB tester I'm using. With the LDO enable pin (EN) tied to ground, the USB tester reads 1 mA, which may be dominated by the offset of the tester, as the LiPo charger, the only remaining component, should consume no more than 0.2 mA.

Edit (for the record): After 'disabling' all GPIO except the RTC wakeup pin, the system (RP2040 + DS3231 RTC + I2S MEMS microphone) consumes only 1.8 mA. I guess I'm now close to the claimed dormant power consumption!
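To put the two dormant currents reported above in perspective, idealised battery life scales inversely with current draw. A rough sketch, assuming a hypothetical 2000 mAh battery and ignoring self-discharge, regulator losses, and the extra current drawn while recording:

```python
def runtime_days(capacity_mah: float, current_ma: float) -> float:
    """Idealised runtime in days for a constant current draw."""
    if current_ma <= 0:
        raise ValueError("current_ma must be positive")
    return capacity_mah / current_ma / 24.0


if __name__ == "__main__":
    CAPACITY_MAH = 2000.0  # assumed battery size, not from the post
    # Currents are the dormant-mode readings quoted in the post.
    for label, current in [("before GPIO changes", 4.7), ("after GPIO changes", 1.8)]:
        print(f"{label}: {runtime_days(CAPACITY_MAH, current):.0f} days")
```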

4 December 2024 8:24pm

A good candidate for low-power hibernation and processing power is the Teensy 4.1 from PJRC, which is an ARM Cortex M7. The standard clock is 600 MHz and there is provision for 16 MB PSRAM. It hibernates at 0.1 mA. More important than the specs, there is a very active community (the best, IMHO) on the Teensy Forum, with a direct connection to the developer (Paul Stoffregen). For a simple recorder, consumption is 50% higher than the RP2040 (Teensy running at 24 MHz and RP2040 running at 48 MHz, but the RP2040 is an M0+ and not an M7).

5 December 2024 6:08am

Thanks! The Teensys are nice for processing power; if choosing an external LoRa board, I’d say that’s a good choice. I started with Teensys; there was a well-supported code base and they were certainly well priced.

My preference is for one with onboard LoRa. I’ve used one with an onboard Murata LoRa module before. It was good both for low-power operation and for its LoRa operation.

For a transmitter, the Grasshopper, if it’s still being made, is quite good for small distances because it has an onboard ceramic antenna, which is good for about three houses away, although one was also received > 20 km away.

Individual Identification of Snow Leopard

25 November 2024 5:39am

1 December 2024 3:00pm

Hi Raza,

I manage a lot of snow leopard camera trap data also (I work for the Snow Leopard Trust). I am not aware of any AI solutions for snow leopards that can do all the work for us, but there are 'human-in-the-loop' approaches, in which AI suggests possible matches and a human makes the actual decision. Among these are Hotspotter and the algorithm in WildMe.

You can make use of Hotspotter in the software TrapTagger. I have found this software to be very useful when identifying snow leopards. Anecdotally, I think it improves the accuracy of snow leopard identifications. But, like I said, you still have to manually make the decisions; the results from Hotspotter are just a helpful guide.

The other cutting-edge solutions mentioned here (e.g. MegaDescriptor, linked above) will require a massive dataset of labelled individuals and considerable expertise in Python to develop your own solution. I had a quick look at the paper and they were getting around 60-70% accuracy for leopards, which is a much easier species than snow leopard. So I don't think this approach is useful, at least for now. Unless I've misunderstood something (others who deeply understand this, please chime in and correct me!).

Incidentally, I did try to gain access to WildMe / Whiskerbook last year but wasn't successful gaining an account. @holmbergius can you help me out? That would be appreciated, thanks!

Best of luck Raza, let me know if I can help more,

Ollie

1 December 2024 4:38pm

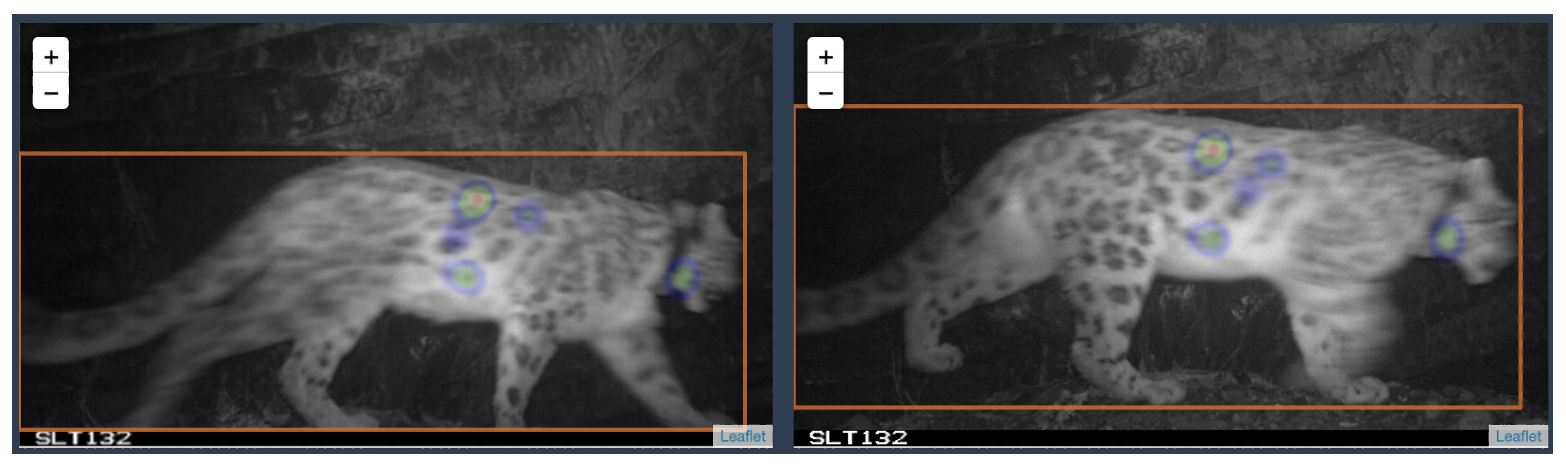

An example of Hotspotter doing quite a good job, even with blurry images, to successfully draw attention to matching parts of the body. This is a screenshot from TrapTagger, which very helpfully runs Hotspotter across your images.

Note that I wouldn't necessarily recommend ID'ing snow leopards from such poor imagery, but this just demonstrates that Hotspotter can sometimes do quite well even on harder images.

3 December 2024 11:03am

Hi Raza,

As @ollie_wearn suggests, I think TrapTagger will be the easiest for you:

You just have to follow the tutorial there:

TrapTagger Tutorial - YouTube

The full TrapTagger tutorial. It is recommended that you start here, and watch through all the videos in order. You can then revisit topics as needs be.

The person in charge of TrapTagger is also very responsive.

best,

AmazonTEC: 4D Technology for Biodiversity Monitoring in the Amazon (English)

25 November 2024 8:33pm

GreenCrossingAI Project Update

22 November 2024 6:10pm

MegaDetector V6 and Pytorch-Wildlife V1.1.0 !

9 November 2024 3:34am

11 November 2024 7:57pm

Hello Patrick, thanks for asking! We are currently working on a bioacoustics module and will be releasing some time early next year. Maybe we can have some of your models in our initial bioacoustics model zoo, or if you don't have a model yet but have annotated datasets, we can probably train some models together? Let me know what you think!

11 November 2024 7:58pm

Thank you so much! We are also working on a bounding box based aerial animal detection model. Hopefully will release sometime early next year as well. It would be great to see how the model runs on your aerial datasets! We will keep you posted!

22 November 2024 5:13pm

Hi Zhongqi! We are finalizing our modelling work over the next couple of weeks and can make our work available for your team. Our objective is to create small (<500k parameters) quantized models that can run on low-power ARM processors. We have custom hardware that we built around them and will be deploying back in Patagonia in March 2025. Would be happy to chat further if interested!

We have an active submission to Nature Scientific Data with the annotated dataset. Once that gets approved (should be sometime soon), I can send you the figshare link to the repo.

Instant Detect 2.0 and related cost

16 November 2023 12:50am

11 November 2024 9:16am

Sam, any update on Instant Detect 2.0? Previously you mentioned that you hoped to go into volume production by mid-2024.

I would also love to see a comparison between Instant Detect 2.0 and Conservation X Labs' Sentinel products if anyone has done one.

Are there any other similar solutions currently on the market - specifically with the images over LoRa capability, and camera to satellite solution?

11 November 2024 6:41pm

Nightjar comes to mind but I am not too sure if it is actually “on the market”…

19 November 2024 6:44pm

There are quite a few DIY or prototype solutions described online and in the literature - but it seems none of these have made it to market yet as generally available, fully usable products. We can only hope.

Inquiry About e-con Systems/arducam Cameras for Camera Trapping Projects

19 November 2024 1:35pm

19 November 2024 2:44pm

I think the big thing is power consumption. Commercial camera traps have a large power (current) dynamic range. That means they can often swing from ~0.1 mA to ~1000 mA of current within a few milliseconds. It's often difficult to replicate that in DIY systems which is why you don't see a lot of Raspberry Pi camera traps. The power consumption is often too high and the boot time is too long.

One of the big challenges is powering down the system so that it's essentially in sleep mode and having it wake up in less than a second. That said, if you're mainly doing time lapse or don't have the strict speed requirements to wake up that quickly, it may make sense to roll your own camera trap.

Anyways, hope I'm not being too discouraging. It never hurts to give it a shot and please feed back your experiences to the forum. I'd love to hear reviews about Arducam and it's my first time hearing about e-con Systems.

Akiba
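The dynamic-range point in this post can be made concrete with a time-weighted average: a device that sleeps at a fraction of a milliamp and only briefly draws a high current ends up with a very low average. A minimal sketch; the 0.1 mA and 1000 mA figures come from the post, while the trigger rate, active time per trigger, and the always-on board's 400 mA draw are assumptions chosen purely for illustration:

```python
def average_current_ma(sleep_ma: float, active_ma: float,
                       active_s_per_event: float, events_per_day: float) -> float:
    """Time-weighted average current for a system that sleeps between triggers."""
    seconds_per_day = 24 * 60 * 60
    # Cap active time at a full day in case the inputs imply more than 24 h.
    active_s = min(active_s_per_event * events_per_day, seconds_per_day)
    sleep_s = seconds_per_day - active_s
    return (sleep_ma * sleep_s + active_ma * active_s) / seconds_per_day


if __name__ == "__main__":
    # Deep-sleeping trap: 0.1 mA asleep, 1000 mA for 2 s, 50 triggers per day (assumed).
    print(f"{average_current_ma(0.1, 1000.0, 2.0, 50):.2f} mA")
    # Board that cannot sleep deeply: assumed 400 mA between the same triggers.
    print(f"{average_current_ma(400.0, 1000.0, 2.0, 50):.2f} mA")
```

A long boot time can be folded into `active_s_per_event`, which is one way to see why slow-waking boards fare poorly for motion-triggered use.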

AI Animal Identification Models

30 March 2023 5:01am

6 November 2024 6:50am

I trained the model tonight. Much better performance! mAP has gone from 73.8% to 82.0% and, anecdotally, it behaves better when I run my own images through it.

After data augmentation (horizontal flip, 30% crop, 10° rotation) my image count went from 1194 total images to 2864 images. I trained for 80 epochs.
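When an augmentation such as the horizontal flip mentioned above is applied to detection training data, the bounding boxes have to be transformed along with the pixels. A minimal sketch, assuming boxes in the normalised YOLO label format (x_center, y_center, width, height, all between 0 and 1); the function name is illustrative and not taken from any library:

```python
from typing import List, Tuple

# (x_center, y_center, width, height), all normalised to the 0..1 range
Box = Tuple[float, float, float, float]


def hflip_boxes(boxes: List[Box]) -> List[Box]:
    """Mirror normalised YOLO boxes to match a horizontally flipped image."""
    # Only the x centre changes; width, height, and y centre are unaffected.
    return [(1.0 - xc, yc, w, h) for (xc, yc, w, h) in boxes]


if __name__ == "__main__":
    print(hflip_boxes([(0.25, 0.5, 0.1, 0.2)]))
```

Augmentation libraries usually handle this bookkeeping when boxes are passed in alongside the image, so a hand-rolled version like this is mainly useful for checking that labels still line up.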

6 November 2024 8:56am

Very nice!

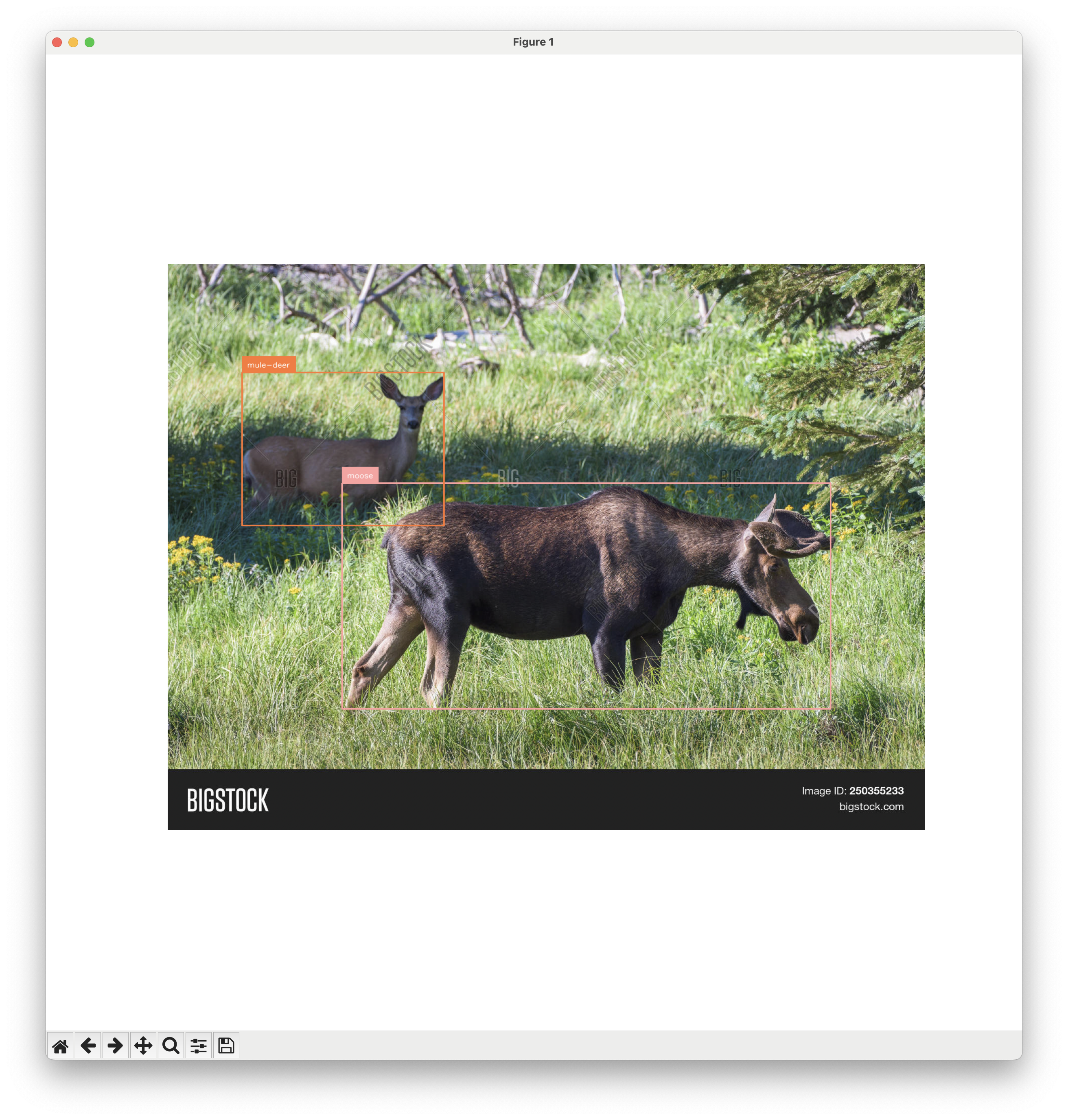

I thought it would retain all the classes of YOLO (person, bike, etc) but it doesn't. This makes a lot of sense as to why it is seeing everything as a moose right now!

I had the same idea. ChatGPT says there should be possibilities though...

You may want to explore more "aggressive" augmentations, like the ones possible with Albumentations transforms, to boost your sample size.

Or you could expand the sample size by combining with some of the annotated datasets available at LILA BC like:

Caltech Camera Traps - LILA BC

This data set contains 244,497 images from 140 camera locations in the Southwestern United States, with species-level labels for 22 species, and approximately 66,000 bounding box annotations.

North American Camera Trap Images - LILA BC

This data set contains 3.7M camera trap images from five locations across the United States, with species-level labels for 28 species.

Cheers,

Lars

15 November 2024 5:30pm

As others have said, pretty much all image models at least start with general-subject datasets ("car," "bird," "person", etc.) and have to be refined to work with more precision ("deer," "antelope"). A necessity for such refinement is a decent amount of labeled data ("a decent amount" has become smaller every year, but still has to cover the range of angles, lighting, and obstructions, etc.). The particular architecture (yolo, imagenet, etc.) has some effect on accuracy, amount of training data, etc., but is not crucial to choose early; you'll develop a pipeline that allows you to retrain with different parameters and (if you are careful) different architectures.

You can browse many available datasets and models at huggingface.co

You mention edge, so it's worth mentioning that different architectures have different memory requirements and then, within the architecture, there will generally be a series of models ranging from lower to higher memory requirements. You might want to use a larger model during initial development (since you will see success faster) but don't do it too long or you might misestimate performance on an edge-appropriate model.

In terms of edge devices, there is a very real capacity-to-cost trade-off. Arduinos are very inexpensive, but are very underpowered even relative to, e.g., Raspberry Pis. The next step up is small dedicated coprocessors such as the Coral line. Then you have the Jetson line from NVIDIA, which are extremely capable, but are priced more towards manufacturing/industrial use.

Products | Coral

Helping you bring local AI to applications from prototype to production

Q&A - AI for Conservation Office Hours 2025

Jake Burton

and 1 more

15 November 2024 11:20am

Apply Now: AI for Conservation Office Hours 2025

Jake Burton

and 1 more

13 November 2024 11:31am

Looking for feedback & testers for Animal Detect platform

6 November 2024 9:39am

6 November 2024 9:57am

Hi Eugene!

Interesting project!

I already signed up to test it!

Cheers,

Lars

Discussing an Open Source Camera Trap Project

2 April 2019 2:49am

29 October 2024 8:15am

Regarding using the openMV as a basis for an allrounder camera, we just published this:

30 October 2024 1:41pm

That looks like an amazing project. Congratulations!

4 November 2024 8:47pm

Thanks :)

We are working on making it smaller and simpler using the latest openMV board.

Southwest Florida - Trail Cam

3 November 2024 5:48pm

Automatic extraction of temperature/moon phase from camera trap video

29 November 2023 1:15pm

7 September 2024 9:44am

I just noticed that TrapTagger has integrated AI reading of timestamps for videos. I haven't had a chance to try it out yet, but it sounds promising.

28 October 2024 7:30pm

Small update. I uploaded >1000 videos from a spypoint flex camera and TrapTagger worked really well. Another program that I'm currently interested in is Timelapse which uses the file creation date/time. I haven't yet tried it, but it looks promising as well.

1 November 2024 12:29pm

Hi Lucy,

I now realised it is an old thread and you most likely have already found a solution long ago but this might be of interest to others.

As mentioned previously, it is definitely much better to take moon phase from the date and location. While moon phase in general is not a good proxy for illumination, the moon phase symbol on the video is even worse, as it generalises the moon cycle into a few discrete categories. For calculating moon phase you can use the suncalc package in R, but if you want a deeper look and a more detailed proxy for moonlight intensity, I wrote a paper on it

Biologically meaningful moonlight measures and their application in ecological research | Behavioral Ecology and Sociobiology

Light availability is one of the key drivers of animal activity, and moonlight is the brightest source of natural light at night. Moon phase is commonly us

with accompanying R package called moonlit

GitHub - msmielak/moonlit: moonlit - R package to estimate moonlight intensity for any given place and time

moonlit - R package to estimate moonlight intensity for any given place and time - msmielak/moonlit
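For readers who only need a continuous moon phase value from the timestamp rather than the few categories stamped on the video, a date-only approximation can be sketched in a few lines. This is not the suncalc or moonlit method: it assumes a mean lunar cycle counted from a known new moon (6 January 2000, 18:14 UTC), can be off by several hours, and ignores location, moonrise and moonset, and cloud cover, which is exactly what the more detailed moonlight measures address. Function names are illustrative:

```python
import math
from datetime import datetime, timedelta, timezone

SYNODIC_MONTH_DAYS = 29.530588853  # mean length of one lunar cycle
REFERENCE_NEW_MOON = datetime(2000, 1, 6, 18, 14, tzinfo=timezone.utc)


def moon_phase_fraction(when: datetime) -> float:
    """Position in the lunar cycle: 0.0 = new moon, 0.5 = full moon.

    `when` must be timezone-aware.
    """
    days = (when - REFERENCE_NEW_MOON).total_seconds() / 86400.0
    return (days / SYNODIC_MONTH_DAYS) % 1.0


def illuminated_fraction(when: datetime) -> float:
    """Approximate fraction of the lunar disc that is lit (0..1)."""
    return (1.0 - math.cos(2.0 * math.pi * moon_phase_fraction(when))) / 2.0


if __name__ == "__main__":
    half_cycle_later = REFERENCE_NEW_MOON + timedelta(days=SYNODIC_MONTH_DAYS / 2)
    print(round(illuminated_fraction(half_cycle_later), 3))
```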

When it comes to temperature I also agree that what is recorded in the camera is often very inconsistent so unless you have multiple cameras to average your measurements you are probably better off using something like NCEP/NCAR Reanalysis (again, there is an R package for that) but if you insist on extracting temperature from the picture, I tried it using tesseract and wrote a description here:

Good luck!
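If temperature does have to be read from the frame, the OCR step is usually followed by a parsing step, since OCR output tends to be noisy. A minimal sketch of the parsing step only, assuming the info-bar text has already been extracted into a string (for example with tesseract); the sample string, pattern, and function name are hypothetical and would need adapting to a given camera's stamp format:

```python
import re
from typing import Optional

# A signed number of up to three digits, an optional degree sign, then C or F.
_TEMP_PATTERN = re.compile(r"(-?\d{1,3})\s*°?\s*([CF])\b", re.IGNORECASE)


def parse_temperature_c(ocr_text: str) -> Optional[float]:
    """Return the first temperature found in OCR text, converted to Celsius."""
    match = _TEMP_PATTERN.search(ocr_text)
    if match is None:
        return None
    value = float(match.group(1))
    unit = match.group(2).upper()
    return value if unit == "C" else (value - 32.0) * 5.0 / 9.0


if __name__ == "__main__":
    # Hypothetical info-bar text as it might come out of an OCR pass.
    print(parse_temperature_c("2024-11-01 12:29:05  14°C  CAM01"))
```

Returning `None` for frames where nothing parses makes it easy to count failures before deciding whether the extracted values are worth using at all.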

7 January 2025 12:32pm

Hi Simon,

Did you already contact INBO? Both biologging and citizen science are big themes at INBO. Last year we had a master thesis on camera trapping invasive muntjac. You can send me a private message for more info!