This group aims to unite practitioners, researchers, and technologists working at the intersection of edge AI and conservation. With a growing need for real-time, on-device data processing in the field, edge computing is becoming a vital tool for monitoring ecosystems, detecting threats, and making decisions where internet connectivity is limited or nonexistent.

Our focus is on practical, field-tested applications of edge AI, such as smart camera traps, acoustic monitoring devices, sensor networks, and lightweight machine learning models deployed on low-power hardware. By bringing together diverse perspectives, from wildlife biologists deploying systems in remote areas to engineers building optimized edge pipelines, we hope to accelerate innovation, troubleshoot common obstacles, and improve system resilience in harsh environments.

The group will serve as a collaborative hub to share open-source tools, machine learning models, deployment workflows, power management strategies, and case studies. Members will also explore key topics like data sovereignty, ethical AI deployment in conservation, energy-efficient design, and the role of edge systems in community-based monitoring.

Through community calls, tutorials, expert talks, collaborative challenges, and potential hackathons or summer schools, we aim to foster a connected ecosystem of edge innovators tackling real-world environmental challenges. Whether you are deploying edge systems in the rainforest, prototyping solar-powered machine learning hardware, or just curious about where AI meets ecology, this group offers a space to connect, learn, and co-create.

Group curators

- @jennamkline

- She/Her

Imageomics Institute & ABC Global Climate Center

PhD Student @ OSU, Edge AI for Adaptive Animal Ecology Field Studies

- 0 Resources

- 7 Discussions

- 7 Groups

BSc in IoT Networks | MSc student in Advanced Engineering for Robotics & AI. AI projects on camera traps and edge bioacoustics for bird mapping. Open-source contributor to the Microsoft Camera Trap project. Nat Geo & Nature Conservancy marine conservation externship alumnus.

- 0 Resources

- 24 Discussions

- 1 Groups

No showcases have been added to this group yet.

- @jcolombini

- he/him

Electrical and Computer Engineer with an MSc in Robotics. Working on edge computing and ad hoc networks for urban fauna conservation.

- 0 Resources

- 2 Discussions

- 3 Groups

Woods Hole Oceanographic Institution

Behavioral ecologist and bioacoustician

- 0 Resources

- 3 Discussions

- 1 Groups

- @Javan_INITIAM

- He/Him/His

Nature Conservation Center

Javan BUTIRA is a young Congolese leader committed to technological innovation, sustainable development, and social entrepreneurship. As the Principal Coordinator and Executive Director of INITIAM (Enhanced Intelligent Technological Initiative), he leads several innovative projects.

- 0 Resources

- 0 Discussions

- 28 Groups


We are thrilled to start a brand new group: Edge Computing! Meet Jenna Kline and Youssef Bayouli, the two Group Leaders for the 2025-2026 term.

19 August 2025

The Marine Innovation Lab for Leading-edge Oceanography develops hardware and software to expand the ocean observing network and for the sustainable management of natural resources. For Fall 2026, we are actively...

24 July 2025

New paper - "acoupi integrates audio recording, AI-based data processing, data management, and real-time wireless messaging into a unified and configurable framework. We demonstrate the flexibility of acoupi by...

7 February 2025

Article

SPARROW: Solar-Powered Acoustics and Remote Recording Observation Watch

18 December 2024

Applications are now open for an open capital funding opportunity for projects demonstrating innovative approaches toward environmental monitoring at a sensor or systems-based level.

31 August 2023

We've now wrapped our 2023 AI for Conservation Office Hours, where we helped conservationists connect with AI experts to get tailored advice on AI and ML problems in their projects.

11 May 2023

The Department of Ecoscience, Aarhus University, invites applications for a postdoc position to strengthen our team on image recognition and deep learning in ecology. Specifically, the candidate will further develop...

9 May 2023

David Will, Head of Innovation @ Island Conservation & Charles Ferland, VP & GM of Edge Computing & Communication Service Providers @ Lenovo

13 June 2022

Our friends at Edge Impulse are proud to announce that they have become the first AI company to join 1% for the Planet, pledging to donate 1% of revenue to support nonprofit organizations focused on the environment. To...

15 January 2021


| Description | Activity | Groups | Updated |
|---|---|---|---|
| Hi Chris! Send me a direct message and we can have a chat! | +5 | AI for Conservation, Autonomous Camera Traps for Insects, Camera Traps, Edge Computing | 4 days 11 hours ago |
| Hello Youssef, Yes, let's keep contact. I'm currently looking for good conferences to publish my work, if you are aware of something, please let me know. | +53 | AI for Conservation, Community Base, Edge Computing | 1 month ago |
| @LukeD, I am looping in @Kamalama997 from the TRAPPER team who is working on porting MegaDetector and other models to RPi with the AI HAT+. Kamil will have more specific questions. |  | AI for Conservation, Camera Traps, Edge Computing | 3 months 2 weeks ago |
| As others have said, pretty much all image models at least start with general-subject datasets ("car," "bird," "person", etc.) and have to be refined to work with more precision... | +20 | AI for Conservation, Camera Traps, Edge Computing | 9 months ago |
| great project !! |  | Camera Traps, Edge Computing | 1 year ago |
| Yeah that would be great - I have done a little looking into it today and I have some ideas. I'd love to collab. I will DM you | +16 | Acoustics, Edge Computing | 1 year ago |
| Great work, unfortunately, I'm not familiar with programming but computer friendly enough to follow a good tutorial. I was wondering if you will share your findings? Thanks for the... | +2 | Camera Traps, Edge Computing | 2 years 10 months ago |
| Dear Community, Together with Hackster.io, Seeed Studio is very happy to jointly organize “IoT Into the Wild Contest for Sustainable Plant... |  | AI for Conservation, Connectivity, Emerging Tech, Open Source Solutions, Sensors, Edge Computing | 3 years 2 months ago |
| There's a discussion over in camera traps about the design of a device to run autonomously (in an inaccessible location) with reliable... |  | AI for Conservation, Edge Computing | 6 years 1 month ago |

New Group Announcement: Edge Computing

Jenna Kline

and 1 more


19 August 2025 7:50pm

Mini AI Wildlife Monitor

25 June 2025 12:27pm

14 August 2025 12:55am

Aloha Luke,

This is an amazing tool that you have created. Are your cameras available for purchase? We use a lot of camera traps in the Hono O Na Pali Natural Area Reserve on Kauai to passively detect animals. We do not have the staff knowledge and capacity to build our own camera traps like this.

15 August 2025 7:53am

Hi Chris!

Send me a direct message and we can have a chat!

Tech4Nature Presents: 2025 Innovation Challenge Workshop Series - Registration Now Open!

6 August 2025 5:08pm

MS and PhD Opportunities in Ocean Engineering and Oceanography (Fall 2026)

24 July 2025 5:55am

Exploring the Wild Edge: A Proposal for a New WILDLABS Group

9 June 2025 5:41pm

13 July 2025 5:02pm

This sounds like a great idea; this is an area I want to do more work in.

Where can I sign up?

15 July 2025 5:43pm

Hey Stuart,

Thank you for your interest! We're glad you'd like to be part of our journey. We're still in the process of setting up the group, and we'll let you know as soon as we're ready.

Thanks for your understanding! 🤗

18 July 2025 2:22pm

Hello Youssef,

Yes, let's keep in touch. I'm currently looking for good conferences to publish my work; if you know of any, please let me know.

Listen to the Future: Mobilizing Bioacoustic Data to Meet Conservation Goals

5 May 2025 8:02pm

19 May 2025 6:36pm

AI Edge Compute Based Wildlife Detection

23 February 2025 5:24am

29 April 2025 3:20pm

Sorry, I meant ONE hundred million parameters.

The Jetson Orin NX has ~25 TOPS of FP16 performance, and the large YOLOv6 model processing 1280x1280 input requires about 673.4 GFLOPs per inference. You should therefore theoretically get ~37 fps (25 TOPS ÷ 673.4 GFLOPs ≈ 37). You're unlikely to get this exact number, but you should get around that...
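The estimate above is just peak throughput divided by the compute needed per frame. A minimal Python sketch of that arithmetic follows. The `utilization` parameter is a hypothetical addition (not from the post) for the fraction of peak throughput a real pipeline achieves, and, like the estimate itself, the sketch treats TOPS and GFLOPs as interchangeable counts of operations.

```python
def theoretical_fps(peak_tops: float, gflops_per_inference: float,
                    utilization: float = 1.0) -> float:
    """Upper-bound frames per second from peak accelerator throughput.

    peak_tops: advertised peak throughput, in tera-operations per second.
    gflops_per_inference: compute for one forward pass, in giga-operations.
    utilization: assumed fraction of peak actually achieved, in (0, 1].
    """
    if peak_tops <= 0 or gflops_per_inference <= 0:
        raise ValueError("throughput and per-inference compute must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    ops_per_second = peak_tops * 1e12 * utilization
    ops_per_inference = gflops_per_inference * 1e9
    return ops_per_second / ops_per_inference


# Figures quoted in the post: ~25 TOPS FP16, ~673.4 GFLOPs per 1280x1280 inference.
print(f"ideal: {theoretical_fps(25, 673.4):.1f} fps")
# Hypothetical 50% utilization, to show how quickly the ceiling drops.
print(f"at 50% utilization: {theoretical_fps(25, 673.4, 0.5):.1f} fps")
```

Real throughput also depends on memory bandwidth, pre/post-processing, and how well the model's layers map onto the accelerator, which is why the post calls the figure theoretical.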

Also, later YOLO models (7+) are much more efficient (they use fewer FLOPs for the same mAP50-95) and run faster.

Most inference-only neural network accelerators (like Hailo's) use INT8 models, and depending on your use case, the resulting drop in performance may be acceptable.
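To make "INT8 models" concrete: quantization maps floating-point weights onto 8-bit integers plus a scale factor, and the rounding step is where accuracy can be lost. The sketch below uses a simple symmetric, per-tensor scheme in NumPy. It is an illustrative assumption, not the procedure Hailo's (or any vendor's) toolchain uses; production toolchains typically also quantize activations using calibration data.

```python
import numpy as np


def quantize_int8_symmetric(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Map float values to int8 using one symmetric scale for the whole tensor."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float values from int8 codes."""
    return q.astype(np.float32) * scale


# Hypothetical layer weights, drawn at random for illustration.
rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(64, 64)).astype(np.float32)
q, scale = quantize_int8_symmetric(weights)
recovered = dequantize(q, scale)
max_err = float(np.max(np.abs(weights - recovered)))
print(f"scale={scale:.6f}, max abs error={max_err:.6f}")
```

With round-to-nearest, the per-weight error is bounded by half the scale; whether the accumulated effect on detections is tolerable is exactly the use-case question discussed in the reply below.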

29 April 2025 3:34pm

Ah I see, thanks for clarifying.

BTW, YOLOv7 actually came out earlier than YOLOv6. YOLOv6 has higher precision and recall figures, and I noticed that in practice it was slightly better.

My suspicion is that it's not trivial to translate the layer functions from YOLOv6 or YOLOv9 to Hailo-specific ones without affecting quality in unknown ways. If you manage to do it, do tell :)

The acceptability of a drop in performance depends heavily on the use case. In security, if I get woken up twice a night instead of once every six months, I don't care how fast it is; that's not acceptable for my use case.

I would imagine that for many wildlife traps as well, a false positive would mean having to travel out and reset the trap.

But as I haven't personally quantized down to 8 bits, I appreciate other people's insights on the subject. Thanks for sharing yours.
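The point about false positives can be put in rough numbers: a per-frame false-positive rate that looks tiny is multiplied by every frame the device processes. The sketch below assumes frames are independent and uses hypothetical values for the rates, frame rate, and active hours (none come from the thread). Consecutive frames are correlated in practice, so treat the output as an order-of-magnitude guide only.

```python
def expected_false_alarms(fp_rate_per_frame: float, frames_per_second: float,
                          active_hours: float) -> float:
    """Expected false alarms over one active period, assuming independent frames."""
    frames = frames_per_second * active_hours * 3600
    return fp_rate_per_frame * frames


# Hypothetical deployment: 1 frame per second over a 10-hour night (36,000 frames).
for rate in (1e-4, 1e-7):
    per_night = expected_false_alarms(rate, frames_per_second=1.0, active_hours=10.0)
    print(f"FP rate {rate:g}/frame -> {per_night:.4f} alarms/night, "
          f"about {1 / per_night:,.1f} nights between alarms")
```

A thousandfold difference in per-frame false-positive rate is roughly the gap between "several alarms a night" and "a few per year", which is why a modest accuracy loss from quantization can matter more than the speed gain.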

1 May 2025 7:32pm

@LukeD, I am looping in @Kamalama997 from the TRAPPER team who is working on porting MegaDetector and other models to RPi with the AI HAT+. Kamil will have more specific questions.

acoupi: An Open-Source Python Framework for Deploying Bioacoustic AI Models on Edge Devices

7 February 2025 1:39am

Announcement of Project SPARROW

18 December 2024 8:01pm

3 January 2025 6:48pm

AI Animal Identification Models

30 March 2023 5:01am

6 November 2024 6:50am

I trained the model tonight. Much better performance! mAP has gone from 73.8% to 82.0%, and running my own images through it, it anecdotally behaves better.

After data augmentation (horizontal flip, 30% crop, 10° rotation) my image count went from 1194 total images to 2864 images. I trained for 80 epochs.
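One detail worth checking when augmenting detection data offline: bounding-box labels have to be transformed together with the pixels. The sketch below shows this for the horizontal flip only, using NumPy and assuming YOLO-format labels (class, x_center, y_center, width, height, normalized to 0 to 1); the post does not say which label format was used. Crops and rotations need more bookkeeping, since boxes can be clipped or must be re-fitted, which augmentation libraries such as Albumentations handle for you.

```python
import numpy as np


def hflip_with_yolo_boxes(image: np.ndarray,
                          boxes: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Flip an (H, W, C) image left-right and mirror its YOLO-format boxes.

    boxes: shape (N, 5), rows [class_id, x_center, y_center, w, h] with
    coordinates normalized to [0, 1]. Only x_center changes under a horizontal flip.
    """
    flipped_image = image[:, ::-1, :].copy()
    flipped_boxes = boxes.astype(np.float64, copy=True)
    flipped_boxes[:, 1] = 1.0 - flipped_boxes[:, 1]
    return flipped_image, flipped_boxes


# Hypothetical 4x6 RGB image with one box centred left of the middle.
image = np.arange(4 * 6 * 3, dtype=np.uint8).reshape(4, 6, 3)
boxes = np.array([[0, 0.25, 0.5, 0.2, 0.4]])
img_f, boxes_f = hflip_with_yolo_boxes(image, boxes)
print(boxes_f)  # x_center moves from 0.25 to 0.75
```

Applying the flip twice returns the original image and labels, which is a cheap sanity check to run on any augmentation pipeline before training.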

6 November 2024 8:56am

Very nice!

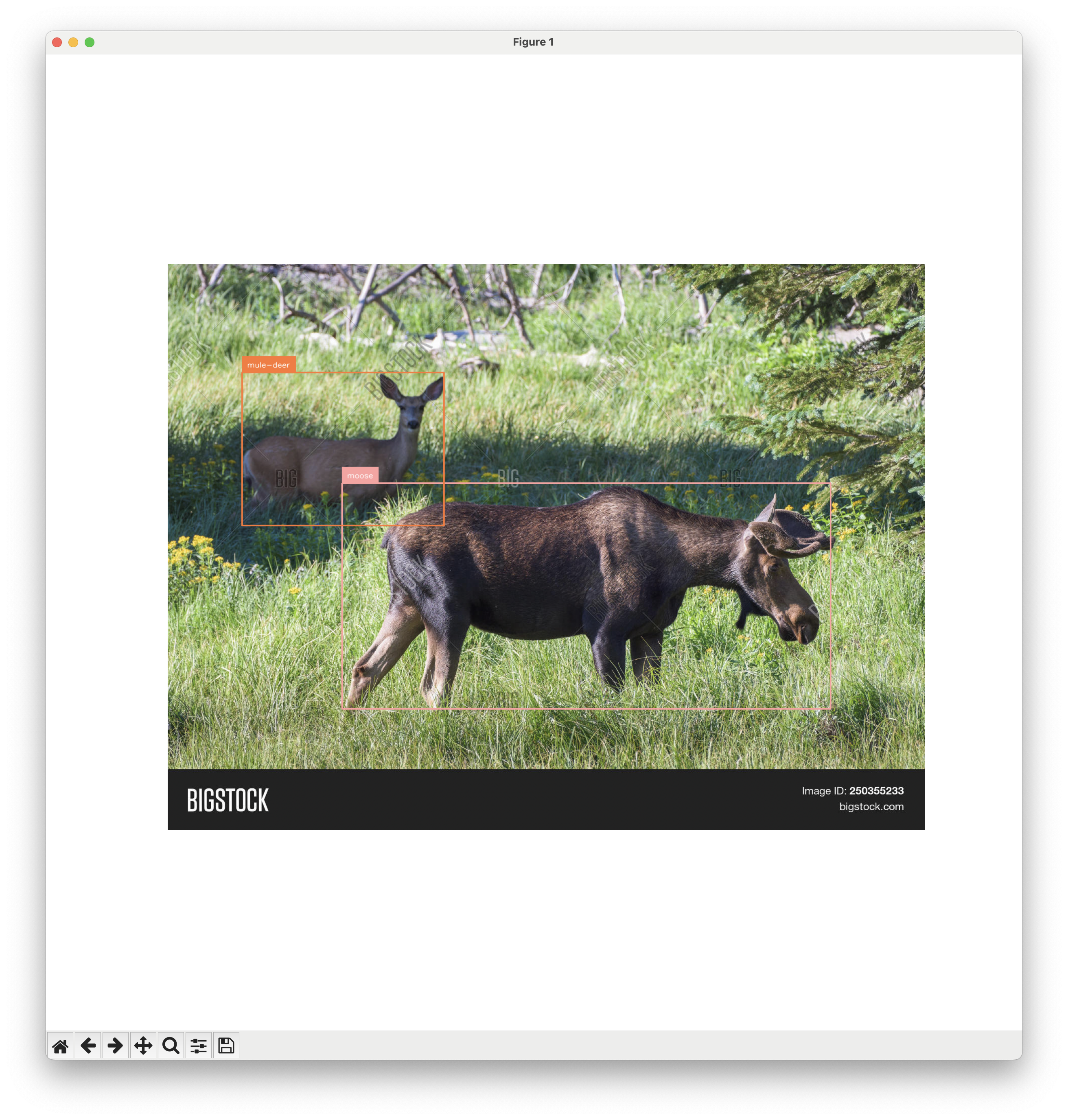

I thought it would retain all of YOLO's classes (person, bike, etc.), but it doesn't. That explains why it is seeing everything as a moose right now!

I had the same idea. ChatGPT says there should be possibilities though...
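The behaviour above follows from fine-tuning with a new class list: a detector can only output the classes present in the labels it was trained on. One common workaround is to train on a combined dataset whose labels share a single merged class list. The sketch below remaps YOLO-format label lines from two datasets onto such a list. The class names are hypothetical, and it assumes both datasets use YOLO text labels.

```python
def merge_class_lists(*class_lists: list[str]) -> list[str]:
    """Union of class names, preserving first-seen order so earlier IDs stay stable."""
    merged: list[str] = []
    for classes in class_lists:
        for name in classes:
            if name not in merged:
                merged.append(name)
    return merged


def remap_yolo_labels(lines: list[str], source_classes: list[str],
                      merged: list[str]) -> list[str]:
    """Rewrite 'class_id x y w h' lines so class IDs index into the merged list."""
    new_id = {name: i for i, name in enumerate(merged)}
    remapped = []
    for line in lines:
        parts = line.split()
        if not parts:  # skip blank lines
            continue
        name = source_classes[int(parts[0])]
        remapped.append(" ".join([str(new_id[name]), *parts[1:]]))
    return remapped


# Hypothetical class lists: a few pretrained classes plus a custom dataset.
pretrained = ["person", "bicycle", "car"]
custom = ["moose", "person"]
merged = merge_class_lists(pretrained, custom)
print(merged)
print(remap_yolo_labels(["0 0.5 0.5 0.2 0.3", "1 0.1 0.2 0.05 0.1"], custom, merged))
```

Remapping IDs is only half of it: the combined training set also needs labeled images of the original classes, otherwise the model has no examples from which to keep recognizing them.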

You may want to explore more "aggressive" augmentations, like the ones possible with Albumentations (see "Albumentations Transforms", a list of Albumentations transforms), to boost your sample size.

Or you could expand the sample size by combining your data with some of the annotated datasets available at LILA BC, like:

- Caltech Camera Traps (LILA BC): 244,497 images from 140 camera locations in the Southwestern United States, with species-level labels for 22 species and approximately 66,000 bounding box annotations.
- North American Camera Trap Images (LILA BC): 3.7M camera trap images from five locations across the United States, with species-level labels for 28 species.

Cheers,

Lars

15 November 2024 5:30pm

As others have said, pretty much all image models at least start with general-subject datasets ("car," "bird," "person," etc.) and have to be refined to work with more precision ("deer," "antelope"). Such refinement requires a decent amount of labeled data ("a decent amount" has become smaller every year, but it still has to cover the range of angles, lighting, obstructions, etc.). The particular architecture (YOLO, ImageNet-pretrained classifiers, etc.) has some effect on accuracy, the amount of training data needed, etc., but is not crucial to choose early; you'll develop a pipeline that allows you to retrain with different parameters and (if you are careful) different architectures.

You can browse many available datasets and models at huggingface.co.

You mention edge, so it's worth noting that different architectures have different memory requirements, and within each architecture there is generally a series of models ranging from lower to higher memory requirements. You might want to use a larger model during initial development (since you will see success faster), but don't stay with it too long, or you might misestimate performance on an edge-appropriate model.
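A first-pass check of whether a model fits an edge device is weight storage: parameter count times bytes per parameter at the chosen precision. The sketch below covers weights only; activations, runtime buffers, and framework overhead add to this, so treat the result as a lower bound. The 100-million-parameter figure is a hypothetical example.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_mb(num_params: int, precision: str) -> float:
    """Approximate storage for model weights alone, in megabytes (1 MB = 1e6 bytes)."""
    try:
        bytes_per_param = BYTES_PER_PARAM[precision]
    except KeyError:
        raise ValueError(f"unknown precision {precision!r}") from None
    return num_params * bytes_per_param / 1e6


# Hypothetical 100-million-parameter model at three precisions.
for precision in ("fp32", "fp16", "int8"):
    print(precision, weight_memory_mb(100_000_000, precision), "MB")
```

Halving the precision halves weight storage, which is one reason edge accelerators favour FP16 and INT8 models.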

In terms of edge devices, there is a very real capacity-to-cost trade-off. Arduinos are very inexpensive but very underpowered, even relative to, e.g., Raspberry Pis. The next step up is small dedicated coprocessors such as the Coral line (see Coral's "Products" page: "Helping you bring local AI to applications from prototype to production"). Then you have NVIDIA's Jetson line, which is extremely capable but priced more towards manufacturing/industrial use.

GreenCrossingAI project update

1 July 2024 7:59pm

31 July 2024 6:46pm

great project !!

Questions for Biologists relating to system requirements in acoustic research

6 July 2024 3:54am

21 July 2024 7:49pm

Hey @jamie_mac,

"These are FPODs from Chelonia" was an exceptional read. I love the implementation and would have a great time developing systems like that. It's a very clever method for low-power detection and would provide an ultra-fast turnaround for recording, whereas an AI model would take an entire detection window to start recording. It also makes sense that marine environments would be an exceptionally difficult place for species detection.

I am interested in your idea of an OS marine ARU system. I have been doing a little research on marine acoustics and have a few ideas. This seems right up my alley. If you'd be interested in playing around with the idea and seeing if we can make something that works, I could likely make quick work of this.
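To illustrate why a fixed trigger reacts faster than a model that analyses a whole window: a simple detector can decide on every short block of samples as it arrives. The sketch below is a generic short-time energy threshold in NumPy, offered only as an illustration of low-power triggering. It is not the FPOD's actual click-detection method, and the 10 ms frame length and 20 dB threshold are hypothetical values.

```python
import numpy as np


def energy_trigger(signal: np.ndarray, sample_rate: int, frame_ms: float = 10.0,
                   threshold_db: float = 20.0) -> np.ndarray:
    """Return indices of frames whose energy exceeds the median frame energy by threshold_db."""
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    n_frames = len(signal) // frame_len
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    energy = np.mean(frames.astype(np.float64) ** 2, axis=1) + 1e-12  # avoid log(0)
    noise_floor = np.median(energy)  # crude background estimate over the whole clip
    ratio_db = 10.0 * np.log10(energy / noise_floor)
    return np.flatnonzero(ratio_db > threshold_db)


# Synthetic test: 1 s of low-level noise at 48 kHz with a 10 ms tone burst at 0.5 s.
sample_rate = 48_000
rng = np.random.default_rng(1)
signal = rng.normal(0.0, 0.001, sample_rate)
t = np.arange(480) / sample_rate
signal[24_000:24_480] += 0.5 * np.sin(2 * np.pi * 5_000 * t)
idx = energy_trigger(signal, sample_rate)
print(idx)
```

On a real device the noise floor would be tracked with a running estimate over streaming buffers rather than a median over a stored clip, and a trigger like this would typically gate recording or a heavier classifier rather than replace it.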

22 July 2024 8:19am

Hi Morgan.

We are actually at the very early stages of developing a new OS marine PAM device. This is a side project (read: no funding currently), but if you're interested, I'd be happy to have a chat about what we are doing.

23 July 2024 2:40am

Yeah that would be great - I have done a little looking into it today and I have some ideas. I'd love to collab. I will DM you

Innovation in Environmental Monitoring

31 August 2023 11:31pm

AI for Conservation Office Hours: 2023 Review

Dan Morris

and 1 more


11 May 2023 10:00am

Postdoc for image-based insect monitoring with computer vision and deep learning

9 May 2023 12:27pm

MegaDetector on Edge Devices ??

19 February 2021 12:30am

30 August 2022 5:05am

Has anyone tried running the MegaDetector model through an optimizer like Amazon SageMaker Neo? It can reduce the overall memory footprint and possibly speed up inference on devices like Raspberry Pi and Jetson.

27 September 2022 10:19am

Great work Luke @sheneman! Having a relatively lightweight bit of hardware to run MDv5 on will open up opportunities for many more people. The upfront financial cost of a Jetson Nano is an order of magnitude less than a computer with a beefy GPU.

I haven't used any of the NVIDIA edge devices yet. Do you think it would be possible for someone to make a disk image with MDv5 preinstalled, to lower the entry barrier of learning a new system and installing software, environments, packages, etc.?

A difficulty I have seen for some projects is not having access to or not having internet bandwidth to utilize cloud compute services. If anyone needs to churn through camera trap image processing in a remote field station this may now be possible!

10 October 2022 12:27pm

Great work! Unfortunately, I'm not familiar with programming, but I'm computer-friendly enough to follow a good tutorial. Will you be sharing your findings?

Thanks for the work.

Join Seeed’s “IoT Into the Wild Contest for Sustainable Planet 2022” on Hackster to Get 100 Free Hardware and to Win $14,000+ in Prizes!!

14 June 2022 11:04am

Join Seeed’s “IoT Into the Wild Contest for Sustainable Planet 2022” on Hackster to Get 100 Free Hardware and to Win $14,000+ in Prizes!!!

14 June 2022 9:14am

Video: Delivering edge computing on Robinson Crusoe Island (Chile) to preserve biodiversity

13 June 2022 2:08pm

Edge Impulse Becomes the First AI Company to Join 1% for the Planet

15 January 2021 12:00am

Event: Arm’s AI Virtual Tech Sessions

Arm


9 June 2020 12:00am

ML at the Edge

28 June 2019 8:16am

26 July 2025 7:04am

A side note: for insects you'll need a higher-resolution camera. The Mothbox project uses a 64MP camera (see "Parts List | Mothbox", Open Source Low Cost DIY Nocturnal Insect Monitoring).
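Why resolution matters for insects can be checked with simple arithmetic: the number of pixels across a subject is its size divided by the width of the field of view, times the sensor's horizontal pixel count. The sensor widths, 300 mm field of view, and 5 mm insect below are hypothetical round numbers, not Mothbox specifications (9,000 px is only roughly the width of a 64 MP sensor), and the sketch ignores lens distortion, focus, and motion blur.

```python
def pixels_on_target(object_mm: float, fov_width_mm: float, sensor_width_px: int) -> float:
    """Pixels spanning an object of a given size, assuming it lies in the focal plane."""
    if fov_width_mm <= 0:
        raise ValueError("field of view width must be positive")
    return object_mm * sensor_width_px / fov_width_mm


# Hypothetical setup: a 5 mm insect on a sheet imaged across a 300 mm field of view.
for label, width_px in (("1080p-class sensor", 1920), ("~64 MP-class sensor", 9000)):
    print(f"{label}: {pixels_on_target(5, 300, width_px):.0f} px across the insect")
```

A few dozen pixels may be enough to detect that something is there, but species-level identification usually needs fine detail such as wing venation or antennae, which is where the extra resolution pays off.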