Despite critical advancements in the tech solutions available to conservationists worldwide, many existing tools are cost-prohibitive in the landscapes that need them most. Additionally, those who create low-cost and open-source alternatives to pricey market tech often operate on tight budgets themselves, meaning they need more resources to promote their solutions to a broader market. We need increased communication around these solutions to highlight their availability, share lessons learned in their creation, and avoid duplication of efforts.

This group is a place to share low-cost, open-source devices for conservation; describe how they are used, including what needs they are addressing and how they fit into the wider conservation tech market; identify the obstacles in advancing the capacity of these technologies; and discuss the future of these solutions, particularly their sustainability and how best to collaborate moving forward. We welcome contributions from both makers and users, whether active or prospective.

Here is how we see the current OSS space and how this group plans to change it by supporting both makers and users:

Many users do not appreciate the significant benefits of open-source tech. This group will educate users about the advantages and the need for open-source tech, specifically in the context of ecology work. We achieve this by stimulating regular discussions on the forum and by encouraging and supporting users to use open-source tools wherever possible.

Building OSS can be difficult. We want to support both established and potential makers who wish to develop OSS. The OSS group is a place where makers can find funding opportunities, ask current and potential users questions, and share their technologies.

Using OSS can be difficult. We also want to support adopters of OSS tech. We do this by offering a place for users to share challenges they face and crowdsource advice on things like technology choice or technical support.

Makers do not know what users want or need. The OSS group is a place that facilitates conversations between makers and users. This will give users a voice and ensure that makers are aware of the needs of users, enabling them to build better solutions.

The community is small and scattered. We want to grow an inclusive community of OSS practitioners. Our goal is to become the go-to place for discussions on the topic where people feel a sense of belonging.

Resources for getting started

- How do I use open source remote sensing data in Google Earth Engine? | Tech Tutors

- How do I use open source remote sensing data to monitor fishing? | Tech Tutors

- Low Cost, Open Source Solutions | Virtual Meetup

- What would an open source conservation technology toolkit look like? | Discussion

- December 2024 Open Source Solutions Community Call

Header image: Shawn F. McCracken

Group curators

- @Nycticebus_scientia

- | he/they

MammalWeb.org

Co-founded citizen science camera-trapping project with interest in developing 100% open source wildlife tech. Advocate for open science/open research. Former Community Councilor of the Gathering for Open Science Hardware.

- 4 Resources

- 25 Discussions

- 3 Groups

trying to understand and improve the welfare of all animals that can suffer

- 4 Resources

- 6 Discussions

- 7 Groups

- @briannajohns

- | she/they

Gathering for Open Science Hardware (GOSH)

Interested in the application of open source technologies for conservation research.

- 9 Resources

- 5 Discussions

- 4 Groups

Stop The Desert

Founder of Stop The Desert, leading global efforts in regenerative agriculture, sustainable travel, and inclusive development.

- 0 Resources

- 3 Discussions

- 8 Groups

WILDLABS & Wildlife Conservation Society (WCS)

I'm the Bioacoustics Research Analyst at WILDLABS. I'm a marine biologist with a particular interest in the acoustic behavior of cetaceans. I'm also a backend web developer, hoping to use technology to improve wildlife conservation efforts.

- 40 Resources

- 38 Discussions

- 33 Groups

- @carlybatist

- | she/her

ecoacoustics, biodiversity monitoring, nature tech

- 113 Resources

- 361 Discussions

- 19 Groups

- @alexreyyap

- | Male

I am an embedded AI systems developer based in Davao City, Philippines.

- 0 Resources

- 0 Discussions

- 5 Groups

- @Kyle_Birchard

- | He/Him

Building tools for research and management of insect populations

- 0 Resources

- 0 Discussions

- 6 Groups

- @jsulloa

- | He/Him

Instituto Humboldt & Red Ecoacústica Colombiana

Scientist and engineer developing smart tools for ecology and biodiversity conservation.

- 3 Resources

- 22 Discussions

- 7 Groups

- @TaliaSpeaker

- | She/her

WILDLABS & World Wide Fund for Nature/ World Wildlife Fund (WWF)

I'm the Executive Manager of WILDLABS at WWF

- 23 Resources

- 64 Discussions

- 31 Groups

- @bluevalhalla

- | he/him

BearID Project & Arm

Developing AI and IoT for wildlife

- 0 Resources

- 55 Discussions

- 8 Groups

- @alex_shetnikov

- | he/him

Engineer and AGI developer from Russia. Passionate about wildlife protection and low-cost sensing systems. Creator of SwarmGuard.

- 0 Resources

- 3 Discussions

- 3 Groups

Aeracoop & Dronecoria

Computer engineer, Drone Pilot, Seed researcher, Wild Tech Maker

- 3 Resources

- 37 Discussions

- 11 Groups

- 0 Resources

- 0 Discussions

- 10 Groups

The Marine Innovation Lab for Leading-edge Oceanography develops hardware and software to expand the ocean observing network and for the sustainable management of natural resources. For Fall 2026, we are actively...

24 July 2025

Lead a growing non-profit to sustain open source solutions for open science!

19 July 2025

In this case, you’ll explore how the BoutScout project is improving avian behavioural research through deep learning—without relying on images or video. By combining dataloggers, open-source hardware, and a powerful...

24 June 2025

Weeds, by definition, are plants in the wrong place but Weed-AI is helping put weed image data in the right place. Weed-AI is an open source, searchable, weeds image data platform designed to facilitate the research and...

7 May 2025

Bringing you the latest in open-source technology for agriculture. This accompanies the Open Source Agriculture repository: Collating all open-source datasets, software tools and deployment platforms related to open-...

1 May 2025

Repository for all things open-source in agricultural technology (agritech) development by Guy Coleman. This accompanies the OpenSourceAg newsletter. Aiming to collate all open-source datasets and projects in agtech...

1 May 2025

Fires in Serengeti and Masai Mara National Parks have burned massive areas this year. With Google Earth Engine, it's possible to quantify burn severity using the normalized burn ratio function, then calculate the total...

29 April 2025
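For readers new to the normalized burn ratio mentioned in the post above, here is a minimal sketch of the arithmetic in plain Python. It is not Earth Engine code and not the author's workflow: the function names, the 0.27 dNBR threshold, and the 30 m pixel size are illustrative assumptions, and in practice the same calculation would run on whole satellite images rather than short lists of pixels.

```python
def nbr(nir, swir):
    """Normalized burn ratio for one pixel: (NIR - SWIR) / (NIR + SWIR)."""
    total = nir + swir
    # Guard against division by zero on empty or masked pixels
    return 0.0 if total == 0 else (nir - swir) / total


def burned_area_ha(pre, post, threshold=0.27, pixel_size_m=30.0):
    """Estimate burned area in hectares.

    pre, post: lists of (nir, swir) reflectance pairs for the same pixels,
    taken before and after the fire.
    threshold: assumed dNBR cutoff for counting a pixel as burned.
    pixel_size_m: assumed pixel edge length in metres.
    """
    burned_pixels = 0
    for (nir0, swir0), (nir1, swir1) in zip(pre, post):
        # dNBR = pre-fire NBR minus post-fire NBR; larger means more severe
        dnbr = nbr(nir0, swir0) - nbr(nir1, swir1)
        if dnbr >= threshold:
            burned_pixels += 1
    # Convert pixel count to square metres, then to hectares
    return burned_pixels * pixel_size_m ** 2 / 10_000


# Hypothetical reflectance values for three pixels
pre_fire = [(0.5, 0.1), (0.5, 0.1), (0.4, 0.2)]
post_fire = [(0.2, 0.4), (0.5, 0.1), (0.35, 0.2)]
area = burned_area_ha(pre_fire, post_fire)
```

Only the first pixel shows a large drop in NBR here, so it is the only one counted as burned. The threshold should be calibrated for the sensor and landscape in question.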

Conservation International is proud to announce the launch of the Nature Tech for Biodiversity Sector Map, developed in partnership with the Nature Tech Collective!

1 April 2025

Modern GIS is open technology, scalable, and interoperable. How do you implement it? [Header image: kepler.gl]

12 March 2025

Join the FathomNet Kaggle Competition to show us how you would develop a model that can accurately classify varying taxonomic ranks!

4 March 2025

Last month, we spent four days trekking through Cerro Hoya National Park in Panama, where we hiked from near sea level up to the cloud forest to deploy Mothboxes.

14 February 2025

Drone Photogrammetry & GIS Intermediate Course

Drone Photogrammetry & GIS Advanced Course

Drone Photogrammetry & GIS Introduction (Foundation) Course

| Description | Activity | Replies | Groups | Updated |
|---|---|---|---|---|
| want to join 2025 contest for humpback whale photos in Juneau Alaska of Kelp | | | Citizen Science, Conservation Tech Training and Education, Drones, Emerging Tech, Geospatial, Human-Wildlife Coexistence, Marine Conservation, Open Source Solutions | 3 hours 40 minutes ago |
| This is awesome!! | | | Conservation Tech Training and Education, Drones, Emerging Tech, Geospatial, Open Source Solutions | 19 hours 40 minutes ago |
| Hi Elsa, We have used InVEST for a pollinator project we supported (the crop pollination model - details here), and looking to using it more for marine and coastal... | | | Geospatial, Software Development, Climate Change, Funding and Finance, Marine Conservation, Open Source Solutions | 5 days ago |
| Kudos for such an innovative approach: integrating additional sensors with acoustic recorders is a brilliant step forward! I'm especially interested in how you tackle energy... | | | Acoustics, AI for Conservation, Latin America Community, Open Source Solutions | 5 days 14 hours ago |
| Hi Chris, Great to meet you, and I love that you called it convergent evolution! That’s exactly how it feels when two people independently reach a similar idea because the need... | | | Open Source Solutions | 1 week 2 days ago |
| Amazing. Thanks for pointing them out. Didn't see it. | | | Open Source Solutions | 4 weeks 1 day ago |
| Do you know a nonprofit or organization that is looking to work with students passionate about the environment? Code the Change... | | | AI for Conservation, Citizen Science, Conservation Tech Training and Education, Open Source Solutions, Software Development | 1 month 1 week ago |
| 15 years ago I had to rebuild the dams on a game reserve I was managing due to flood damage and neglect. How I wished there was an easier,... | | | Drones, Conservation Tech Training and Education, Data management and processing tools, Emerging Tech, Geospatial, Open Source Solutions | 3 months 4 weeks ago |
| Thank you for your comment Chris! Using these tools has made a huge difference in the way we can monitor and manage Invasive Alien Vegetation. I hope you are able to integrate... | | | AI for Conservation, Citizen Science, Conservation Tech Training and Education, Drones, Geospatial, Human-Wildlife Coexistence, Open Source Solutions | 1 month 3 weeks ago |
| I would love to hear updates on this if you have a mailing list or list of interested parties! | | | AI for Conservation, Community Base, Drones, Latin America Community, Marine Conservation, Open Source Solutions, Software Development | 1 month 4 weeks ago |
| Passing along a question from the Gathering for Open Science Hardware, asking about sources of funding to support in-person events... | | | Open Source Solutions, Funding and Finance, Community Base | 2 months ago |
| I'm excited to see this project begin; I think its focus on versatility and functionality for users in diverse environments will allow Trapper Keeper to have a broad impact,... | | | Camera Traps, Data management and processing tools, Emerging Tech, Open Source Solutions, Software Development | 2 months 1 week ago |

Updates on Mole-Rat Mystery Drone Project

10 June 2025 10:21am

12 June 2025 11:33am

Thank you Elsa :)

14 August 2025 6:07am

want to join 2025 contest for humpback whale photos in Juneau Alaska of Kelp

WOOHOO ITS WORKING!! Tech finds undetected decades old Alien Invasive Parent Plants in indigenous forest!

24 July 2025 12:21pm

13 August 2025 2:06pm

This is awesome!!

Anyone using InVEST?

10 July 2025 1:31pm

5 August 2025 7:27pm

If you're curious about InVEST, I just created a page in "The Inventory" (see link on this page) with some resources, and I wanted to highlight some potentially interesting models that are worth a look!

- Habitat Quality: this model uses habitat quality and rarity as proxies to represent the biodiversity of a landscape, estimating the extent of habitat and vegetation types across a landscape, and their state of degradation.

- Habitat Risk Assessment: this model evaluates risks posed to coastal and marine habitats in terms of exposure to human activities and the habitat-specific consequence of that exposure for delivery of ecosystem services.

- Crop Pollination: this model focuses on wild pollinators providing an ecosystem service. The model estimates insect pollinator nest sites, floral resources, and flight ranges to derive an index of pollinator abundance on each cell on a landscape. If desired, the model can create an index of the value of these pollinators to agricultural production, and attribute this value back to source cells.

7 August 2025 7:40pm

Elsa,

We have been working with InVEST for a number of years. We have found them helpful for conservation and scenario planning. Happy to share as helpful.

John

8 August 2025 2:23pm

Hi Elsa,

We have used InVEST for a pollinator project we supported (the crop pollination model - details here), and we are looking to use it more for marine and coastal applications, so we really appreciate the details you shared here!

Cheers,

Liz

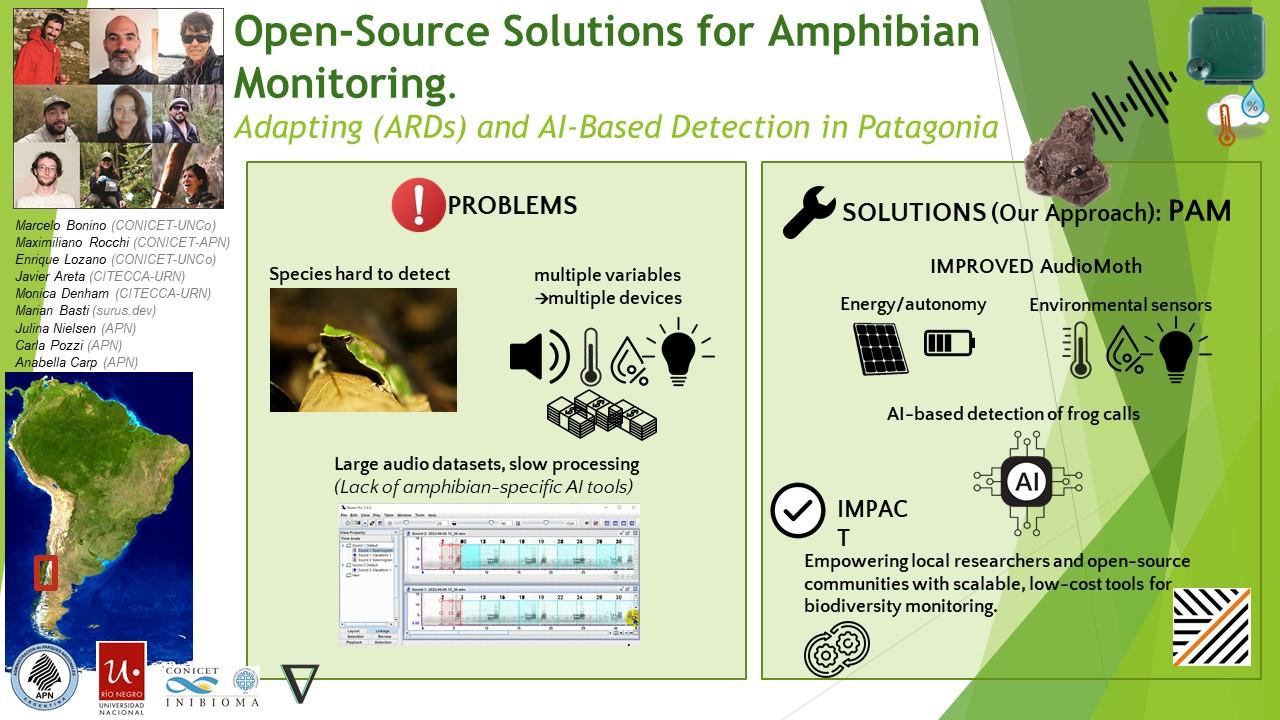

🐸 WILDLABS Awards 2025: Open-Source Solutions for Amphibian Monitoring: Adapting Autonomous Recording Devices (ARDs) and AI-Based Detection in Patagonia

27 May 2025 8:39pm

7 August 2025 9:27pm

Project Update: Sensors, Sounds, and DIY Solutions

We continue making progress on our bioacoustics project focused on the conservation of Patagonian amphibians, thanks to the support of WILDLABS. Here are some of the areas we’ve been working on in recent months:

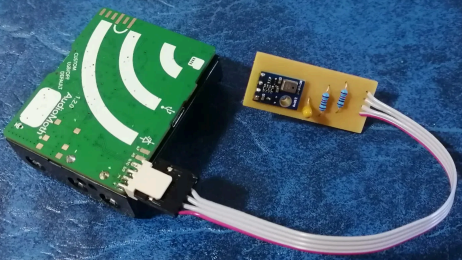

1. Hardware

One of our main goals was to explore how to improve AudioMoth recorders to capture not only sound but also key environmental variables for amphibian monitoring. We tested an implementation of the I2C protocol using bit banging via the GPIO pins, allowing us to connect external sensors. The modified firmware is already available in our repository:

👉 https://gitlab.com/emiliobascary/audiomoth

We are still working on managing power consumption and integrating specific sensors, but the initial tests have been promising.
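For anyone unfamiliar with bit banging, the sketch below shows the general idea in Python: the I2C signals are produced by toggling two ordinary pins in software instead of using a dedicated I2C peripheral. This is an illustration only, not the firmware in the repository above (which targets the AudioMoth's microcontroller). The `SimulatedPins` class, the function names, and the `0x40` address are hypothetical stand-ins, and real code would also need timing delays, clock stretching, and read support.

```python
class SimulatedPins:
    """Hypothetical stand-in for two GPIO lines; records every level driven."""

    def __init__(self, ack=True):
        self.ack = ack      # whether the pretend sensor acknowledges bytes
        self.log = []       # (line, level) tuples in the order they were driven

    def set_sda(self, level):
        self.log.append(("SDA", level))

    def set_scl(self, level):
        self.log.append(("SCL", level))

    def read_sda(self):
        # A real device pulls SDA low to acknowledge; here we fake it.
        return 0 if self.ack else 1


def i2c_start(pins):
    # START condition: SDA falls while SCL is high
    pins.set_sda(1)
    pins.set_scl(1)
    pins.set_sda(0)
    pins.set_scl(0)


def i2c_stop(pins):
    # STOP condition: SDA rises while SCL is high
    pins.set_sda(0)
    pins.set_scl(1)
    pins.set_sda(1)


def i2c_write_byte(pins, byte):
    # Shift out 8 bits, most significant first; SDA changes only while SCL is low
    for bit in range(7, -1, -1):
        pins.set_sda((byte >> bit) & 1)
        pins.set_scl(1)
        pins.set_scl(0)
    # Release SDA and clock a ninth bit to sample the device's ACK
    pins.set_sda(1)
    pins.set_scl(1)
    acked = pins.read_sda() == 0
    pins.set_scl(0)
    return acked


def i2c_write(pins, address, payload):
    """Write payload bytes to a 7-bit address; True if every byte was ACKed."""
    i2c_start(pins)
    try:
        # Address byte: 7-bit address shifted left, low bit 0 means "write"
        if not i2c_write_byte(pins, (address << 1) | 0):
            return False
        return all(i2c_write_byte(pins, b) for b in payload)
    finally:
        i2c_stop(pins)      # always release the bus


def sampled_bits(pins):
    """Replay the log and return the SDA level driven at each rising SCL edge."""
    sda, scl, bits = 1, 1, []
    for line, level in pins.log:
        if line == "SDA":
            sda = level
        else:
            if scl == 0 and level == 1:
                bits.append(sda)
            scl = level
    return bits


pins = SimulatedPins()
ok = i2c_write(pins, 0x40, [0xA5])   # 0x40 is a made-up sensor address
```

On real hardware the `set_*` and `read_sda` methods would wrap the microcontroller's GPIO registers, with short delays between edges to respect the bus speed.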

2. Software (AI)

We explored different strategies for automatically detecting vocalizations in complex acoustic landscapes.

BirdNET is by far the most widely used, but we noted that it’s implemented in TensorFlow — a library that is becoming somewhat outdated.

This gave us the opportunity to reimplement it in PyTorch (currently the most widely used and actively maintained deep learning library) and begin pretraining a new model using AnuraSet and our own data. Given the rapid evolution of neural network architectures, we also took the chance to experiment with Transformers — specifically, Whisper and DeltaNet.

Our code and progress will be shared soon on GitHub.

3. Miscellaneous

Alongside hardware and software, we’ve been refining our workflow.

We found interesting points of alignment with the “Safe and Sound: a standard for bioacoustic data” initiative (still in progress), which offers clear guidelines for folder structure and data handling in bioacoustics. This is helping us design protocols that ensure organization, traceability, and future reuse of our data.

We also discussed annotation criteria with @jsulloa to ensure consistent and replicable labeling that supports the training of automatic models.

We're excited to continue sharing experiences with the Latin America Community. We know we share many of the same challenges, but also great potential to apply these technologies to conservation in our region.

7 August 2025 10:02pm

Love this team <3

We would love to connect with teams also working on the whole AI pipeline: pretraining, fine-tuning, and deployment! Training of the models is in progress, and we know lots can be learned from your experiences!

Also, we are approaching the UI design and development from the software-on-demand philosophy. Why depend on third-party software, having to learn, adapt and comply to their UX and ecosystem? Thanks to agentic AI in our IDEs we can quickly and reliably iterate towards tools designed to satisfy our own specific needs and practices, putting the researcher first.

Your ideas, thoughts or critiques are very much welcome!

8 August 2025 1:20pm

Kudos for such an innovative approach: integrating additional sensors with acoustic recorders is a brilliant step forward! I'm especially interested in how you tackle energy autonomy, which I personally see as the main limitation of AudioMoths.

Looking forward to seeing how your system evolves!

Tech4Nature Presents: 2025 Innovation Challenge Workshop Series - Registration Now Open!

6 August 2025 5:09pm

SwarmGuard: low-cost autonomous mesh system to detect poaching threats and protect wildlife

15 June 2025 7:03pm

17 June 2025 4:56pm

Hi Aleksey, thanks for sharing this! We'd love to see you add SwarmGuard to The Inventory, our wiki-style database of conservation tech products, R&D projects, and organizations. To learn about how to add SwarmGuard to The Inventory, read the user guide my colleague @JakeBurton created. Reach out to either one of us with questions!

21 July 2025 2:41pm

Good morning Aleksey,

Your idea is very similar to an idea I had -- I guess we are experiencing some convergent evolution. I'd be very interested in understanding your project more. I'd be curious to know if you've done much testing with LoRa in dense foliage, and whether you've hit any real limitations. Reading your description, it sounds like perhaps you are using some mesh networking. Anyway -- it's nice to meet you and see we had similar ideas.

Chris

4 August 2025 8:20am

MS and PhD Opportunities in Ocean Engineering and Oceanography (Fall 2026)

24 July 2025 5:55am

Executive Director for the Open Science Hardware Foundation

19 July 2025 2:15pm

New open source Kinéis transmitter for €50

12 June 2025 10:12am

2 July 2025 11:59pm

Awesome news! Sorry if this question is addressed but I didn't see schematics on the page. If this is open source do you release the full PCB layout files? I can see this being a great base for many applications, including commercial operations.

9 July 2025 7:38am

Hi Jared,

That's great. There's lots of possibility for integration.

The SMD Breakout board schematics and Altium files are available below for the pinout to the board itself and to integrate into your products. We are publishing the SMD files together with a new Getting Started guide in the next few weeks to support this too. https://github.com/arribada/featherwings-argos-smd-hw/

14 July 2025 11:16pm

Amazing. Thanks for pointing them out. Didn't see it.

Tech for Impact Collaboration

2 July 2025 3:41am

Off-The-Shelf Drones & Open Source GIS Software for Dam Site Surveying?

15 April 2025 3:57pm

Drone Photogrammetry & GIS Advanced Course

Sean Hill

and 1 more

24 June 2025 9:31am

Drone Photogrammetry & GIS Intermediate Course

Sean Hill

and 1 more

24 June 2025 9:31am

BoutScout – Beyond AI for Images, Detecting Avian Behaviour with Sensors

24 June 2025 1:46am

Imageomics FuncaPalooza 2025

17 June 2025 6:21pm

How drones, AI & Open Source Software are being used to combat Alien Invasive Plants in South Africa

2 June 2025 10:08am

7 June 2025 9:36pm

Hi Ginevra, thank you! It's such a huge advantage to have tech tools available to us in conservation. Not just from an analysis point of view but also from a practical application view 😊

17 June 2025 1:26am

Aloha, this is a great project. Thanks for sharing. I have been looking for ideas to integrate machine learning with some of the conservation work we are engaged in here on Kauai. Thank you.

17 June 2025 11:00am

Thank you for your comment Chris! Using these tools has made a huge difference in the way we can monitor and manage Invasive Alien Vegetation. I hope you are able to integrate similar systems with your projects there. If you need any help, feel free to reach out!

Smart Drone to Tag Whales Project

8 June 2025 12:19pm

14 June 2025 12:19pm

I would love to hear updates on this if you have a mailing list or list of interested parties!

Is Crablante the New Korg?

15 June 2025 10:43am

Suggestions for finding event funding (for GOSH)?

9 June 2025 11:47am

Artificial Intelligence Meets Biodiversity Science: Mining Museum Labels

5 June 2025 4:37pm

WILDLABS AWARDS 2025 - Trapper Keeper - a scalable and energy-efficient open-source camera trap data infrastructure

30 May 2025 12:05pm

2 June 2025 10:24pm

Help needed : Overview of Image Analysis and Visualization from Camera Traps

2 June 2025 1:59pm

2 June 2025 2:17pm

This camera trap survey addresses the crucial need for a unified and comprehensive solution to improve data reliability and standardize monitoring techniques in wildlife research. Well done!

2 June 2025 3:18pm

This survey on camera trap use is a valuable effort to improve data quality and consistency in wildlife monitoring. Looking forward to the results and how they will help shape best practices and future research. Great initiative!

Mothbox wins 2025 WILDLABS Award!

22 May 2025 7:55pm

Software QA Topics

9 January 2025 12:00pm

19 May 2025 5:30am

Hi everyone,

What should we share or demo about Software Quality Assurance?

Alex Saunders and I, the two Software QA people at Wildlife Protection Solutions (WPS), are going to do a community call to share knowledge on software testing and test automation in the 3rd or 4th week of January.

We've listed a few QA topics that we could talk about in this 1-2 minute poll and would like your feedback on topic priority.

Thanks for your feedback and we look forward to connecting! We'll also post when we have an exact date and time pinned down.

Sounds like a great initiative—looking forward to it! I’d love to hear more about your real-world test automation setup, especially any tools or frameworks you’ve found effective at WPS. It’d also be helpful to see how QA fits into your dev workflow and any challenges you’ve faced specific to conservation tech. I just filled out the poll and can’t wait to see what topics get chosen. Thanks, Alex and team, for organizing this!

Weed-AI: Supporting the AI revolution in weed control

7 May 2025 10:58pm

Sustainable financing for open source conservation tech - Open Source Solutions + Funding and Finance Community Meeting

Pen-Yuan Hsing

and 5 more

1 May 2025 11:52am

OpenAgTech newsletter: Bringing you the latest in open-source tech for agriculture.

1 May 2025 9:24am

Open Source Agriculture Repository

1 May 2025 9:18am

10 June 2025 6:04pm

Such a great case study!!