The Latin America Community group is designed to create a dedicated regional hub within WILDLABS, where conservationists and technology enthusiasts from Latin America can come together to share knowledge, collaborate on projects, and build networks that support conservation efforts across the region.

This group is for individuals working in or passionate about conservation technology across Latin America. From researchers, conservationists, tech developers, and policy-makers to people just starting in the field, this community welcomes everyone interested in advancing conservation technology in the region.

A primary challenge faced by the Latin American conservation tech community is a lack of cohesive networking and communication channels specific to the region. Large geographic distances and varying levels of access to resources have made it difficult for people to connect and collaborate effectively. This group aims to address these issues by providing a central space where members can connect, find peers with similar interests, communicate more seamlessly, share resources, and build networks. It also encourages cross-border collaboration and helps bridge the gap between those with access to technology, knowledge, and resources and those in need of them.

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

El grupo de la Comunidad de América Latina está diseñado para crear un centro regional dedicado dentro de WILDLABS, donde conservacionistas y entusiastas de la tecnología de América Latina puedan reunirse para compartir conocimientos, colaborar en proyectos y construir redes que apoyen los esfuerzos de conservación en toda la región.

Este grupo está dirigido a personas que trabajan o sienten pasión por la tecnología para la conservación en América Latina. Desde investigadores, conservacionistas, desarrolladores de tecnología, formuladores de políticas, hasta personas que recién comienzan en el campo, esta comunidad da la bienvenida a todos aquellos interesados en avanzar la tecnología para la conservación en la región.

Uno de los principales desafíos que enfrenta la comunidad de tecnología para la conservación en América Latina es la falta de redes de comunicación y canales de colaboración cohesivos específicos para la región. Las grandes distancias geográficas y los diferentes niveles de acceso a recursos a menudo dificultan que las personas se conecten y colaboren de manera efectiva. Este grupo busca abordar estos problemas proporcionando un espacio central donde los miembros puedan conectarse, encontrar colegas con intereses similares, comunicarse de manera más fluida, compartir recursos y construir redes. También fomenta la colaboración sin fronteras y ayuda a cerrar la brecha entre quienes tienen acceso a tecnología, conocimientos y recursos, y quienes los necesitan.

Community Guidelines

When posting or commenting in this group, please write in both Spanish (or Portuguese) and English.

Image Credit: Banco de Imágenes del Instituto Humboldt

Group curators

- @jsulloa

- | He/Him

Instituto Humboldt & Red Ecoacústica Colombiana

Scientist and engineer developing smart tools for ecology and biodiversity conservation.

- 3 Resources

- 22 Discussions

- 7 Groups

WILDLABS & Wildlife Conservation Society (WCS)

I'm the Bioacoustics Research Analyst at WILDLABS. I'm a marine biologist with a particular interest in the acoustic behavior of cetaceans. I'm also a backend web developer, hoping to use technology to improve wildlife conservation efforts.

- 40 Resources

- 38 Discussions

- 33 Groups

- @TaliaSpeaker

- | She/her

WILDLABS & World Wide Fund for Nature/ World Wildlife Fund (WWF)

I'm the Executive Manager of WILDLABS at WWF

- 23 Resources

- 64 Discussions

- 31 Groups

- @Frank_van_der_Most

- | He, him

RubberBootsData

Field data app developer, with an interest in funding and finance

- 57 Resources

- 188 Discussions

- 9 Groups

- @GonzaloBravo

- | Dr.

Gonzalo Bravo is a Marine Biologist (BSc, MSc, PhD) and postdoctoral researcher at CONICET (Argentina). His research focuses on benthic biodiversity in Patagonian subtidal rocky reefs, with experience in non-destructive sampling and AI-based image analysis.

- 0 Resources

- 0 Discussions

- 5 Groups

Wildlife photographer with a focus on conservation. Collaborating with conservation projects around the world.

- 0 Resources

- 0 Discussions

- 6 Groups

- @Aurel

- | She/Her

Looking to reconcile biodiversity conservation and finance.

- 2 Resources

- 1 Discussions

- 10 Groups

- @EstebanICC

- | He/ him

- 0 Resources

- 0 Discussions

- 1 Groups

A Colombian biologist | Master of Engineering (MEng) | Master's in Data Science. Research Associate and Adjunct Professor at Universidad Icesi

- 1 Resources

- 4 Discussions

- 3 Groups

- @boninom

- | Marce

National Scientific and Technical Research Council of Argentina (CONICET)

I am a biologist specializing in herpetology at INIBIOMA-CONICET-UNComa. My research focuses on how climate change impacts amphibians, using passive acoustic monitoring to study key environmental factors like temperature and rainfall, integrating niche modeling and eco-physiology

- 0 Resources

- 2 Discussions

- 1 Groups

3point.xyz

Over 35 years of experience in biodiversity conservation worldwide, largely focused on forests, rewilding and conservation technology. I run my own business assisting nonprofits and agencies in the conservation community

- 6 Resources

- 69 Discussions

- 12 Groups

Universidad San Francisco de Quito

Biologist/Professor focusing on carnivore conservation in Ecuador

- 0 Resources

- 0 Discussions

- 11 Groups

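Un ambicioso proyecto internacional está usando transmisores satelitales para monitorear los viajes migratorios de ballenas francas australes, permitiendo entender sus sorprendentes patrones de desplazamiento en tiempo... // An ambitious international project is using satellite transmitters to monitor the migratory journeys of southern right whales, making it possible to understand their surprising movement patterns in...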

7 August 2025

Una misión pionera para conocer y proteger las profundidades del talud argentino // A pioneering mission to explore and protect the depths of the Argentine continental slope

31 July 2025

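El Instituto Humboldt está buscando un(a) Desarrollador(a) de Inteligencia Artificial que quiera aplicar su experiencia en Python y procesamiento de lenguaje natural para proteger la biodiversidad. // The Instituto Humboldt is looking for an Artificial Intelligence Developer who wants to apply their experience in Python and natural language processing to protect biodiversity.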

25 July 2025

In this case, you’ll explore how the BoutScout project is improving avian behavioural research through deep learning—without relying on images or video. By combining dataloggers, open-source hardware, and a powerful...

24 June 2025

Provides a comprehensive overview of new studies, giving insights into the most endangered ecosystem in the tropics. The book concentrates on four thematic areas such as LiDAR remote sensing, remote sensing and ecology...

15 June 2025

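La Universidad de Ingeniería y Tecnología (UTEC) está buscando cubrir nuevas vacantes en su Instituto Amazónico de Investigación para la Sostenibilidad (ASRI). // The Universidad de Ingeniería y Tecnología (UTEC) is looking to fill new vacancies at its Instituto Amazónico de Investigación para la Sostenibilidad (ASRI).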

12 June 2025

Article

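Programa de aceleración para emprendimientos que trabajan en la protección y restauración del Arrecife Mesoamericano. Incluye bootcamp, mentoría personalizada y networking para proyectos de impacto marino. // Acceleration program for ventures working on the protection and restoration of the Mesoamerican Reef. Includes a bootcamp, personalized mentoring, and networking for marine impact projects.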

2 June 2025

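La Fundación Camille Goblet ofrece becas para mujeres que trabajan en conservación de especies amenazadas. Si estás haciendo un posgrado y tu investigación está enfocada en conservación, esta puede ser una excelente... // The Fundación Camille Goblet offers scholarships for women working on the conservation of threatened species. If you are pursuing a graduate degree and your research focuses on conservation, this could be an excellent...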

30 May 2025

Financiamiento para proyectos de biodiversidad en América Latina y el Caribe // Funding for biodiversity projects in Latin America and the Caribbean

10 April 2025

$3 million in funding for NGOs in Brazil using AI for conservation / $ 3 milhões em financiamento para ONGs no Brasil que usam IA para conservação!

1 April 2025

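Actividad gratuita y online para Investigadores en ciencias marinas trabajando en América Latina con interés en aprender a programar en Python, R, y trabajo colaborativo. Último día para aplicar a OceanHackWeek en... // Free online activity for marine science researchers working in Latin America who are interested in learning to program in Python and R and in collaborative work. Last day to apply to OceanHackWeek en...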

31 March 2025

Funding

I have been a bit distracted these past months by my move from Costa Rica to Spain (all went well, thank you, I just miss the rainforest and the Ticos) and have to catch up on funding calls. Because I still have little...

28 March 2025

| Description | Activity | Replies | Groups | Updated |
|---|---|---|---|---|
| I just did a quick search and it doesn't look like there's a place where you can ship them in Mexico. However, at least in Mexico City, there are a bunch of collection... | | | Camera Traps, Latin America Community | 4 years 2 months ago |
| Dear all, Next November 2019 I will hold a aerial sowing workshop with Drones in Brazil, in Rondonopolis, in collaboration with the... | | | Drones, Latin America Community | 5 years 11 months ago |
| Monitoring and protecting vast protected areas and wildlife with limited resources and small teams is a huge challenge in Brazil and in... | | | Software Development, Latin America Community | 6 years 1 month ago |
| Hi Don, I agree, it's very intriguing. I think they're using the Remora. It looks like a solar powered GPS tracker. I couldn't find much more... | | | Marine Conservation, Latin America Community | 6 years 6 months ago |
| Dear Colleagues, The SMART Partnership, in collaboration with Flora & Fauna International and WCS Belize, is organizing and/or... | | | Software Development, Latin America Community | 7 years 11 months ago |
| hey Felix, great to hear from you! I'll definitely be popping by to find out more, thanks for sharing your booth details. As there are two tech focused symposiums... | | | Community Base, Latin America Community | 8 years ago |
| The police have tried to use New World Vultures to find dead bodies in Europe. The success was some what limited but not the fault of the birds. This does open up a whole area... | | | Animal Movement, Latin America Community | 9 years 5 months ago |
| http://news.mongabay.com/2015/12/mapbiomas-new-mapping-platform-will-track-annual-deforestation-in-brazil/ New mapping... | | | Geospatial, Latin America Community | 9 years 7 months ago |

Seeking Connections with Chile’s Wildlife Conservation

11 August 2025 6:28pm

Suggestions for research funds

10 August 2025 5:56pm

🐸 WILDLABS Awards 2025: Open-Source Solutions for Amphibian Monitoring: Adapting Autonomous Recording Devices (ARDs) and AI-Based Detection in Patagonia

27 May 2025 8:39pm

7 August 2025 10:02pm

Love this team <3

We would love to connect with teams also working on the whole AI pipeline: pretraining, fine-tuning, and deployment! Training of the models is in progress, and we know lots can be learned from your experiences!

Also, we are approaching the UI design and development from the software-on-demand philosophy. Why depend on third-party software, having to learn, adapt to, and comply with their UX and ecosystem? Thanks to agentic AI in our IDEs we can quickly and reliably iterate towards tools designed to satisfy our own specific needs and practices, putting the researcher first.

Your ideas, thoughts or critiques are very much welcome!

8 August 2025 1:20pm

Kudos for such an innovative approach! Integrating additional sensors with acoustic recorders is a brilliant step forward. I'm especially interested in how you tackle energy autonomy, which I personally see as the main limitation of AudioMoths.

Looking forward to seeing how your system evolves!

Siguiendo Ballenas: rastreo satelital desde el espacio revela sus rutas migratorias // Tracking right whales from outer space reveals their migratory routes

7 August 2025 10:26pm

Transmisión en vivo a 3.900 metros de profundidad // Live Broadcast from 3,900 Meters Deep

31 July 2025 2:26pm

WILDLABS AWARDS 2024 - Innovative Sensor Technologies for Sustainable Coexistence: Advancing Crocodilian Conservation and Ecosystem Monitoring in Costa Rica

11 July 2024 11:30pm

14 December 2024 6:41pm

¡Muchas gracias @vanereyes, apreciamos su apoyo! // Thank you very much @vanereyes, we appreciate your support!

4 February 2025 11:44am

Super interesting! I'm currently developing accelerometer sensors for fence perimeters in wildlife conservation centres. I think this is a really cool application of accelerometers; I would love to know what the sensor you developed for part 3 looked like, or what type of software/machine learning methods you've used. Currently my design is a cased Raspberry Pi Pico, combined with an accelerometer and ML decision trees in order to create a low-cost design. Perhaps there is something to be learnt from this project as well :)

4 February 2025 2:45pm

Wonderful video! Really impressive :)

Counting aggregated animals in orthomosaics?

11 July 2025 8:51pm

26 July 2025 6:59am

Thank you for sharing. Would love to learn a bit more about the data workflow.

Last year I tried using QGIS and a few existing models to count birds from orthomosaics of wading birds in Cambodia, but gave up after dismal results.

Búsqueda de desarrollador de IA - Instituto Humboldt

25 July 2025 3:49pm

Issue with SongBeam recorder

17 July 2025 9:40pm

24 July 2025 9:19pm

Hi Josept! Thank you for sharing your experience! This type of feedback is important for the community to know about when choosing what tech to use for their work. Would you be interested in sharing a review of SongBeam and the AudioMoth on The Inventory, our wiki-style database of conservation tech tools, R&D projects, and organizations? You can learn more here about how to leave reviews!

Encuentro virtual de la comunidad Latinoamerican

22 July 2025 3:48pm

PACE LAND DATA AND USER GROUP

8 July 2025 11:06am

8 July 2025 11:28am

The Jupyter notebook on projecting and formatting PACE OCI data is also available here!

21 July 2025 1:42pm

Very interesting! Thanks for posting this. I found the NASA ARSET Tutorial quite useful for an overview on PACE before delving into the data. Highly recommend it if you're new to hyperspectral and the PACE mission!

GBIF: Conectando datos abiertos de biodiversidad con conocimiento y acción

Vanesa Reyes

and 1 more

10 July 2025 8:32pm

Bioacústica de insectos para la conservación

3 July 2025 4:08pm

BoutScout – Beyond AI for Images, Detecting Avian Behaviour with Sensors

24 June 2025 1:46am

Remote Sensing of Tropical Dry Forests in the Americas

15 June 2025 10:38am

Smart Drone to Tag Whales Project

8 June 2025 12:19pm

14 June 2025 12:19pm

I would love to hear updates on this if you have a mailing list or list of interested parties!

Oportunidades laborales en investigación en la Amazonía | UTEC - ASRI

12 June 2025 4:56pm

Convocatoria abierta para liderar el grupo LATAM de WILDLABS

Vanesa Reyes

and 1 more

4 June 2025 4:51pm

Convocatoria abierta: MAR+Invest 2025 - Aceleración para soluciones de conservación de arrecifes

2 June 2025 1:13pm

Oportunidad de financiamiento para mujeres en conservación

30 May 2025 3:15pm

'Boring Fund' Workshop: AI for Biodiversity Monitoring in the Andes

5 February 2025 5:55pm

8 February 2025 4:29pm

Hey @benweinstein, this is really great. I bet there are better ways to find bofedales (puna fens) currently than what existed back in 2010. I'll share this with the Audubon Americas team.

2 May 2025 2:59pm

Hi everyone, following up here with a summary of our workshop!

The AI for Biodiversity Monitoring workshop brought together twenty-five participants to explore uses of machine learning for ecological monitoring. With sponsorship from the WILDLABS ‘Boring Fund’, we were able to support travel and lodging for a four-day workshop at the University of Antioquia in Medellín, Colombia. The goal was to bring together ecologists interested in AI tools and data scientists interested in working on AI applications from Colombia and Ecuador. Participants were selected based on potential impact on their community, their readiness to contribute to the topic, and a broad category of representation, which balanced geographic origin, business versus academic experience, and career progression.

Before the workshop began, I developed a website on GitHub that laid out the aims of the workshop and provided a public focal point for uploading information. I made a number of technical videos, covering subjects like VS Code + Copilot, both to inform participants and to create an atmosphere of early and easy communication. The WhatsApp group, the YouTube channel of video introductions, and a steady drumbeat of short tutorial videos were key in establishing expectations for the workshop.

The workshop material was structured around data collection methods: Day 1) Introduction and Project Organization, Day 2) Camera Traps, Day 3) Bioacoustics, and Day 4) Airborne Data. Each day I asked participants to install packages using conda, download code from GitHub, and actively support each other in solving small technical problems. The wide range of technical experience was key in developing peer support. I toyed with the idea of creating a JupyterHub or joint cloud working space, but I am glad that I resisted; it is important for participants to see how to solve package conflicts and the myriad other installation challenges on 25 different laptops.

We banked some early wins to help ease intimidation and create a good flow to technical training. I started with GitHub and version control because it is broadly applicable, incredibly useful, and satisfying to learn. Using examples from my own work, I focused on GitHub as a way both to contribute to machine learning for biology and to receive help. Building from these command line tools, we explored VS Code + Copilot for automated code completion, and had a lively discussion on how to balance the utility of these new features with transparency and comprehension.

Days two, three and four flew by, with a general theme of existing foundational models, such as BirdNET for bioacoustics, MegaDetector for camera traps, and DeepForest for airborne observation. A short presentation each morning was followed by a worked Python example making predictions using new data, annotation using Label Studio, and model development with PyTorch Lightning. There is a temptation to develop Jupyter notebooks that outline perfect code step by step, but I prefer to let participants work through errors and have a live coding strategy. All materials are in Spanish and updated on the website. I was proud to see the level of joint support among participants, and tried to highlight these contributions to promote autonomy and peer teaching.
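For readers who have not seen this kind of worked example, here is a minimal sketch of one small step that typically follows camera-trap prediction: filtering detector output by confidence. It is not taken from the workshop materials. It assumes results shaped like MegaDetector's batch-output JSON (an `images` list with per-image `detections`, plus a `detection_categories` map); the file names, scores, and the 0.5 threshold are made up for illustration.

```python
def animal_detections(results, min_conf=0.5):
    """Yield (file, conf, bbox) for 'animal' detections at or above min_conf.

    `results` is assumed to follow the MegaDetector batch-output layout;
    min_conf is an illustrative threshold, not a recommended value.
    """
    categories = results.get("detection_categories", {})
    for image in results.get("images", []):
        # Images that failed to process may carry no detections list.
        for det in image.get("detections") or []:
            is_animal = categories.get(det["category"]) == "animal"
            if is_animal and det["conf"] >= min_conf:
                yield image["file"], det["conf"], det["bbox"]


# Hypothetical sample data, hand-written for this sketch.
sample = {
    "detection_categories": {"1": "animal", "2": "person", "3": "vehicle"},
    "images": [
        {
            "file": "site_a/img_0001.jpg",
            "detections": [
                {"category": "1", "conf": 0.93, "bbox": [0.1, 0.2, 0.3, 0.4]},
                {"category": "2", "conf": 0.88, "bbox": [0.5, 0.5, 0.2, 0.2]},
            ],
        },
        {
            "file": "site_a/img_0002.jpg",
            "detections": [
                {"category": "1", "conf": 0.12, "bbox": [0.0, 0.0, 0.1, 0.1]},
            ],
        },
    ],
}

kept = list(animal_detections(sample, min_conf=0.5))
for file, conf, bbox in kept:
    print(file, conf, bbox)
```

In practice the dictionary would be loaded from the detector's output file with `json.load`, and the threshold would be chosen by checking a sample of images by hand.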

Sprinkled amongst the technical sessions, I had each participant create a two-slide talk, and I would randomly select from the group to break up sessions and help stir conversation. I took it as a good sign that I was often quietly pressured by participants to select their talk in our next random draw. While we had general technical goals and each day had one or two main lectures, I tried to be nimble, allowing space for suggestions. In response to feedback, we rerouted an afternoon to discuss biodiversity monitoring goals and data sources. Ironically, the biologists in the room later suggested that we needed to get back to code, and the data scientists said it was great. Weaving between technical and domain expertise requires an openness to change.

Boiling down my takeaways from this effort, I think there are three broad lessons for future workshops.

- The group dynamic is everything. Provide multiple avenues for participants to communicate with each other. We benefited from a smaller group of dedicated participants compared to inviting a larger number.

- Keep the objectives, number of packages, and size of sample datasets to a minimum.

- Foster peer learning and community development. Give time for everyone to speak. Step in aggressively as the arbiter of the schedule in order to allow all participants a space to contribute.

I am grateful to everyone who contributed to this effort both before and during the event to make it a success. Particular thanks go to Dr. Juan Parra for hosting us at the University of Antioquia, UF staff for booking travel, Dr. Ethan White for his support and mentorship, and Emily Jack-Scott for her feedback on developing course materials. Credit for the ideas behind this workshop goes to Dr. Boris Tinoco, Dr. Sara Beery for her efforts at CV4Ecology, and Dr. Juan Sebastian Ulloa. My co-instructors Dr. Jose Ruiz and Santiago Guzman were fantastic, and I’d like to thank ARM through the WILDLABS Boring Fund for its generous support.

2 May 2025 2:59pm

Connecting the Dots: Integrating Animal Movement Data into Global Conservation Frameworks

Lacey Hughey

and 3 more

30 April 2025 1:38am

Análise de Cluster no Kaleidoscope Pro: de Classificadores Simples a Avançados (Intermediário)

24 April 2025 7:03pm

Extração de Sinais Usando o Kaleidoscope Lite (Intermediário)

24 April 2025 7:01pm

Introducción al uso de Kaleidoscope Pro para Murciélagos (Principiante)

24 April 2025 6:54pm

Transformando el Sonido en Descubrimiento (Principiante)

24 April 2025 6:50pm

Reunión de la Comunidad Latinoamericana: Aplicaciones de Drones en el Monitoreo de Fauna

Vanesa Reyes

and 1 more

24 April 2025 4:08pm

Project Update — Nestling Growth App

22 April 2025 10:45pm

Introducción al uso de Kaleidoscope Pro para Murciélagos (Principiante)

18 April 2025 3:50pm

7 August 2025 9:27pm

Project Update — Sensors, Sounds, and DIY Solutions (sensores, sonidos y soluciones caseras)

We continue making progress on our bioacoustics project focused on the conservation of Patagonian amphibians, thanks to the support of WILDLABS. Here are some of the areas we’ve been working on in recent months:

(Seguimos avanzando en nuestro proyecto de bioacústica aplicada a la conservación de anfibios patagónicos, gracias al apoyo de WILDLABS. Queremos compartir algunos de los frentes en los que estuvimos trabajando estos meses.)

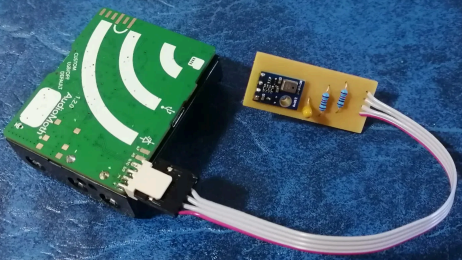

1. Hardware

One of our main goals was to explore how to improve AudioMoth recorders to capture not only sound but also key environmental variables for amphibian monitoring. We tested an implementation of the I2C protocol using bit banging via the GPIO pins, allowing us to connect external sensors. The modified firmware is already available in our repository:

👉 https://gitlab.com/emiliobascary/audiomoth

We are still working on managing power consumption and integrating specific sensors, but the initial tests have been promising.

(Uno de nuestros principales objetivos fue explorar cómo mejorar las grabadoras AudioMoth para que registren no sólo sonido, sino también variables ambientales clave para el monitoreo de anfibios. Probamos una implementación del protocolo I2C mediante bit banging en los pines GPIO, lo que permite conectar sensores externos. La modificación del firmware ya está disponible en nuestro repositorio:

https://gitlab.com/emiliobascary/audiomoth

Aún estamos trabajando en la gestión del consumo energético y en incorporar sensores específicos, pero los primeros ensayos son alentadores.)
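To make the idea of I2C bit banging concrete, the sketch below walks through the signalling in Python against simulated pins. It is not the firmware in the repository above, which runs on the AudioMoth's microcontroller. The `FakePins` class, the function names, and the sensor address `0x44` are hypothetical, and timing delays and clock stretching are left out.

```python
class FakePins:
    """Stand-in for two GPIO lines (SDA, SCL); records every level change."""

    def __init__(self):
        self.sda = 1
        self.scl = 1
        self.trace = []  # (line, level) tuples in the order they were driven

    def set(self, line, level):
        setattr(self, line, level)
        self.trace.append((line, level))


def i2c_start(pins):
    # START condition: SDA falls while SCL is high.
    pins.set("sda", 1)
    pins.set("scl", 1)
    pins.set("sda", 0)
    pins.set("scl", 0)


def i2c_stop(pins):
    # STOP condition: SDA rises while SCL is high.
    pins.set("sda", 0)
    pins.set("scl", 1)
    pins.set("sda", 1)


def i2c_write_byte(pins, byte):
    # Shift out 8 bits, most significant first; SDA changes only while SCL is low.
    for shift in range(7, -1, -1):
        pins.set("sda", (byte >> shift) & 1)
        pins.set("scl", 1)
        pins.set("scl", 0)
    # Ninth clock: release SDA so the device can acknowledge by pulling it low.
    pins.set("sda", 1)
    pins.set("scl", 1)
    ack = pins.sda == 0  # always False here: no device is attached to FakePins
    pins.set("scl", 0)
    return ack


def bits_clocked(trace):
    """Return the SDA level seen at each rising edge of SCL."""
    sda, scl, bits = 1, 1, []
    for line, level in trace:
        if line == "sda":
            sda = level
        else:
            if level == 1 and scl == 0:
                bits.append(sda)
            scl = level
    return bits


pins = FakePins()
i2c_start(pins)
# 7-bit address 0x44 (hypothetical sensor) followed by the write bit (0).
acked = i2c_write_byte(pins, (0x44 << 1) | 0)
i2c_stop(pins)
print(bits_clocked(pins.trace)[:8])
```

On real hardware the two `set` calls would toggle open-drain GPIO pins with pull-up resistors, and each transition would be followed by a short delay to respect the bus speed.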

2. Software (AI)

We explored different strategies for automatically detecting vocalizations in complex acoustic landscapes.

BirdNET is by far the most widely used, but we noted that it’s implemented in TensorFlow — a library that is becoming somewhat outdated.

This gave us the opportunity to reimplement it in PyTorch (currently the most widely used and actively maintained deep learning library) and begin pretraining a new model using AnuraSet and our own data. Given the rapid evolution of neural network architectures, we also took the chance to experiment with Transformers — specifically, Whisper and DeltaNet.

Our code and progress will be shared soon on GitHub.

(Exploramos diferentes estrategias para la detección automática de vocalizaciones en paisajes acústicos complejos. La más utilizada por lejos es BirdNET, aunque notamos que está implementada en TensorFlow, una librería que está quedando al margen. Aprovechamos la oportunidad para reimplementarla en PyTorch (la librería de deep learning con mayor mantenimiento y más popular hoy en día) y realizar un nuevo pre-entrenamiento basado en AnuraSet y nuestros propios datos. Dada la rápida evolución de las arquitecturas de redes neuronales disponibles, tomamos la oportunidad para implementar y experimentar con Transformers. Más específicamente Whisper y DeltaNet. Nuestro código y avances irán siendo compartidos en GitHub.)

3. Miscellaneous

Alongside hardware and software, we’ve been refining our workflow.

We found interesting points of alignment with the “Safe and Sound: a standard for bioacoustic data” initiative (still in progress), which offers clear guidelines for folder structure and data handling in bioacoustics. This is helping us design protocols that ensure organization, traceability, and future reuse of our data.

We also discussed annotation criteria with @jsulloa to ensure consistent and replicable labeling that supports the training of automatic models.

We're excited to continue sharing experiences with the Latin America Community. We know we share many of the same challenges, but also great potential to apply these technologies to conservation in our region.

(Además del trabajo en hardware y software, estamos afinando nuestro flujo de trabajo. Encontramos puntos de articulación muy interesantes con la iniciativa “Safe and Sound: a standard for bioacoustic data” (todavía en progreso), que ofrece lineamientos claros sobre la estructura de carpetas y el manejo de datos bioacústicos. Esto nos está ayudando a diseñar protocolos que garanticen orden, trazabilidad y reutilización futura de la información. También discutimos criterios de etiquetado con @jsulloa, para lograr anotaciones consistentes y replicables que faciliten el entrenamiento de modelos automáticos. Estamos entusiasmados por seguir compartiendo experiencias con Latin America Community, con quienes sabemos que compartimos muchos desafíos, pero también un enorme potencial para aplicar estas tecnologías a la conservación en nuestra región.)
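As an illustration of what a consistent folder structure for bioacoustic data can look like in practice, here is a minimal sketch that derives an archive path from a recording's file name. The site/recorder/date layout and the identifiers are hypothetical and are not taken from the "Safe and Sound" standard or from the project's own protocol. It also assumes recordings are named with a `YYYYMMDD_HHMMSS` timestamp.

```python
from datetime import datetime
from pathlib import PurePosixPath


def archive_path(site, recorder_id, filename):
    """Build site/recorder/YYYY-MM-DD/filename from a timestamped WAV name.

    Raises ValueError if the file name does not match YYYYMMDD_HHMMSS,
    so badly named files are caught before they enter the archive.
    """
    stem = PurePosixPath(filename).stem
    recorded_at = datetime.strptime(stem, "%Y%m%d_%H%M%S")
    day_folder = recorded_at.strftime("%Y-%m-%d")
    return PurePosixPath(site) / recorder_id / day_folder / filename


# Hypothetical site and recorder identifiers.
target = archive_path("laguna_01", "AM07", "20250412_213000.WAV")
print(target)
```

Deriving the path from the file name, rather than typing it by hand, keeps the archive traceable: every recording's location can be recomputed from its metadata.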