Powerful conservation tech tools are gathering more data in the field than ever before. But without equally powerful and effective tools for managing and processing that data, it cannot reach its full potential for impact, no matter how groundbreaking or interesting it is.

Data management can sometimes seem intimidating to conservationists, especially those just getting started in the world of conservation tech or experimenting with new data collection methods. While every community member's workflow and preferred data management and processing methods may be different, this group can serve as a resource to explore what works for others, share your own advice, and develop new strategies together.

Below are a few WILDLABS events dealing with datasets collected from various conservation tech tools:

Nicole Flores: How do I get started with Wildlife Insights?

Jamie Macaulay: How do I analyse large acoustic datasets using PAMGuard?

Sarah Davidson: Tools for Bio-logging Data in Conservation

Whatever conservation tech tools you work with, and whatever your preferred data management methods, we hope you'll find something helpful and effective in this group when you become a member!

Scaling biodiversity monitoring using smart sensors and AI pipelines


- @ahmedjunaid (He/His)

Zoologist, Ecologist, Herpetologist, Conservation Biologist


Max Planck Institute of Animal Behavior

Project leader of MoveApps | Movement ecology


- @frides238 (She/her)

Hi! I am Frida Ruiz, a current Mechanical Engineering undergraduate student very interested in habitat restoration & conservation. I am excited to connect with others and learn about technology applications within applied ecology & potential research opportunities.


- @Robincrocs (He/Him//El//Ele)

Wildlife biologist working with caimans and crocodiles


PhD student at the University of Wuerzburg, working on the influence of climate change on animal migration, especially the Northern Bald Ibis.


- @StephODonnell (She / Her)


Associate Wildlife Biologist, M.S. Student


I am a conservation technology advisor with New Zealand's Department of Conservation. I have experience in developing remote monitoring tech, sensors, remote comms and data management.


- @daviana (she/her)

PhD student at Stanford University studying marine migratory species and human-wildlife interactions through biologging, remote sensing, and participatory science.


- @erinconnolly (She/her)

University College London (UCL)

PhD Student at UCL's People and Nature Lab. Studying human-livestock-wildlife coexistence in the Greater Maasai Mara Ecosystem, Kenya.


HawkEars is a deep learning model designed specifically to recognize the calls of 328 Canadian bird species and 13 amphibians.

13 May 2025

Hi all, I am working on detecting causal relationships between land surface dynamics and animal movement using satellite-based Earth observation data. As this might be your expertise, I kindly ask for your support...

8 May 2025

The Convention on the Conservation of Migratory Species (CMS) is seeking information on existing databases relevant for animal movement. If you know of a database that should be included, please complete the survey to...

30 April 2025

Conservation International is proud to announce the launch of the Nature Tech for Biodiversity Sector Map, developed in partnership with the Nature Tech Collective!

1 April 2025

Funding

I have been a bit distracted over the past months by my move from Costa Rica to Spain (all went well, thank you; I just miss the rainforest and the Ticos) and have to catch up on funding calls. Because I still have little...

28 March 2025

Modern GIS is open technology, scalable, and interoperable. How do you implement it? [Header image: kepler.gl]

12 March 2025

Join the FathomNet Kaggle Competition to show us how you would develop a model that can accurately classify varying taxonomic ranks!

4 March 2025

Temporary contract | 36 months | Luxembourg

3 March 2025

Up to 48 months contract - 14+22 (+12) | Belvaux

25 February 2025

Up to 6 months internship position to work on DiMON project which aims to develop a non-lethal, compact device that, when coupled with traditional entomological traps, captures high-resolution, multi-view insect images...

25 February 2025

We are hiring for a customer support / marketing specialist.

20 February 2025

We are looking for a person with programming skills in R and/or Python.

4 February 2025



Recently updated products

| Description | Groups | Updated |
|---|---|---|
| Hi all, We're excited to welcome Siyu Yang to Tech Tutors to chat about getting started with Megadetector! For all you AI... | AI for Conservation, Camera Traps, Data management and processing tools | 3 years 10 months ago |
| The GBIF backbone taxonomy is composed of Catalogue of Life, ITIS, the Red List and dozens of other checklists. It's just been updated again, but this blogpost from a 2019... | Data management and processing tools | 4 years 1 month ago |
| Dan's comments about the need for technologists and conservationists to manage and share (properly annotated) data struck a chord with me, it was right at the end of the... | AI for Conservation, Data management and processing tools | 4 years 3 months ago |
| Hi all, For anyone still looking, the recording and notes from this event are available here. Talia | Data management and processing tools | 5 years 10 months ago |
| Oh very interesting, thanks for sharing, Ben. Are you or your team presenting at all, or are you going to soak in all the information? Easy link for anyone interested:... | Data management and processing tools | 6 years 8 months ago |
| BirdLife International are conducting a global audit of biodiversity monitoring to identify gaps in data, coverage and capacity in long-... | Data management and processing tools | 6 years 8 months ago |
| Come and learn about the work of UNEP-WCMC's informatics team on Wednesday the 18th May at 12:30 in room 1.25B at the David... | Data management and processing tools | 9 years ago |

The 100KB Challenge!

7 February 2025 11:47am

20 February 2025 9:13am

It draws ~500 mA peak current and has a similar power profile to the current RockBLOCK product, in that it needs a lot of current for a short period of time (to undertake the transmission); we include onboard circuitry to help smooth this over. I'll be able to share more details once the product is officially launched!
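To see why a short, high-current burst matters for a battery-powered logger, here is a rough power-budget sketch. Only the ~500 mA peak comes from the post above; the burst length, sleep current, supply current limit, allowed voltage droop and battery capacity are hypothetical placeholders, not specifications of the product. The capacitor estimate uses I = C·dV/dt for the share of the burst the supply cannot deliver, which is the general idea behind "smoothing" circuitry, not a description of this particular design.

```python
def average_current_ma(peak_ma: float, burst_s: float, idle_ma: float, period_s: float) -> float:
    """Time-weighted mean current over one reporting period (one burst plus idle time)."""
    if not 0 <= burst_s <= period_s:
        raise ValueError("burst must fit inside the period")
    return (peak_ma * burst_s + idle_ma * (period_s - burst_s)) / period_s


def buffer_capacitance_f(peak_a: float, supply_limit_a: float, burst_s: float, max_droop_v: float) -> float:
    """Capacitance covering the part of the burst the supply cannot deliver.

    From I = C * dV/dt:  C = (I_peak - I_supply) * t_burst / dV_allowed.
    Ignores ESR and leakage, so treat the result as a lower bound.
    """
    shortfall_a = max(peak_a - supply_limit_a, 0.0)
    return shortfall_a * burst_s / max_droop_v


def battery_life_days(capacity_mah: float, avg_ma: float) -> float:
    """Idealised runtime; ignores self-discharge, temperature and cut-off voltage."""
    return capacity_mah / avg_ma / 24.0


if __name__ == "__main__":
    # Hypothetical: a 2 s, 500 mA transmission once per hour, 0.05 mA while asleep.
    avg = average_current_ma(500, 2, 0.05, 3600)
    print(f"average current: {avg:.3f} mA")
    print(f"runtime on 3000 mAh: {battery_life_days(3000, avg):.0f} days")
    # Hypothetical: supply limited to 100 mA, at most 0.25 V droop during the burst.
    print(f"buffer capacitance: {buffer_capacitance_f(0.5, 0.1, 2, 0.25):.1f} F")
```

The point of the arithmetic is that a burst this short barely moves the average current, but the peak still has to come from somewhere, which is why the buffering matters more than the raw energy budget.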

20 February 2025 9:31am

Hi Dan,

Not right now, but I can envision many uses. A key problem in RS is data streams for validation and training of ML models; it's really not yet a solved problem. Any kind of system designed to be deployed and "forgotten" while it collects and streams data is a good opportunity.

If you want we can have a talk so you tell me about what you developed and I'll see if it fits future projects.

All the best

INTERNSHIP FOR COMPUTER VISION BASED INSECT DETECTION AND CLASSIFICATION

25 February 2025 10:46am

Camera Trap Data Visualization Open Question

4 February 2025 3:00pm

12 February 2025 12:31pm

Hey Ed!

Great to see you here and thanks a lot for your thorough answer.

We will be checking out Trapper for sure - cc @Jeremy_ ! A standardized data exchange format like Camtrap DP makes a lot of sense and we have it in mind to build the first prototypes.

Our main requirements are the following:

- Integrate with the camtrap ecosystem (via standardized data formats)

- Make it easy to run for non-technical users (most likely an Electron application that works across operating systems).

- Make it useful to explore camtrap data and generate reports

In the first prototyping stage, it is useful for us to keep it lean while keeping in mind the interface (data exchange format) so that we can move fast.

Regards,

Arthur

12 February 2025 1:36pm

Quick question on this topic, to take advantage of those who already know a lot about it. Once you have extracted all your camera data and gone through the AI object detection phase that identifies the animal types, which file format, holding the time, location and labels for the photos, do most people consider the most useful? I imagine it's whatever format is supported by the most expressive visualization software. Is this correct?

A quick look at the Trapper format suggested to me that it holds metadata from the camera traps, used to perform the AI matching phase. But it was a quick look; maybe it's something else? Does the Trapper format also hold the labelled results? (I might actually be asking the same question as the person who started this thread, just in different words.)
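One way to see how a standardized exchange format such as Camtrap DP (mentioned earlier in this thread) answers the "time + location + labels" question: as I understand it, location lives in a deployments table and time and labels live in an observations table, linked by `deploymentID`, so the flat view most visualization tools want is a join. The sketch below uses Python's `csv` module on tiny in-memory tables. The column names follow Camtrap DP as far as I recall (`deploymentID`, `latitude`, `longitude`, `eventStart`, `scientificName`, `count`), but check them against the published specification before relying on them; the rows are made up for illustration.

```python
import csv
import io

# Made-up example rows; column names modeled on Camtrap DP (verify against the spec).
DEPLOYMENTS_CSV = """deploymentID,latitude,longitude
dep1,51.50,-0.12
dep2,51.60,-0.20
"""

OBSERVATIONS_CSV = """observationID,deploymentID,eventStart,scientificName,count
obs1,dep1,2025-02-01T06:15:00Z,Vulpes vulpes,1
obs2,dep2,2025-02-01T22:40:00Z,Meles meles,2
obs3,dep1,2025-02-02T05:05:00Z,Capreolus capreolus,1
"""


def flatten(deployments_csv: str, observations_csv: str) -> list[dict]:
    """Join each observation to its deployment so every row carries time, location and label."""
    deployments = {row["deploymentID"]: row for row in csv.DictReader(io.StringIO(deployments_csv))}
    rows = []
    for obs in csv.DictReader(io.StringIO(observations_csv)):
        dep = deployments[obs["deploymentID"]]  # KeyError flags an observation with no deployment
        rows.append({
            "timestamp": obs["eventStart"],
            "latitude": float(dep["latitude"]),
            "longitude": float(dep["longitude"]),
            "scientificName": obs["scientificName"],
            "count": int(obs["count"]),
        })
    return rows


if __name__ == "__main__":
    for row in flatten(DEPLOYMENTS_CSV, OBSERVATIONS_CSV):
        print(row)
```

Keeping the tables separate in the exchange format avoids repeating coordinates on every observation; the flat table is then a derived view for plotting or reporting.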

12 February 2025 2:04pm

Another question. Right now, almost all camera traps trigger on either PIR sensors or small AI models. Small AI models tend to detect and recognise animals accurately only at close range, where the animal appears very large, and I doubt that even in those circumstances small models avoid making a lot of classification errors (I expect they do make them, and the errors are simply sorted out back at the office, so to speak). PIR sensors typically only see animals within roughly 6-10 m. An elephant might be detected a bit further away; small animals only at even closer range.

But what about when camera traps can reliably see and recognise objects across a whole field, perhaps hundreds of meters?

Then, in principle, you wouldn't have to deploy as many traps, for a start. But I would expect you would need a different approach to reporting and visualizing this, as the coordinates of the trap itself are not going to give you much information. We would potentially have much more accurate and rich biodiversity information.

Maybe it's even possible to determine to a greater degree of accuracy where several different animals from the same camera trap image are spatially located, by knowing the 3D layout of what the camera can see and the location and size of the animal.
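The idea of locating an animal from its apparent size can be sketched with a simple pinhole-camera model: distance ≈ focal length in pixels × real object height / object height in the image, and the horizontal bearing comes from how far the bounding box sits from the image centre. Everything here is an assumption for illustration: an undistorted (non-fisheye) lens, a roughly level camera, a known typical body height for the species, a known camera heading, and an equirectangular approximation for converting metres into latitude/longitude over short ranges. Real deployments would need lens calibration and would carry considerable uncertainty, especially since body height varies with posture.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def focal_length_px(image_width_px: int, horizontal_fov_deg: float) -> float:
    """Focal length in pixels from the horizontal field of view (pinhole model)."""
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def estimate_distance_m(f_px: float, real_height_m: float, bbox_height_px: float) -> float:
    """Similar triangles: distance = f * H_real / h_image (upright animal, level camera)."""
    return f_px * real_height_m / bbox_height_px


def bearing_deg(f_px: float, image_width_px: int, bbox_center_x_px: float, camera_heading_deg: float) -> float:
    """Compass bearing to the animal: camera heading plus the bbox centre's angle off the optical axis."""
    offset_deg = math.degrees(math.atan((bbox_center_x_px - image_width_px / 2) / f_px))
    return (camera_heading_deg + offset_deg) % 360


def project(lat: float, lon: float, distance_m: float, bearing: float) -> tuple[float, float]:
    """Move from (lat, lon) by distance and bearing; equirectangular approximation for short ranges."""
    north_m = distance_m * math.cos(math.radians(bearing))
    east_m = distance_m * math.sin(math.radians(bearing))
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat))))
    return lat + dlat, lon + dlon


if __name__ == "__main__":
    # All inputs hypothetical: 4000 px wide frame, 60 degree lens, 1.2 m tall animal, 25 px tall box.
    f = focal_length_px(4000, 60.0)
    d = estimate_distance_m(f, 1.2, 25)
    b = bearing_deg(f, 4000, 3100, camera_heading_deg=90.0)
    print(f"~{d:.0f} m at bearing {b:.1f} deg ->", project(78.2, 15.6, d, b))
```

Each detection in an image would yield its own (latitude, longitude) estimate, which is exactly the "multiple coordinates per image" case the post raises.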

I expect current camera trap data formats may fall short of expressing that information in a sufficiently useful way, given how much more information is available in principle; there could be multiple coordinates per species in each image that need to be recorded.

I'm likely going to be confronted with this soon, as the systems I build use state-of-the-art models with large numbers of parameters that can recognise species over much greater distances. In a recent discussion here, I showed detection of a polar bear at a distance of 130-150 m.

Right now, I would say it's unknown how much more information about species we will be able to gather with this approach, as images have not been triggered this way until now. Maybe it's far more than we would expect? We have no idea right now.

Paper: Technology's social and structural effects in environmental organizations

10 February 2025 6:00am

Collecting interesting resources to visualise spatio-temporal data from wildlife observations

9 February 2025 12:21pm

🌍 explorer.land Beginners Webinar: Create your first project and funding opportunity

6 February 2025 1:05pm

Machine learning for bird pollination syndromes

25 November 2024 7:30am

3 January 2025 3:55am

Hi @craigg, my background is machine learning and deep neural networks, and I'm also actively involved with developing global geospatial ecological models, which I believe could be very useful for your PhD studies.

First of all to your direct challenges, I think there will be many different approaches, which could serve more or less of your interests.

As one idea that came up, I think it will be possible in the coming months, through a collaboration, to "fine-tune" a general purpose "foundation model" for ecology that I'm developing with University of Florida and Stanford University researchers. More here.

You may also find the 1+ million plant trait inferences, searchable by native plant habitats at Ecodash.ai, to be useful. A collaborator at Stanford is actually from South Africa, and I was just about to send him this, e.g. https://ecodash.ai/geo/za/06/johannesburg

I'm happy to chat about this, just reach out! I think there could also be a big publication in Nature (or something nice) by mid-2025, with dozens of researchers demonstrating a large number of applications of the general AI techniques I linked to above.

6 February 2025 9:57am

We are putting together a special issue in the journal Ostrich: Journal of African Ornithology and are welcoming (review) papers on the use of AI in bird research. https://www.nisc.co.za/news/202/journals/call-for-papers-special-issue-on-ai-and-ornithology

Giving different types of labeled data to the community - solutions?

5 February 2025 4:19pm

Free/open-source app for field data collection

6 December 2024 2:04pm

4 February 2025 3:52pm

Awesome, thank you!

4 February 2025 3:57pm

Thanks! Essentially, field technicians, students, researchers etc. go out into the field and find one of our study groups, and from early in the morning until evening the researchers record the behaviour of individual animals at short intervals (e.g., their individual traits like age-sex class, ID, what the animal is doing, how many conspecifics it has within a certain radius, and what kind of food the animal is eating if it happens to be foraging). Right now things work well in our system, but we are using an app that is somewhat expensive, so we want to move towards open-source.
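Whichever open-source app ends up being used, it can help to pin down the record structure first, since any tool will need to capture roughly the same fields. Below is a minimal sketch of one focal-sample record as a Python dataclass, with CSV export and a couple of consistency checks. The field names, example codes and validation rules are hypothetical, derived only from the fields listed in the post (ID, age-sex class, activity, neighbours within a radius, food item).

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime
from typing import Optional


@dataclass
class FocalSample:
    """One instantaneous sample of a focal individual (hypothetical schema)."""
    timestamp: datetime
    observer: str
    group_id: str
    animal_id: str
    age_sex_class: str               # e.g. "adult female"; the coding scheme is study-specific
    activity: str                    # e.g. "foraging", "resting"
    neighbours_within_radius: int    # count of conspecifics inside radius_m
    radius_m: float
    food_item: Optional[str] = None  # only recorded while foraging

    def __post_init__(self):
        if self.neighbours_within_radius < 0:
            raise ValueError("neighbour count cannot be negative")
        if self.food_item and self.activity != "foraging":
            raise ValueError("food_item is only recorded while foraging")


def to_csv(samples: list[FocalSample]) -> str:
    """Serialise samples to CSV with ISO 8601 timestamps; missing food items become empty cells."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(FocalSample)])
    writer.writeheader()
    for s in samples:
        row = asdict(s)
        row["timestamp"] = s.timestamp.isoformat()
        writer.writerow(row)
    return buf.getvalue()
```

Validating at entry time (rather than cleaning later) is one reason a defined schema like this is worth settling before choosing the app.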

4 February 2025 4:26pm

Thanks! I am familiar with EarthRanger but wasn't aware it could be used for behavioural data collection

Technical Assistant (m/f/d) | Moveapps

4 February 2025 8:32am

Webinar: Wildlife Drones’ Dragonfly – Revolutionizing VHF Tracking Technology

3 February 2025 4:31am

Deliver stronger VM0047-aligned Nature-Based Solutions

31 January 2025 9:30am

The Minor Foundation for Major Challenges calls for "new and unexpected ways of communicating the need for transitioning to a low-carbon economy"

29 January 2025 9:23pm

Accessing the Global Register of Introduced and Invasive Species (GRIIS)

25 January 2025 4:39pm

Nature Tech Unconference

Living Data 2025

16 January 2025 6:30pm

Webinar: Drone-based VHF tracking for Wildlife Research and Management

9 January 2025 11:45pm

Which LLMs are most valuable for coding/debugging?

25 September 2024 5:48pm

4 October 2024 7:53pm

Thanks, Lampros!

29 October 2024 11:10am

When it comes to coding and debugging, several large language models (LLMs) stand out for their value. Here are a few of the most valuable LLMs for these tasks:

1. OpenAI's Codex: This model is specifically trained for programming tasks, making it excellent for generating code snippets, suggesting improvements, and even debugging existing code. It powers tools like GitHub Copilot, which developers find immensely helpful.

2. Google's PaLM: Known for its versatility, PaLM excels in understanding complex queries, making it suitable for coding-related tasks as well. Its ability to generate and refine code snippets is particularly useful for developers.

3. Meta's LLaMA: This model is designed to be adaptable and can be fine-tuned for specific coding tasks. Its open-source nature allows developers to customize it according to their needs, making it a flexible option for coding and debugging.

4. Mistral: Another emerging model that shows promise in various tasks, including programming. It’s being recognized for its capabilities in generating and understanding code.

These LLMs are gaining traction not just for their coding capabilities but also for their potential to streamline the debugging process, saving developers time and effort. If you want to dive deeper into the features and strengths of these models, you can check out the full article here: Best Open Source Large Language Models LLMs

9 January 2025 8:51pm

thanks kristy! super helpful list.

Video evidence for the evaluation of behavioral state predictions

17 December 2024 11:02am

19 December 2024 11:53am

Currently, the main focus is visual footage as we don't render audio data in the same way as we do for acceleration (also: the highly different frequencies can be hard to show sensibly side by side).

In this sense, yes: the new module features 'quick adjust knobs' for time shifts. You can hover over a timestamp and use a combination of Shift/Control and the mouse wheel to adjust the offset of the video by 1/10/60 seconds, or simply enter the target timestamp manually down to the millisecond. This work can also be saved in a custom mapping file to continue the synchronisation work later on.

19 December 2024 12:59pm

But no "time scaling" adjustment to correct a video that runs too "slow" or too "fast"?

19 December 2024 4:07pm

No, not yet. The player we attached does support slower/faster replay up to a certain precision, but I'm not sure that this will be sufficiently precise for the kind of offsets we are talking about. Adding an option on the frontend to adjust this is quite easy, but understanding the impact of this on internal timestamp handling will add a level of complexity that we need to experiment with first.

As you said, for a reliable estimate on this kind of drift we need at least 2 distinct synchronized markers with sufficient distance to each other, e.g. a precise start timestamp and some recognizable point event later on.

I fully agree that providing an easy-to-use solution makes sense. We'll definitely look into this.
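The two-marker approach described above can be written as a linear clock model: reference_time = scale × video_time + offset. With two synchronised markers, `scale` captures drift (a video clock running too fast or too slow) and `offset` captures the constant shift; with more than two markers, a least-squares fit averages out error in picking each marker. This is a generic sketch, not how Firetail implements synchronisation, and the marker times in the usage note are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClockModel:
    """Linear mapping reference_time = scale * video_time + offset (all in seconds)."""
    scale: float
    offset: float

    def to_reference(self, video_t: float) -> float:
        return self.scale * video_t + self.offset

    def drift_ppm(self) -> float:
        """Clock-rate error in parts per million (positive: the video clock runs slow)."""
        return (self.scale - 1.0) * 1e6


def fit_two_markers(video_a: float, ref_a: float, video_b: float, ref_b: float) -> ClockModel:
    """Solve ref = scale * video + offset exactly from two matched markers."""
    if video_b == video_a:
        raise ValueError("markers must be at different video times")
    scale = (ref_b - ref_a) / (video_b - video_a)
    return ClockModel(scale, ref_a - scale * video_a)


def fit_least_squares(pairs: list[tuple[float, float]]) -> ClockModel:
    """Ordinary least-squares line through (video_time, reference_time) marker pairs."""
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two markers")
    mean_v = sum(v for v, _ in pairs) / n
    mean_r = sum(r for _, r in pairs) / n
    sxx = sum((v - mean_v) ** 2 for v, _ in pairs)
    if sxx == 0:
        raise ValueError("markers must be at different video times")
    sxy = sum((v - mean_v) * (r - mean_r) for v, r in pairs)
    scale = sxy / sxx
    return ClockModel(scale, mean_r - scale * mean_v)
```

For example, if a start marker at video 0 s matches reference 1000 s and a later event at video 3600 s matches reference 4603.6 s, the fit gives scale 1.001 (1000 ppm drift), so a frame at video 1800 s maps to about 2801.8 s rather than the 2800 s a pure offset would give.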

Announcement of Project SPARROW

18 December 2024 8:01pm

3 January 2025 6:48pm

Firetail 13 - now available

10 December 2024 10:55am

13 December 2024 3:31am

Thank you so much for looking into this issue quickly! Much appreciated.

17 December 2024 10:40am

I promised to keep you updated, and the article is now available here:

17 December 2024 10:42am

and a short contribution in this e-obs setup, where we used Firetail VideoSync to analyze motion-triggered camera footage:

Detecting Thrips and Larger Insects Together

16 December 2024 1:14pm

16 December 2024 2:32pm

16 December 2024 2:36pm

Yeah, I would expect that you might need higher resolution if the critters are very small. It still might just be a matter of lens choice, but I'm not up on lens differences of this size, so I don't know how hard it would be.

16 December 2024 2:48pm

So, I updated the text a bit with images cropped at 100% zoom :) We are already happy with the time reduction we got, but we would like to get at least a 90% time reduction instead of 70% :))) We know that a very expensive, high-power camera could probably do it, so one approach we are considering is taking a closer macro picture with a cellphone of, say, 1/3 or 1/4 of the sticky paper and using that data instead of the whole sheet... or taking 2-3 pictures (but we don't want to waste time stitching the images together).
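A related option that avoids both extra photos and stitching is to split one high-resolution photo of the sticky paper into overlapping tiles and run the detector on each tile, so tiny insects such as thrips cover more of the model's input. A minimal stdlib sketch that only computes crop boxes, in the `(left, top, right, bottom)` form that Pillow's `Image.crop` accepts; the tile size and overlap are hypothetical values to tune, and merging duplicate detections in the overlap zones (for example with non-maximum suppression) is left out.

```python
def tile_starts(length: int, tile: int, overlap: int) -> list[int]:
    """Start offsets along one axis so tiles of size `tile` cover [0, length) with the given overlap."""
    if tile <= overlap:
        raise ValueError("tile must be larger than overlap")
    if length <= tile:
        return [0]
    step = tile - overlap
    starts = list(range(0, length - tile + 1, step))
    if starts[-1] != length - tile:  # add a final tile flush with the far edge
        starts.append(length - tile)
    return starts


def tile_boxes(width: int, height: int, tile: int = 1024, overlap: int = 128) -> list[tuple[int, int, int, int]]:
    """(left, top, right, bottom) crop boxes covering the whole image, row by row."""
    boxes = []
    for top in tile_starts(height, tile, overlap):
        for left in tile_starts(width, tile, overlap):
            boxes.append((left, top, min(left + tile, width), min(top + tile, height)))
    return boxes


def to_full_image(det_box: tuple[int, int, int, int], tile_box: tuple[int, int, int, int]) -> tuple[int, int, int, int]:
    """Shift a detection box given in tile pixels back into full-image pixels."""
    left, top, _, _ = tile_box
    x0, y0, x1, y1 = det_box
    return (x0 + left, y0 + top, x1 + left, y1 + top)
```

Whether this reaches the 90% target depends on how much per-tile inference time costs compared with manual review, so it is worth timing on a few sheets first.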

AI Researcher / Doctoral Candidate

11 December 2024 1:45pm

Mirror images - to annotate or not?

5 December 2024 8:32pm

7 December 2024 3:18pm

I will send you a DM on LinkedIn and try to find a time to chat

8 December 2024 12:36pm

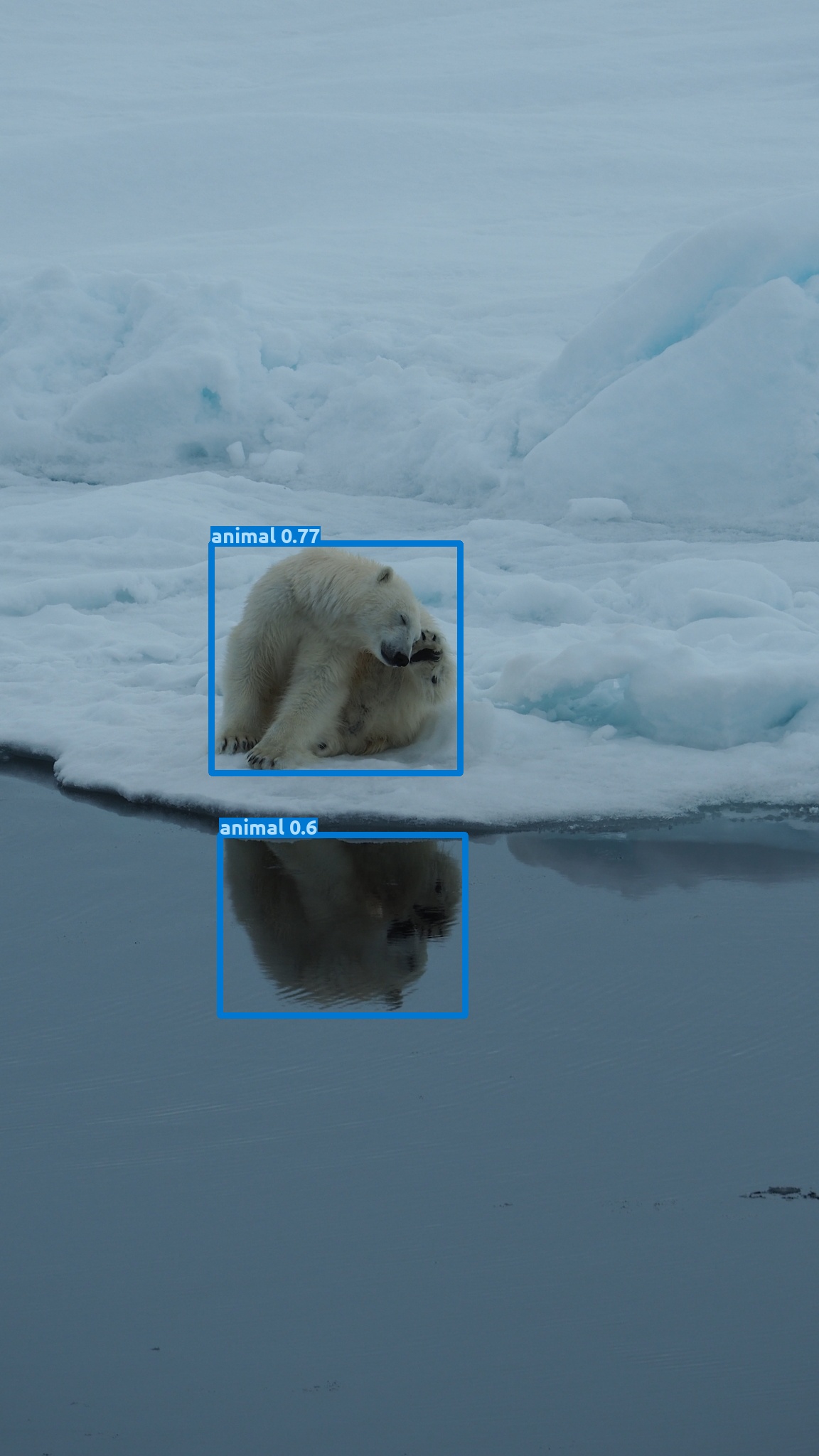

I made a few rotation experiments with MD5b.

Here is the original image (1152x2048) :

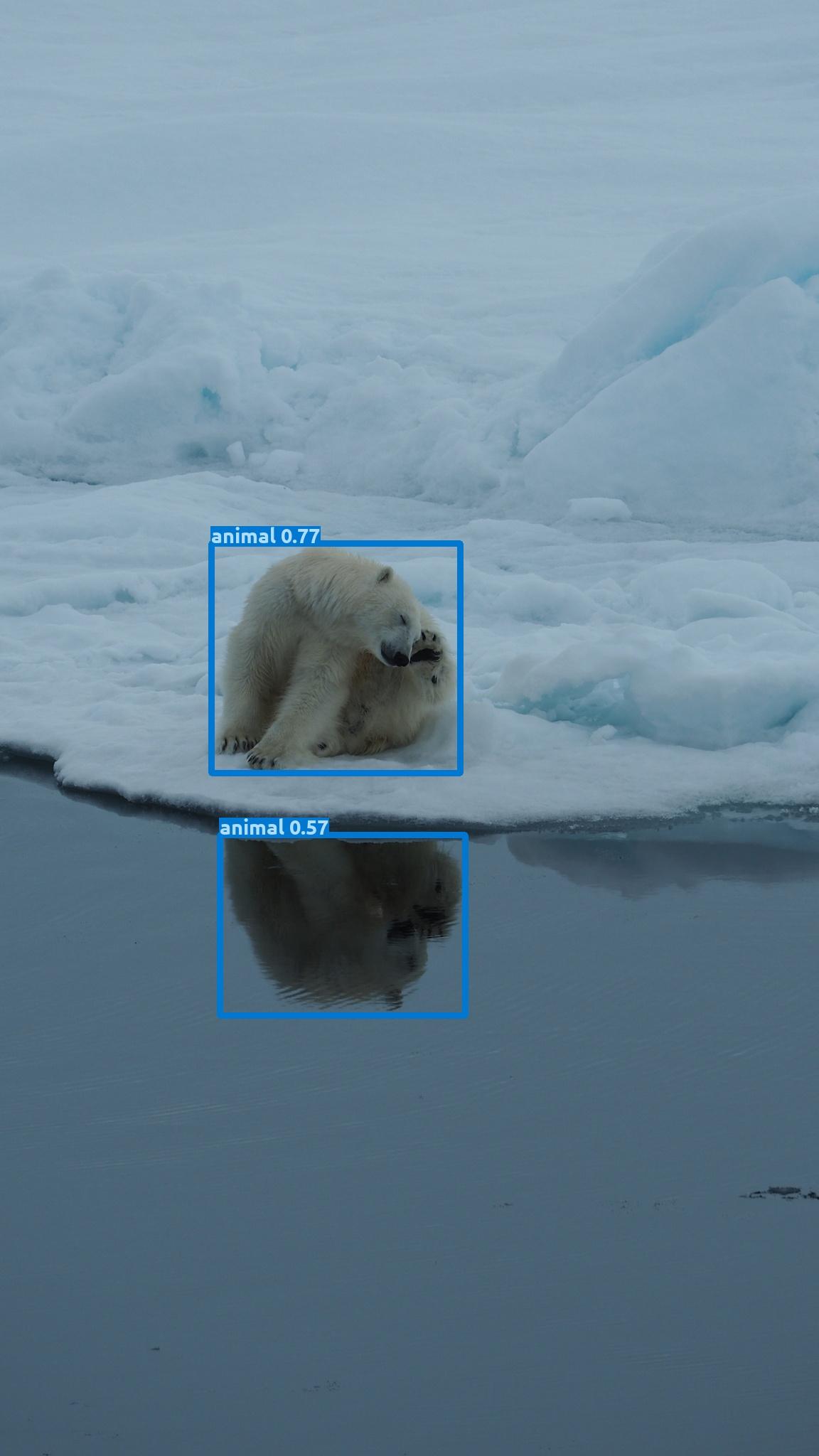

When saving this as a copy in Photoshop, the confidence on the mirror image changes slightly:

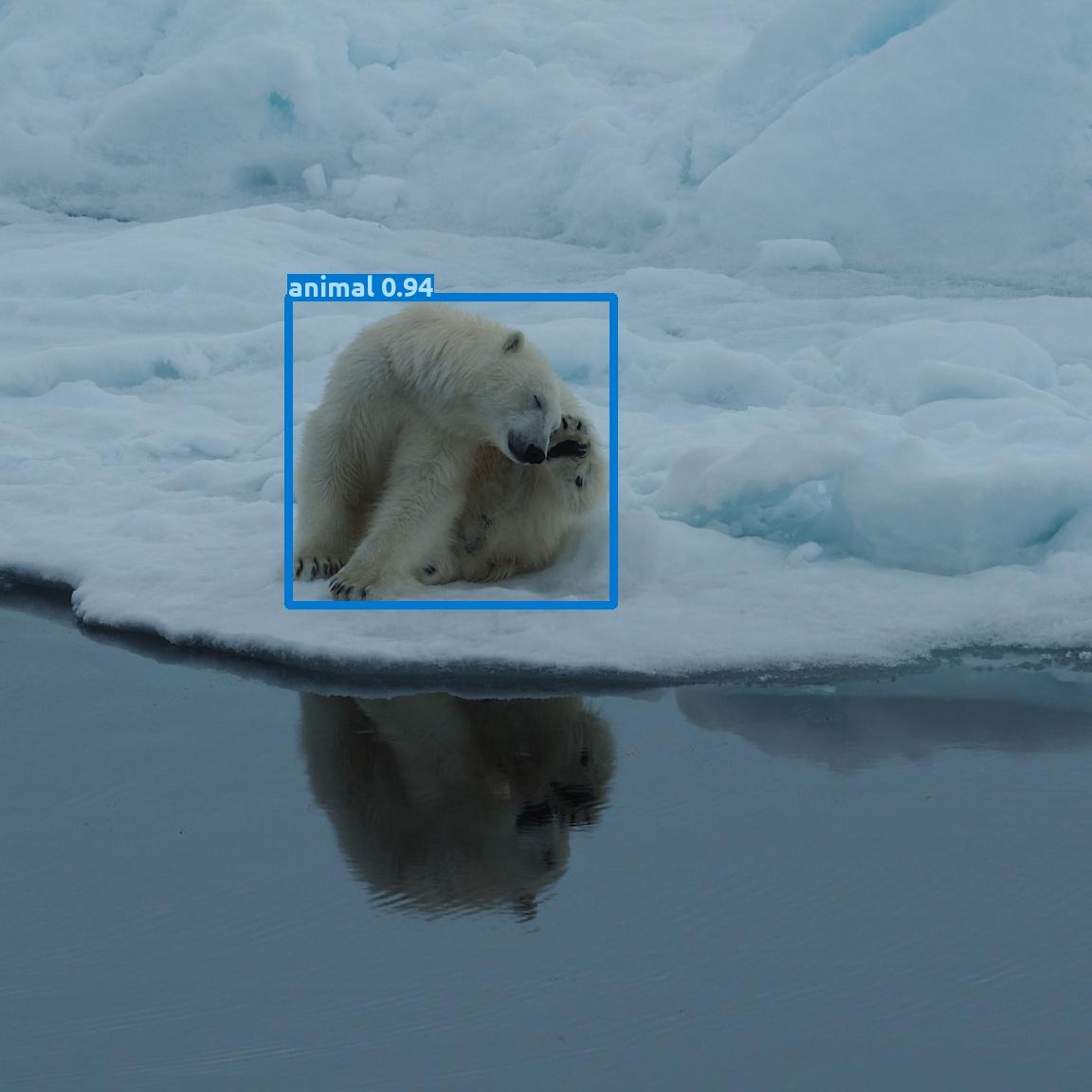

And when just cropping to a 1152x1152 square, it changes quite a bit:

The mirror image confidence drops below my chosen threshold of 0.2 but the non-mirrored image now gets a confidence boost.

Something must be going on with overall scaling under the hood in MD, as the targets here have exactly the same number of pixels.

I tried resizing to 640x640:

This bumped the mirror image confidence back over 0.2... but lowered the non-mirrored confidence a bit... huh!?
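One plausible contributor to these shifts: detectors like MegaDetector resize every input to a fixed inference size, so the same bear ends up at a different pixel size inside the network depending on the overall image dimensions, even when its pixel count in the source file is identical. The sketch below assumes MDv5 scales the longer side to 1280 px (its usual inference size as far as I know; check the settings of your version) and works out the scale factor for each of the three inputs tried above. It is arithmetic only, not a confirmed explanation of the confidence changes.

```python
def inference_scale(width: int, height: int, target_long_side: int = 1280) -> float:
    """Factor applied when the longer image side is resized to target_long_side (assumed behaviour)."""
    return target_long_side / max(width, height)


for w, h in [(1152, 2048), (1152, 1152), (640, 640)]:
    s = inference_scale(w, h)
    # A hypothetical 200 px tall bear in the source image, as seen by the network.
    print(f"{w}x{h}: scale {s:.3f}, a 200 px bear becomes {200 * s:.0f} px")
```

Under that assumption the original frame is shrunk to 0.625x, the square crop is enlarged about 1.11x, and the 640x640 version is doubled, so the animal the network sees differs in size by more than a factor of three across the experiments.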

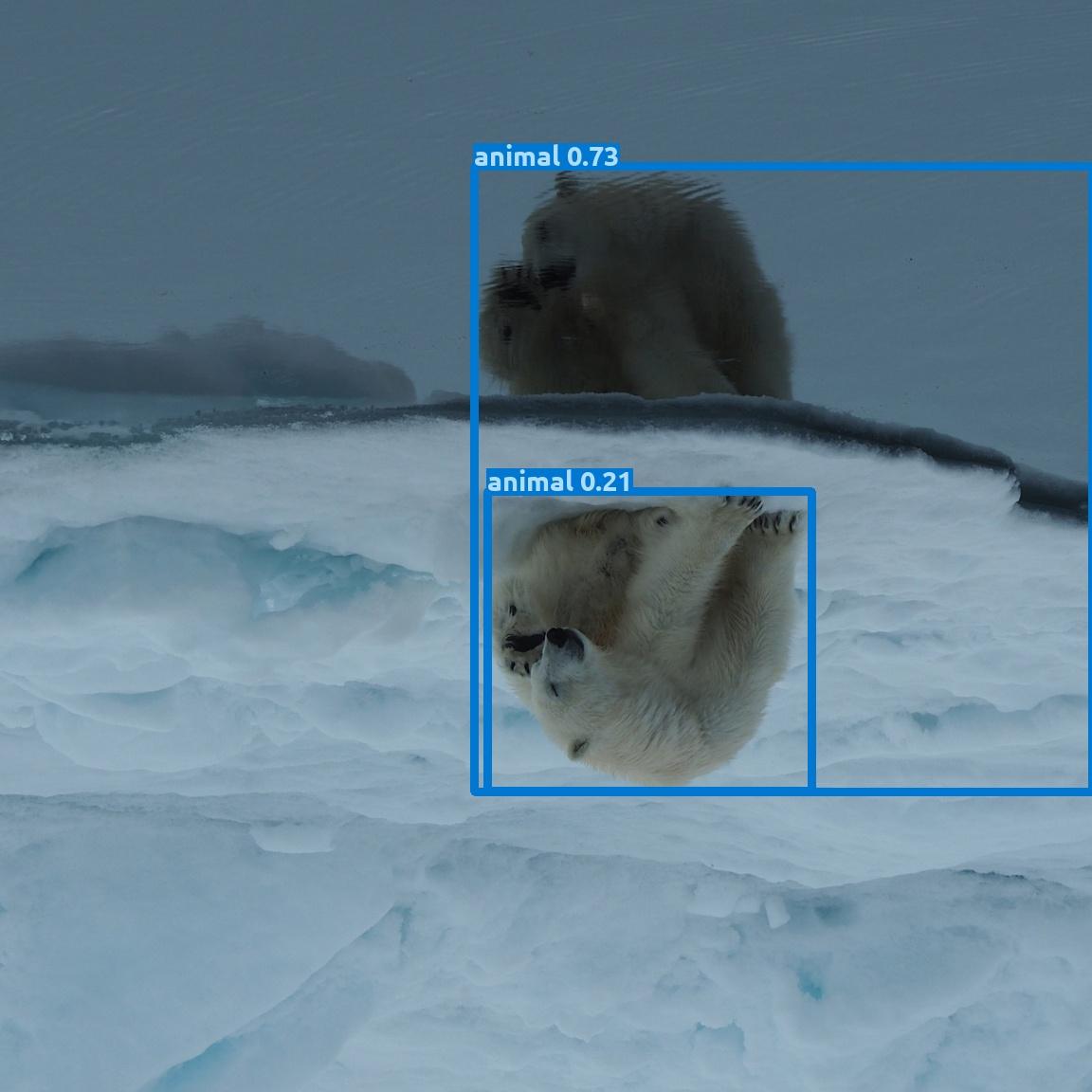

My original hypothesis was that the confidence could be somewhat swapped just by turning the image upside down (180 degree rotation):

Here is the 1152x1152 crop rotated 180 degrees:

The mirror part now got a higher confidence, but it is interpreted as a sub-part of a larger organism. The non-mirrored polar bear had a drop in confidence.

So my hypothesis was somewhat confirmed...

This leads me to believe that MD is not trained on many upside-down animals...

- and probably our PolarbearWatchdog! should not be either ... ;)

9 December 2024 4:27pm

It seems we should include some rotations in our image augmentations, since the real world can appear a bit tilted, as this cropped corner view from our fisheye camera at the zoo shows.
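When rotations are added to training augmentations, the bounding-box labels have to be rotated along with the pixels. Below is a minimal sketch for quarter-turn rotations (including the upside-down case from the experiments above) and horizontal flips, using normalized `(x_min, y_min, width, height)` boxes with a top-left origin, the convention MegaDetector output uses as far as I know. The pixels would be rotated by the same number of counter-clockwise quarter turns with your image library, e.g. Pillow's `Image.rotate(90 * k, expand=True)`, which rotates counter-clockwise. The sampling policy in `random_orientation` is hypothetical. Small tilts like the fisheye corner need arbitrary-angle rotation, where the rotated box must be re-enclosed in an axis-aligned box; that case is not covered here.

```python
import random

Box = tuple[float, float, float, float]  # normalized (x_min, y_min, width, height), top-left origin


def rotate90_ccw(box: Box) -> Box:
    """Box after rotating the image 90 degrees counter-clockwise (image width and height swap)."""
    x, y, w, h = box
    return (y, 1.0 - x - w, h, w)


def rotate_box(box: Box, quarter_turns: int) -> Box:
    """Apply k counter-clockwise quarter turns; k=2 is the upside-down case."""
    for _ in range(quarter_turns % 4):
        box = rotate90_ccw(box)
    return box


def hflip(box: Box) -> Box:
    """Box after mirroring the image left-right."""
    x, y, w, h = box
    return (1.0 - x - w, y, w, h)


def random_orientation(boxes: list[Box], rng: random.Random, p_rotate: float = 0.3) -> tuple[int, list[Box]]:
    """Pick a quarter-turn count (hypothetical policy) and return it with the transformed boxes.

    The caller must rotate the image pixels by the same number of counter-clockwise quarter turns.
    """
    k = rng.choice([1, 2, 3]) if rng.random() < p_rotate else 0
    return k, [rotate_box(b, k) for b in boxes]
```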

Fauna & Flora SMART Competences Consultancy

4 December 2024 2:21pm

Conservation Data Strategist?

20 November 2024 3:50pm

22 November 2024 2:28pm

Great resources being shared! Darwin Core is a commonly used bio-data standard as well.

For bioacoustic data, there are some metadata standards (GUANO is used by pretty much all the terrestrial ARU manufacturers). Some use Tethys as well.
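To show how lightweight GUANO is to read: as I understand the GUANO specification, the metadata lives inside the WAV file itself, in a RIFF sub-chunk with the ID `guan`, as UTF-8 text made of `Key: Value` lines, with namespaced keys such as `GUANO|Version`. The stdlib-only sketch below walks the RIFF chunks and parses that text into a dict; it does not handle every detail of the spec (e.g. value types or escaping), and the keys used in testing are illustrative.

```python
import struct


def iter_riff_chunks(data: bytes):
    """Yield (chunk_id, payload) for each top-level sub-chunk of a RIFF/WAVE file."""
    if data[:4] != b"RIFF" or data[8:12] != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    pos = 12
    while pos + 8 <= len(data):
        chunk_id, size = struct.unpack_from("<4sI", data, pos)  # 4-byte ID, little-endian size
        yield chunk_id.decode("ascii", "replace"), data[pos + 8 : pos + 8 + size]
        pos += 8 + size + (size & 1)  # chunks are padded to an even length


def read_guano(data: bytes) -> dict[str, str]:
    """Parse the 'guan' chunk's 'Key: Value' lines into a dict (empty if there is no GUANO chunk)."""
    for chunk_id, payload in iter_riff_chunks(data):
        if chunk_id == "guan":
            meta = {}
            for line in payload.decode("utf-8").strip("\x00").splitlines():
                if ":" in line:
                    key, value = line.split(":", 1)  # split once: values such as timestamps contain ':'
                    meta[key.strip()] = value.strip()
            return meta
    return {}
```

Usage: `read_guano(open("recording.wav", "rb").read())` on a file from a GUANO-writing recorder (the filename is a placeholder).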

Recordings are typically made as .WAV files, but many people store them as .flac (a lossless compression format) to save space.

For ethics, usually acoustic data platforms with a public-facing component (e.g., Arbimon, WildTrax, etc.) will mask presence/absence geographical data for species listed on the IUCN RedList, CITES, etc. so that you're not giving away geographical information on where a species is to someone who would use it to go hunt them for example.
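A minimal sketch of the masking idea, applied to a Darwin Core-style occurrence record: coordinates for species on a sensitive list are rounded to a coarser grid before publishing, the stated uncertainty is widened accordingly, and the record notes the generalization. `scientificName`, `decimalLatitude`, `decimalLongitude`, `coordinateUncertaintyInMeters` and `dataGeneralizations` are standard Darwin Core terms; the sensitive-species set, the one-decimal-place rounding and the metres-per-degree constant are hypothetical choices, and platforms such as those mentioned above apply their own rules.

```python
import math
from copy import deepcopy

METRES_PER_DEGREE_LAT = 111_320.0  # approximate length of one degree of latitude


def generalize_record(record: dict, sensitive_species: set[str], decimals: int = 1) -> dict:
    """Return a copy of a Darwin Core-style record with coordinates coarsened for sensitive taxa."""
    out = deepcopy(record)
    if record.get("scientificName") not in sensitive_species:
        return out
    out["decimalLatitude"] = round(record["decimalLatitude"], decimals)
    out["decimalLongitude"] = round(record["decimalLongitude"], decimals)
    # Rounding moves each coordinate by at most half a grid cell. Using the latitude scale for
    # both axes gives an upper bound, since a degree of longitude is never longer.
    half_cell_m = 0.5 * 10 ** (-decimals) * METRES_PER_DEGREE_LAT
    added_m = math.ceil(math.sqrt(2) * half_cell_m)
    out["coordinateUncertaintyInMeters"] = record.get("coordinateUncertaintyInMeters", 0) + added_m
    out["dataGeneralizations"] = f"Coordinates rounded to {decimals} decimal place(s)"
    return out
```

Working on a copy keeps the precise original for internal analysis while only the generalized version is exported publicly.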

29 November 2024 12:13pm

Hello, I am experienced in conservation data strategy. If you want to have a conversation you can reach me at SustainNorth@gmail.com.

29 November 2024 5:51pm

Bird Monitoring Data Exchange is a standard often used for bird data.

Recovery Ecology Post Doctoral Associate - San Diego Zoo Wildlife Alliance

26 November 2024 11:47pm


Nice one - what kind of thing would you use this for?
