With new technologies revolutionizing data collection, wildlife researchers are becoming increasingly able to collect data at much higher volumes than ever before. Now we are facing the challenges of putting this information to use, bringing the science of big data into the conservation arena. With the help of machine learning tools, this area holds immense potential for conservation practices. The applications range from online trafficking alerts to species-specific early warning systems to efficient movement and biodiversity monitoring and beyond.

However, the process of building effective machine learning tools depends upon large amounts of standardized training data, and conservationists currently lack an established system for standardization. How to best develop such a system and incentivize data sharing are questions at the forefront of this work. There are currently multiple AI-based conservation initiatives, including Wildlife Insights and WildBook, that are pioneering applications on this front.

This group is the perfect place to ask all your AI-related questions, no matter your skill level or previous familiarity! You'll find resources, meet other members with similar questions and experts who can answer them, and engage in exciting collaborative opportunities together. The AI for Conservation group provides a dedicated space to:

- Bridge disciplines: Create a space where ecologists, conservationists and environmental scientists can connect with computer scientists, artificial intelligence researchers and practitioners to address shared challenges.

- Advance knowledge: Share and discuss research, case studies and best practices at the intersection of artificial intelligence and conservation.

- Educate: Provide educational resources that help ecologists understand AI methods and their use cases and inspire AI experts to learn about ecological applications.

- Facilitate collaboration: Offer resources and networking opportunities that enable AI researchers and conservation practitioners to co-develop solutions with real-world conservation impact.

Just getting started with AI in conservation? Check out our introductory tutorial, How Do I Train My First Machine Learning Model? with Daniel Situnayake, and our Virtual Meetup on Big Data. If you're coming from the more technical side of AI/ML, Sara Beery runs an AI for Conservation Slack channel that might be of interest. Message her for an invite.

Header Image: Dr Claire Burke / @CBurkeSci

Explore the Basics: AI

Understanding the possibilities for incorporating new technology into your work can feel overwhelming. With so many tools available, so many resources to keep up with, and so many innovative projects happening around the world and in our community, it's easy to lose sight of how and why these new technologies matter, and how they can be practically applied to your projects.

Machine learning has huge potential in conservation tech, and its applications are growing every day! But the tradeoff of that potential is a big learning curve - or so it seems to those starting out with this powerful tool!

To help you explore the potential of AI (and prepare for some of our upcoming AI-themed events!), we've compiled simple, key resources, conversations, and videos to highlight the possibilities:

Three Resources for Beginners:

- Everything I know about Machine Learning and Camera Traps, Dan Morris | Resource library, camera traps, machine learning

- Using Computer Vision to Protect Endangered Species, Kasim Rafiq | Machine learning, data analysis, big cats

- Resource: WildID | WildID

Three Forum Threads for Beginners:

- I made an open-source tool to help you sort camera trap images | Petar Gyurov, Camera Traps

- Batch / Automated Cloud Processing | Chris Nicolas, Acoustic Monitoring

- Looking for help with camera trapping for Jaguars: Software for species ID and database building | Carmina Gutierrez, AI for Conservation

Three Tutorials for Beginners:

- How do I get started using machine learning for my camera traps? | Sara Beery, Tech Tutors

- How do I train my first machine learning model? | Daniel Situnayake, Tech Tutors

- Big Data in Conservation | Dave Thau, Dan Morris, Sarah Davidson, Virtual Meetups

Want to know more about AI, or have your specific machine learning questions answered by experts in the WILDLABS community? Make sure you join the conversation in our AI for Conservation group!

Group curators

- @annavallery (she/her)

Seabird biologist experienced in research and applied conservation. Dedicated to conducting and using innovative research to inform conservation decisions.


- @ViktorDo (he/him)

PhD student at University of Exeter & University of Queensland. Interested in researching AI and its responsible application to Ecology, Environmental Monitoring and Nature Conservation.


I'm a software developer. I have projects in practical object detection and alerting that are well suited for poacher detection, and a Raspberry Pi-based sound-localizing ARU project.


- @silbelliard (she/her/ella)


Building Animal Detect: a software tool to process wildlife data in minutes using the latest cool tech.


Stop The Desert

Founder of Stop The Desert, leading global efforts in regenerative agriculture, sustainable travel, and inclusive development.


- @CourtneyShuert (she/her)

I am a behavioural ecologist and eco-physiologist interested in individual differences in marine mammals and other predators.


- @TaliaSpeaker (she/her)

WILDLABS & World Wide Fund for Nature/ World Wildlife Fund (WWF)

I'm the Executive Manager of WILDLABS at WWF


- @sam_lima (she/her)

Postdoctoral Associate studying burrowing owls at the San Diego Zoo Wildlife Alliance


- @ginevrabellini (she/her)

I am an ecologist by training and currently work as community manager at the WiNoDa Knowledge Lab of the Museum für Naturkunde Berlin. We are a data competence center offering free educational resources for researchers working with natural science collections and biodiversity.


WILDLABS & Wildlife Conservation Society (WCS)

I'm the Bioacoustics Research Analyst at WILDLABS. I'm a marine biologist with a particular interest in the acoustic behavior of cetaceans. I'm also a backend web developer, hoping to use technology to improve wildlife conservation efforts.


Instituto Humboldt is looking for an Artificial Intelligence Developer who wants to apply their experience in Python and natural language processing to protect biodiversity.

25 July 2025

The Marine Innovation Lab for Leading-edge Oceanography develops hardware and software to expand the ocean observing network and for the sustainable management of natural resources. For Fall 2026, we are actively...

24 July 2025

Dear colleagues, I'd like to share with you the output of the project KIEBIDS, which focused on using AI to extract biodiversity-relevant information from museum labels. Perhaps it can be applied also to other written...

17 July 2025

The Department of AI and Society (AIS) at the University at Buffalo (UB) invites candidates to apply for multiple positions as Assistant Professor, Associate Professor, or Full Professor. The new AIS department was...

8 July 2025

This is a chance to participate in a short survey about the preferences that conservation practitioners have for evidence. There's a chance to win one of three £20 Mastercard gift cards.

24 June 2025

In this case, you’ll explore how the BoutScout project is improving avian behavioural research through deep learning—without relying on images or video. By combining dataloggers, open-source hardware, and a powerful...

24 June 2025

Using Ultra-High-Resolution Drone Imagery and Deep Learning to quantify the impact of avian influenza on the northern gannet colony of Bass Rock, Scotland. Would love to hear if you know of any other similar examples from...

12 June 2025

Universidad de Ingeniería y Tecnología (UTEC) is looking to fill new vacancies at its Amazonian Institute for Sustainability Research (Instituto Amazónico de Investigación para la Sostenibilidad, ASRI).

12 June 2025

Shared from WWF: "ManglarIA is a mangrove conservation project, supported by Google.org in 2023, that is deploying advanced technology, including artificial intelligence (AI), to collect and analyze data on the health...

12 June 2025

Careers

Rewilding Europe is seeking a Business Intelligence Analyst to support measuring rewilding impact through data automation, dashboards, and cross-domain analysis.

3 June 2025

The intern will support CI in exploring and implementing AI solutions that address conservation challenges. We are looking for someone familiar with modern AI technologies (genAI, AI agents, LLMs, foundation models, etc...

2 June 2025

HawkEars is a deep learning model designed specifically to recognize the calls of 328 Canadian bird species and 13 amphibians.

13 May 2025


| Description | Groups | Updated |
|---|---|---|
| Hello everyone, I’ve been running a set of trail cameras for several months and now have thousands of images showing consistent activity... | Camera Traps, AI for Conservation, Animal Movement | 13 minutes 41 seconds ago |
| Hello WildLabs community, here's an update on our progress with the AI-based marine mammal sound detection platform. What We've Accomplished: We've completed our first milestone:... | Acoustics, AI for Conservation, Marine Conservation | 58 minutes 38 seconds ago |
| Hi Chad, it's great to hear from you. It's really a great idea and an impactful journey in our community. Having experience in community-led conservation initiatives, working... | Acoustics, AI for Conservation, Animal Movement, Camera Traps, Citizen Science, Climate Change, Community Base, Connectivity, Drones, eDNA & Genomics, Emerging Tech, Funding and Finance, Geospatial, Human-Wildlife Coexistence, Software Development, Wildlife Crime | 1 day 5 hours ago |
| Hi everyone :) I’ll be in Kinshasa, DRC from 22 to 27 September as part of the AFRISTART program (Qawafel/RedStart Tunisie), which supports... | AI for Conservation, Community Base, East Africa Community, Edge Computing | 1 day 7 hours ago |
| We sell a product that includes the model, but we don't have a freely downloadable model for that purpose. You could try Pytorch-Wildlife or SpeciesNet for a version to compare... | AI for Conservation, Camera Traps, Edge Computing | 6 days 1 hour ago |
| In the last 3 months we hosted Allander Hobbs, an undergraduate student from the University of Edinburgh, UK. During his interactive... | AI for Conservation, Animal Movement, Autonomous Camera Traps for Insects, Citizen Science | 6 days 1 hour ago |
| Thanks for the informative videos! I was wondering if anyone had recommendations for the best IR PoE camera for monitoring wildlife at night? My setup streams to a Frigate server... | AI for Conservation, Autonomous Camera Traps for Insects, Camera Traps, Edge Computing | 1 week ago |
| Hi Youssef, yes, I've been following along and am looking forward to this group! Feel free to share whatever! | AI for Conservation, Camera Traps, Edge Computing | 1 week 1 day ago |
| Hey Alejandro, thanks for trying it! :)) The feature you are asking about is technically called animal re-identification. Unfortunately, we currently don't have this... | AI for Conservation, Camera Traps, Data Management & Mobilisation, Software Development | 1 week 4 days ago |
| Very inspiring! I completely agree that AI should help free conservationists from repetitive tasks so they can focus on strategy and fieldwork. In our IDEPROCONA project in... | AI for Conservation | 1 week 6 days ago |
| I create ocean exploration and marine life content on YouTube, whether it be recording nautilus on BRUVs, swimming with endangered bowmouth... | Acoustics, AI for Conservation, Animal Movement, Camera Traps, Citizen Science, Drones, Emerging Tech, Marine Conservation, Sensors, Sustainable Fishing Challenges, Wildlife Crime | 2 months ago |
| I think it is time to explore the many applications of AI around our careers and projects, including waste management! This is also very interesting. | AI for Conservation | 2 weeks ago |

Seeking Guidance: Identifying Individual Deer, Bears, and Bobcats from Trail Camera Images

2 September 2025 8:05pm

WILDLABS AWARDS 2025 - Open-Access AI for Marine Mammal Acoustic Detection

27 May 2025 7:53pm

New Group Proposal: Systems Builders & PACIM Designers

18 June 2025 2:52pm

19 June 2025 12:19pm

Hello again, sir. The way I see it, PACIMs really mean 'projects': each part of the acronym can be seen as a project (if you have an assignment to do, you really have a project).

As for your query about 10 projects in 'this' group, I should ask for clarification: do you mean acoustics in particular, or any group? (I see now that this is the acoustics thread, after I selected all the groups for this post.) If you are asking about acoustics, you're right: I am unsure about 10, as I am not too keen on acoustics yet. If you mean 10 projects as a whole, like 10 projects in the Funding and Finance group, I believe 10 to be a very reasonable number. The projects we have co-created are, for the most part, replicable, rapidly deployable, quickly scalable, fundable through blended finance and more.

Thank you again for the feedback.

19 June 2025 1:43pm

Thank you for your reply, Chad

I meant 10 as a whole, indeed. Perhaps you see your post in one group, but since it is tagged for all groups, I assumed you meant 10 in total.

In your first post you explain PACIM stands for "Projects, Assignments, Campaigns, Initiatives, Movements, and Systems", so I understood it as more than just projects. Obviously, many things can be packed into a project or called a project, but then, what does it mean that 'Projects' is part of the list?

Well, if you think 10 projects is doable, then don't let me stop you.

1 September 2025 2:51pm

Hi Chad, it's great to hear from you. It's really a great idea and an impactful journey in our community.

I have experience in community-led conservation initiatives, working in and leading community-based conservation as the leader of @nature_embassy (found on Instagram and other social pages).

I will be glad to be part of the group, thank you!

Exploring Conservation Tech Partnerships in DRC

1 September 2025 1:02pm

Edge AI Earth Guardians

28 August 2025 2:00pm

Proof of concept - Polar bear detection

22 August 2025 2:02pm

23 August 2025 3:44pm

Thanks man!

27 August 2025 7:01pm

Great work!

Is your bear detection model available for third-party use?

I'm working on a prototype project for tracking bears in a zoo enclosure. I've trained the yolo11n model on the bears dataset and would like to compare the results.

27 August 2025 8:55pm

We sell a product that includes the model, but we don't have a freely downloadable model for that purpose. You could try Pytorch-Wildlife or SpeciesNet for a version to compare against.

I'm happy to process any of your images and feed you the results if you like?
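When comparing a custom yolo11n model against another detector on the same images, one common approach is to pair up boxes by intersection-over-union (IoU) and count how many each model finds that the other misses. The sketch below is a minimal, framework-independent version; it assumes boxes are already in `(x1, y1, x2, y2)` pixel coordinates, and the greedy matching and 0.5 threshold are conventional choices rather than anything specified in this thread.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed pixel coordinates


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(model_a: List[Box], model_b: List[Box],
                     threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedily pair boxes from two models; return (matched, only_a, only_b)."""
    # Score every candidate pair, then accept the best-overlapping pairs first
    pairs = sorted(
        ((iou(a, b), i, j) for i, a in enumerate(model_a) for j, b in enumerate(model_b)),
        reverse=True,
    )
    used_a, used_b = set(), set()
    for score, i, j in pairs:
        if score < threshold:
            break  # remaining pairs overlap too little to count as the same animal
        if i not in used_a and j not in used_b:
            used_a.add(i)
            used_b.add(j)
    matched = len(used_a)
    return matched, len(model_a) - matched, len(model_b) - matched
```

Summing these counts over an image set gives a rough picture of where two models disagree; with hand-labelled boxes in place of one model, the same matching yields true positives, false positives and false negatives for precision and recall.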

AI TECH FOR CONSERVATION,

27 August 2025 7:09pm

Mini AI Wildlife Monitor

25 June 2025 12:27pm

26 August 2025 10:47am

Hi Luke,

I wasn't thinking of turning the RPi on/off, just the energy-hungry AI stuff. But if the board needs 1W on its own... ugh.

Just wondering about your last comment: why does the MPPT have to interface with the RasPi? You can have a cheap standalone MPPT (or even PWM) charger with large PV panel(s) and battery/ies, but all the RasPi cares about is its 5V supply from some external source. Monitoring an SLA/AGM battery bank could be as simple as a resistor divider (bridge) feeding an ADC pin. For LiFePO4 batteries you'd need to query their BMS or run a shunt, I guess, but some do have Bluetooth APIs... So clearly, since you're working on it, there's a good reason (the "bunch of features"?) I'm missing :-)
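The resistor-divider idea for an SLA/AGM bank comes down to converting raw ADC counts back to battery volts. The Raspberry Pi has no built-in ADC, so this sketch assumes an external one; the resistor values, 3.3 V reference and 12-bit resolution are illustrative assumptions, not values from the thread.

```python
def divider_ratio(r_top_ohms: float, r_bottom_ohms: float) -> float:
    """Fraction of the battery voltage that appears at the divider midpoint."""
    return r_bottom_ohms / (r_top_ohms + r_bottom_ohms)


def battery_voltage(adc_counts: int, r_top_ohms: float, r_bottom_ohms: float,
                    v_ref: float = 3.3, adc_bits: int = 12) -> float:
    """Convert a raw ADC reading taken at the divider midpoint to battery volts.

    v_ref and adc_bits describe the (assumed) external ADC.
    """
    full_scale = (1 << adc_bits) - 1
    v_pin = adc_counts / full_scale * v_ref  # voltage actually seen at the ADC pin
    return v_pin / divider_ratio(r_top_ohms, r_bottom_ohms)


def max_safe_battery_voltage(r_top_ohms: float, r_bottom_ohms: float,
                             v_ref: float = 3.3) -> float:
    """Highest battery voltage that keeps the ADC pin at or below v_ref."""
    return v_ref / divider_ratio(r_top_ohms, r_bottom_ohms)
```

The ratio needs headroom for charging voltage, which on a nominal 12 V lead-acid bank can exceed 14 V: with 30 kΩ over 10 kΩ the pin saturates at 13.2 V, whereas 47 kΩ over 10 kΩ allows about 18.8 V before clipping.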

26 August 2025 11:32am

We want the Pi to interface with the charge controller so we can monitor power input and battery usage/status. We've got a few other features for remote scheduling, power control, etc. There are absolutely other ways you can do this, but they are usually big, bulky and have to be hacked together. We're developing something much more compact than what you can build with off-the-shelf components.

I've got some pictures in my latest blog:

Blog — AutoEcology

26 August 2025 11:45am

Thanks for the informative videos! I was wondering if anyone had recommendations for the best IR PoE camera for monitoring wildlife at night? My setup streams to a Frigate server where I'm running the detection.

AI Edge Compute Based Wildlife Detection

23 February 2025 5:24am

23 August 2025 10:27pm

Hi Brian,

I have a number of videos on my channel about aspects of this project/technology. For training a model for the AI camera, see this video:

https://youtu.be/I69lAtA2pP0

24 August 2025 11:06am

Hi Luke,

Thank you so much for the high-quality content you create; it’s been incredibly valuable. I’m excited to share that a new community group dedicated to Edge Computing has just been launched, and as one of the co-leads, I’d be delighted to invite you to join and participate.

Link to the group:

With your permission, I’d also love to feature your channel and videos in our Learning Resources section, so more members can benefit from your expertise.

Looking forward to your thoughts :)

Youssef

25 August 2025 6:20am

Hi Youssef,

Yes I've been following along and am looking forward to this group!

Feel free to share whatever!

Free online tool to analyze wildlife images

4 August 2025 8:01am

8 August 2025 5:23pm

Hello Eugene, I just tried your service:

I was wondering whether it would be possible to add an option to upload a second image and run a comparison that tells the user whether the body patterns are 'same' or 'different', helping to identify individuals.

Thanks and kind regards from Colombia,

Alejo

22 August 2025 6:55am

Hey Alejandro, thanks for trying it! :))

The feature you are asking about is technically called animal re-identification. Unfortunately, we currently don't have this technology in Animal Detect. It is quite different from finding animals in an image and guessing their species, so there is no easy way to add it.
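One common way re-identification differs from species detection is that the model maps each animal crop (for instance a flank showing its coat pattern) to a feature vector, and two images are then compared by vector similarity. The sketch below covers only that final 'same' or 'different' decision and assumes the embeddings already exist; the 0.8 threshold is hypothetical, and real systems calibrate it on labelled pairs of known individuals.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero-length embedding")
    return dot / (norm_a * norm_b)


def same_individual(a: Sequence[float], b: Sequence[float], threshold: float = 0.8) -> str:
    """Return 'same' or 'different' for two embeddings (threshold is hypothetical)."""
    return "same" if cosine_similarity(a, b) >= threshold else "different"
```

The hard part, and the reason it cannot simply be bolted onto a species classifier, is training the embedding model so that photos of the same individual land close together despite pose, lighting and occlusion.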

In a similar WILDLABS post there was a discussion about re-identification of snow leopards. Would ocelots be similar in this context?

Take a look at the discussion here:

IDEPROCONA Presentation Video

20 August 2025 8:28pm

Excited about AI in Conservation

21 July 2025 2:03pm

20 August 2025 8:00pm

Very inspiring! I completely agree that AI should help free conservationists from repetitive tasks so they can focus on strategy and fieldwork. In our IDEPROCONA project in Eastern DRC, we are also exploring how AI could support drones and mobile apps to detect illegal logging and wildlife threats faster. Excited to learn from your experience!

I WANT TO TELL YOUR STORY

29 June 2025 10:22am

AI for Waste Management

19 August 2025 1:39pm

19 August 2025 2:53pm

I think it is time to explore the many applications of AI around our careers and projects, including waste management! This is also very interesting.

How AI Can Help Save Wetlands: The Power of Large Language Models in Conservation

16 August 2025 2:06pm

International Conference on Computer Vision, ICCV 2025

14 August 2025 2:41pm

API endpoint to detect & classify animals

14 August 2025 12:54pm

Tracking Individual Whales in 360-degree Videos

28 July 2025 1:22am

5 August 2025 5:20pm

Hey Courtney! I've just sent you an email about coming on Variety Hour to talk about your work :) Looking forward to hearing from you!

8 August 2025 4:40pm

Have you tried using Insta360's DeepTrack feature on your Studio desktop app? We have used it for similar use cases and it worked well. I would love to hear if it works for your science objectives. We are also experimenting and would love to know your thoughts. :) https://youtu.be/z8WKtdPCL_E?t=123

9 August 2025 12:44am

Hi @CourtneyShuert

We support NOAA with AI for individual ID of belugas (from aerial views and from the lateral surface too). If some of our techniques can be cross-applied, please reach out: jason at wildme dot org

Cheers,

Jason

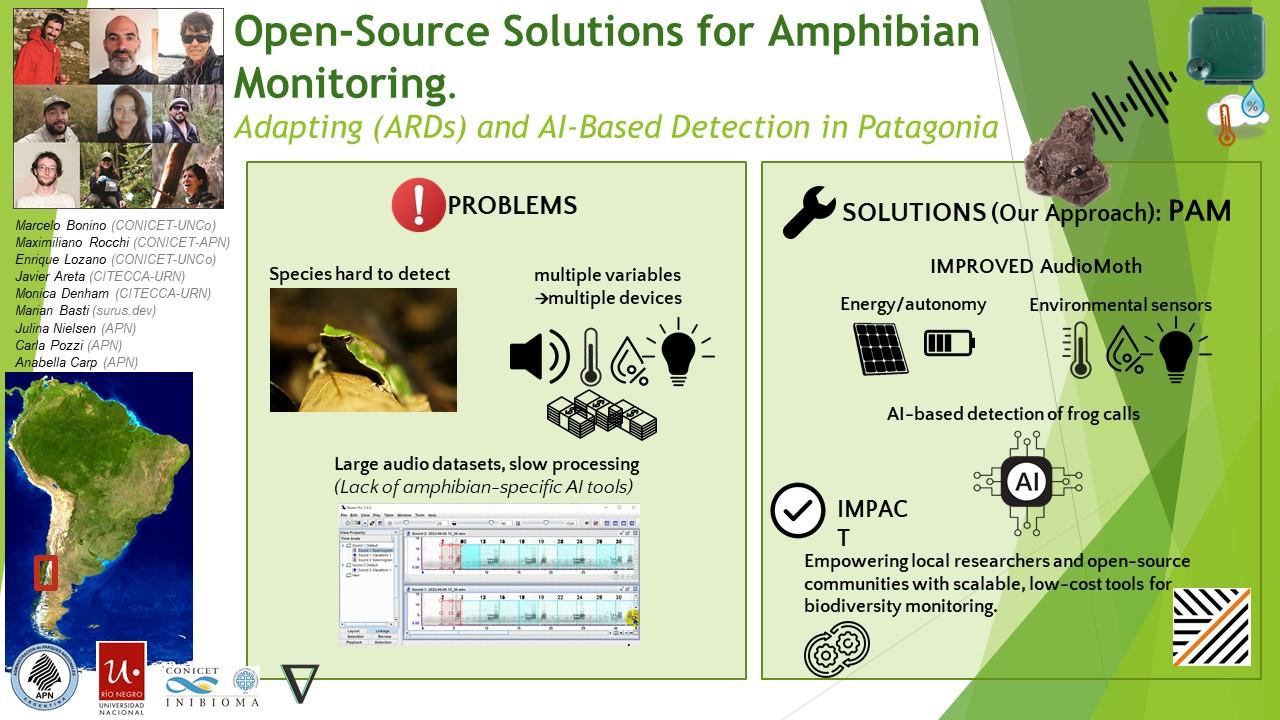

🐸 WILDLABS Awards 2025: Open-Source Solutions for Amphibian Monitoring: Adapting Autonomous Recording Devices (ARDs) and AI-Based Detection in Patagonia

27 May 2025 8:39pm

7 August 2025 9:27pm

Project Update: Sensors, Sounds, and DIY Solutions

We continue making progress on our bioacoustics project focused on the conservation of Patagonian amphibians, thanks to the support of WILDLABS. Here are some of the areas we’ve been working on in recent months:


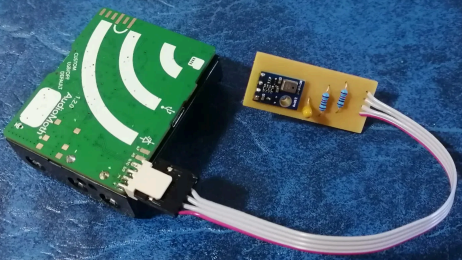

1. Hardware

One of our main goals was to explore how to improve AudioMoth recorders to capture not only sound but also key environmental variables for amphibian monitoring. We tested an implementation of the I2C protocol using bit banging via the GPIO pins, allowing us to connect external sensors. The modified firmware is already available in our repository:

👉 https://gitlab.com/emiliobascary/audiomoth

We are still working on managing power consumption and integrating specific sensors, but the initial tests have been promising.
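To illustrate what bit banging I2C over GPIO involves, the following Python sketch drives the clock (SCL) and data (SDA) lines in software to send a write transaction. It is not the team's modified AudioMoth firmware (that lives in their repository); `set_scl`, `set_sda` and `read_sda` are hypothetical stand-ins for a platform GPIO API, and the half-period delays and clock stretching that real hardware needs are omitted.

```python
from typing import Callable


class BitBangI2C:
    """Minimal bit-banged I2C controller (write-only sketch).

    set_scl / set_sda drive a line (True = released/high, False = pulled low);
    read_sda samples the data line. All three are hypothetical GPIO callables.
    """

    def __init__(self, set_scl: Callable[[bool], None], set_sda: Callable[[bool], None],
                 read_sda: Callable[[], bool]) -> None:
        self.set_scl = set_scl
        self.set_sda = set_sda
        self.read_sda = read_sda

    def start(self) -> None:
        # START condition: SDA falls while SCL is high
        self.set_sda(True)
        self.set_scl(True)
        self.set_sda(False)
        self.set_scl(False)

    def stop(self) -> None:
        # STOP condition: SDA rises while SCL is high
        self.set_sda(False)
        self.set_scl(True)
        self.set_sda(True)

    def write_byte(self, value: int) -> bool:
        # Shift out 8 bits MSB first; SDA only changes while SCL is low
        for i in range(7, -1, -1):
            self.set_sda(bool((value >> i) & 1))
            self.set_scl(True)
            self.set_scl(False)
        # Ninth clock: release SDA and sample the ACK (low = acknowledged)
        self.set_sda(True)
        self.set_scl(True)
        ack = not self.read_sda()
        self.set_scl(False)
        return ack

    def write(self, address: int, data: bytes) -> bool:
        """Send a 7-bit address with R/W = 0 (write), then the data bytes."""
        self.start()
        ok = self.write_byte((address << 1) & 0xFE)
        for b in data:
            if not ok:
                break  # device did not acknowledge; abort the transfer
            ok = self.write_byte(b)
        self.stop()
        return ok
```

Because the pins are injected as callables, the same logic can be exercised on a desktop against a simulated bus that records line levels before porting it to firmware.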


2. Software (AI)

We explored different strategies for automatically detecting vocalizations in complex acoustic landscapes.

BirdNET is by far the most widely used tool, but we noted that it’s implemented in TensorFlow, a library that is becoming somewhat outdated.

This gave us the opportunity to reimplement it in PyTorch (currently the most widely used and actively maintained deep learning library) and begin pretraining a new model using AnuraSet and our own data. Given the rapid evolution of neural network architectures, we also took the chance to experiment with Transformers, specifically Whisper and DeltaNet.

Our code and progress will be shared soon on GitHub.
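Detectors such as BirdNET score fixed-length segments of a recording rather than a whole file, and overlapping positive segments are then merged into call events. A minimal sketch of those two steps follows; the 3 s window, 1 s hop and 0.5 threshold are illustrative assumptions, and the helper names are hypothetical, not part of BirdNET or the team's PyTorch code.

```python
from typing import List, Tuple


def segment_windows(n_samples: int, sample_rate: int,
                    window_s: float = 3.0, hop_s: float = 1.0) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of fixed-length analysis windows.

    A final partial window is kept so calls near the end are not dropped;
    the model would need that window zero-padded to full length.
    """
    win = int(round(window_s * sample_rate))
    hop = int(round(hop_s * sample_rate))
    if win <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    windows = []
    start = 0
    while start < n_samples:
        windows.append((start, min(start + win, n_samples)))
        if start + win >= n_samples:
            break  # this window already reaches the end of the recording
        start += hop
    return windows


def merge_detections(windows: List[Tuple[int, int]], scores: List[float],
                     threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Merge overlapping windows whose score passes the threshold into events."""
    events: List[Tuple[int, int]] = []
    for (start, end), score in zip(windows, scores):
        if score < threshold:
            continue
        if events and start <= events[-1][1]:
            # Overlaps (or touches) the previous event: extend it
            events[-1] = (events[-1][0], max(events[-1][1], end))
        else:
            events.append((start, end))
    return events
```

Overlapping windows (hop shorter than the window) reduce the chance of a call being split across a boundary, at the cost of running the model more times per recording.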


3. Miscellaneous

Alongside hardware and software, we’ve been refining our workflow.

We found interesting points of alignment with the “Safe and Sound: a standard for bioacoustic data” initiative (still in progress), which offers clear guidelines for folder structure and data handling in bioacoustics. This is helping us design protocols that ensure organization, traceability, and future reuse of our data.

We also discussed annotation criteria with @jsulloa to ensure consistent and replicable labeling that supports the training of automatic models.
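One standard way to check whether annotation criteria produce consistent labels is to have two annotators label the same clips and compute Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The post does not say which metric the team uses; this is an illustrative sketch, and the example labels in the test are made up.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators labelling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: both annotators independently pick the same class
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical class throughout; kappa is undefined, treat as full agreement
    return (observed - expected) / (1 - expected)
```

Values near 1 indicate strong agreement and values near 0 mean agreement no better than chance; a low score on a shared subset is a signal to tighten the labelling guidelines before training.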

We're excited to continue sharing experiences with the Latin America Community: we know we share many of the same challenges, but also great potential to apply these technologies to conservation in our region.


7 August 2025 10:02pm

Love this team <3

We would love to connect with teams also working on the whole AI pipeline: pretraining, fine-tuning and deployment! Training of the models is in progress, and we know a lot can be learned from your experiences!

Also, we are approaching the UI design and development from a software-on-demand philosophy. Why depend on third-party software, having to learn, adapt to and comply with its UX and ecosystem? Thanks to agentic AI in our IDEs, we can quickly and reliably iterate towards tools designed to satisfy our own specific needs and practices, putting the researcher first.

Your ideas, thoughts or critiques are very much welcome!

8 August 2025 1:20pm

Kudos for such an innovative approach: integrating additional sensors with acoustic recorders is a brilliant step forward! I'm especially interested in how you tackle energy autonomy, which I personally see as the main limitation of AudioMoths.

Looking forward to seeing how your system evolves!
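Energy autonomy for a duty-cycled recorder comes down to simple arithmetic: the average current is the duty-weighted mix of active and sleep current, and idealized runtime is battery capacity divided by that average. The sketch below uses hypothetical current figures, not measured AudioMoth values, and ignores self-discharge, temperature and voltage-cutoff effects.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current for a device active `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def runtime_hours(capacity_mah: float, active_ma: float, sleep_ma: float,
                  duty_cycle: float) -> float:
    """Idealized runtime: usable capacity divided by average current."""
    avg = average_current_ma(active_ma, sleep_ma, duty_cycle)
    if avg <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg
```

When sleep current is negligible, halving the duty cycle roughly doubles runtime; any external sensor adds to the active current, and to the sleep current too if it is left powered between recordings.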

Klarna’s AI for Climate Resilience Program

6 August 2025 6:32pm

Tech4Nature Presents: 2025 Innovation Challenge Workshop Series - Registration Now Open!

6 August 2025 5:09pm


AI for Conservation Office Hours: 2025 Review

Jake Burton

and 1 more


6 August 2025 2:16pm

Collaborate on Conservation Tech Publications With HAAG

5 August 2025 11:46pm

PV Nature is recruiting members to its Technical Advisory Committee

5 August 2025 4:03pm

What metadata is used from trail camera images?

31 July 2025 8:52am

4 August 2025 11:00pm

Hi Hugo, it's great that you are thinking about adding metadata features to Animal Detect! I'll share what I think would be useful from my perspective, but I think there is a lot of variation in how folks handle their image organization.

Time and date are probably the most important features. I rename my image files using the camtrapR package, which uses Exiftool to read file metadata and append date and time to the filename. I find this method to be very robust because of the ability to change datetimes if needed -- for example, if the camera was programmed incorrectly, you can apply a timeshift to ensure they are correct in the filenames. Are you considering adding Exif capability directly to Animal Detect? Otherwise, I think that having a tool to parse filenames would be very helpful, where users could specify which parts of the filename correspond to camera site, date, time, etc., so that this information is included in downstream processing tasks.

I have found it frustrating that information such as camera name and temperature is not included in file metadata by many camera manufacturers. I have used OCR to extract that information in these cases, but it requires a bit of manual review, and I wouldn't say it is a regular part of my workflow.

Camera brand and model can be useful for analysis, and image dimensions and burst sequence can be helpful for computer vision tasks.

Hope this helps!

Cara
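A filename parser of the kind Cara describes could let users supply a pattern with named fields for site, date and time, then apply a timeshift for cameras whose clocks were set incorrectly. The sketch below assumes a hypothetical `SITE__YYYY-MM-DD__HH-MM-SS(n).JPG` naming scheme; it is not the camtrapR or Exiftool API, and the pattern and field names are illustrative.

```python
import re
from datetime import datetime, timedelta
from typing import Dict, Optional

# Hypothetical naming scheme; users would supply their own named groups.
DEFAULT_PATTERN = (
    r"(?P<site>[^_/\\]+)__(?P<date>\d{4}-\d{2}-\d{2})__"
    r"(?P<time>\d{2}-\d{2}-\d{2})(?:\((?P<seq>\d+)\))?\.jpe?g$"
)


def parse_filename(name: str, pattern: str = DEFAULT_PATTERN,
                   timeshift: Optional[timedelta] = None) -> Dict[str, object]:
    """Extract site, timestamp (optionally corrected) and burst index from a filename."""
    match = re.search(pattern, name, flags=re.IGNORECASE)
    if match is None:
        raise ValueError(f"filename does not match pattern: {name}")
    fields = match.groupdict()
    taken = datetime.strptime(f"{fields['date']} {fields['time']}", "%Y-%m-%d %H-%M-%S")
    if timeshift is not None:
        # e.g. a camera clock set one hour behind -> timedelta(hours=1)
        taken += timeshift
    return {
        "site": fields["site"],
        "datetime": taken,
        "sequence": int(fields["seq"]) if fields["seq"] else None,
    }
```

Keeping the pattern user-supplied means the same function can handle different projects' conventions, and the corrected timestamp can flow into downstream processing without rewriting the files.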

5 August 2025 7:43am

Thank you for your reply!

It surely helps! We have used EXIF for a while to read metadata from images when that information is available. It could be nice to see if we could also “write” some of the data into the image metadata instead of just reading it. Really good idea with the filename changes and structure. I will add it to a list of possible improvements and see if/how we could implement it.

Again, thanks for the feedback 😊

2025 Workshop on Camera Traps, AI, and Ecology

4 August 2025 10:47am

How do you tackle the anomalous data during the COVID period when doing analysis?

21 July 2025 8:55am

25 July 2025 3:06pm

To clarify, are you talking about a model that carries out automated detection of vocalizations, or a model that detects specific patterns of behavior/movement? I suspect the former would not be much affected during training, as the fundamental vocalizations/input are not going to change drastically (although see Derryberry et al., who show variation in the spectral characteristics of sparrow song at short distance pre- and post-COVID lockdowns).

28 July 2025 6:14pm

I'm specifically referring to movement of animals affected by anthropogenic factors. My question has nothing to do with vocalisations.

Humans were essentially removed from large sections of the world during COVID, and that surely had some effects on wildlife movements, or at least I assume it did. But that would not be the regular "trend". If I try to predict the movement of a species over an area frequented by humans, human presence surely comes into the picture, and so does its absence.

My question is specifically about dealing with data that reflect the absence (or reduced interference) of humans in all habitats during the COVID period.

1 August 2025 10:24pm

You could just throw out that data, but I think you'd be doing yourself a disservice and missing out on some interesting insights. Are you training the AI with just pre-COVID animal movement data or are you including context on anthropogenic factors as well? Not sure if you are looking at an area that has available visitor/human population data, but if you include that with animal movement data across all years it should net out in the end.
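Keeping the COVID-period data and adding context on human activity can be sketched as attaching explicit covariates to each record instead of dropping it: a lockdown indicator and, where available, a human-presence measure such as daily visitor counts. The restriction dates and field names below are hypothetical placeholders; lockdown periods varied by country and region, so they would need to come from local records.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical restriction periods (inclusive); replace with local dates for the study area.
LOCKDOWNS: List[Tuple[date, date]] = [
    (date(2020, 3, 23), date(2020, 6, 15)),
    (date(2021, 1, 6), date(2021, 3, 8)),
]


@dataclass
class Fix:
    """One animal location fix (fields are illustrative)."""
    day: date
    x: float
    y: float


def in_lockdown(day: date, periods: Sequence[Tuple[date, date]] = LOCKDOWNS) -> bool:
    return any(start <= day <= end for start, end in periods)


def add_covariates(fixes: Sequence[Fix],
                   visitors_by_day: Optional[Dict[date, int]] = None) -> List[dict]:
    """Attach a lockdown flag and (if known) daily visitor count to each fix."""
    visitors = visitors_by_day or {}
    rows = []
    for f in fixes:
        rows.append({
            "day": f.day, "x": f.x, "y": f.y,
            "lockdown": int(in_lockdown(f.day)),
            # None marks days without human-activity data, not zero visitors
            "visitors": visitors.get(f.day),
        })
    return rows
```

A movement model fitted across all years can then estimate the effect of reduced human presence directly, rather than treating those months as anomalies to be removed.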

2 September 2025 7:21pm

Hello WildLabs community,

Here's an update on our progress with the AI-based marine mammal sound detection platform.

What We've Accomplished

We've completed our first milestone: a Windows executable for our Burrunan dolphin detection model. This executable allows our AI code to run independently without requiring Python or other dependencies to be installed on the user's machine.

This executable serves as the foundation for our web service - the same packaged code that runs locally will power the online platform, ensuring consistency between our local and web-based offerings.

Current Work

We're currently focused on two main areas:

Web Service Development

We're developing an online platform to make our models accessible via web browsers. The main challenge is finding the right hosting solution that balances cost-effectiveness with handling variable workloads.

Service Optimization

We're developing a more efficient version of our detection algorithms to reduce computational requirements and processing time. This optimisation benefits both the local executables and the planned web service.

Next Steps

Platform Expansion

Model Integration Approaches

We're evaluating two paths for handling multiple species models:

Web Service MVP

Model Library

A system that allows users to choose the species detection model for their acoustic data.
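A model library of this kind is often built as a registry that maps a species key to a loader, so a model is only loaded once a user picks it. A minimal sketch follows; the registry, the species key and the placeholder detector are hypothetical and do not reflect the team's Burrunan dolphin model or its interface.

```python
from typing import Callable, Dict, List, Sequence

# A detector takes audio samples and returns per-sample scores (interface assumed).
Detector = Callable[[Sequence[float]], List[float]]

_REGISTRY: Dict[str, Callable[[], Detector]] = {}


def register(species: str) -> Callable[[Callable[[], Detector]], Callable[[], Detector]]:
    """Decorator that records a loader function under a species key."""
    def wrap(loader: Callable[[], Detector]) -> Callable[[], Detector]:
        if species in _REGISTRY:
            raise ValueError(f"duplicate model key: {species}")
        _REGISTRY[species] = loader
        return loader
    return wrap


def available_models() -> List[str]:
    return sorted(_REGISTRY)


def load_model(species: str) -> Detector:
    """Instantiate the chosen model lazily, only when a user selects it."""
    loader = _REGISTRY.get(species)
    if loader is None:
        raise ValueError(f"no model for '{species}'; choose from {available_models()}")
    return loader()


@register("example_species")
def _load_example() -> Detector:
    # Placeholder "model": scores each sample by its absolute amplitude
    return lambda samples: [abs(s) for s in samples]
```

Lazy loading keeps memory use down when many species models are hosted, which matters for both the local executables and a web service with variable workloads.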

Here are some Burrunan dolphins!